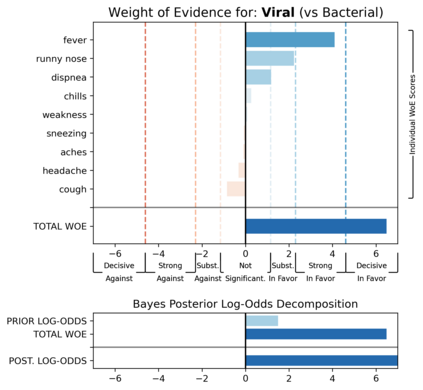

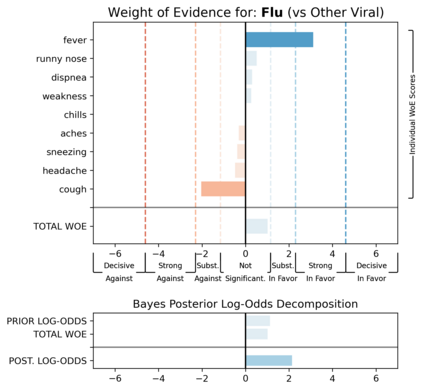

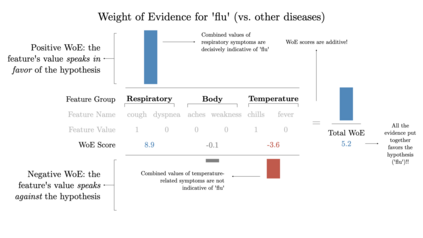

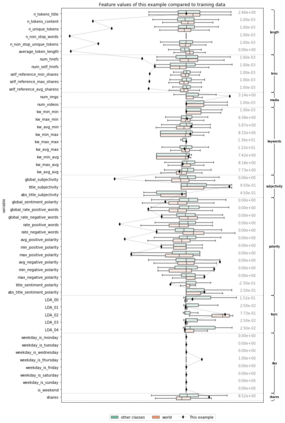

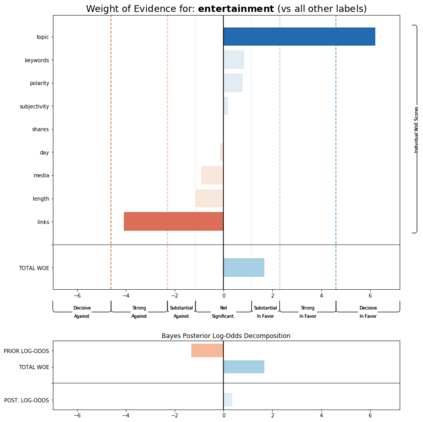

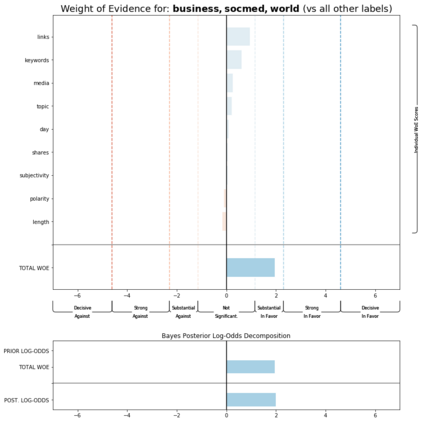

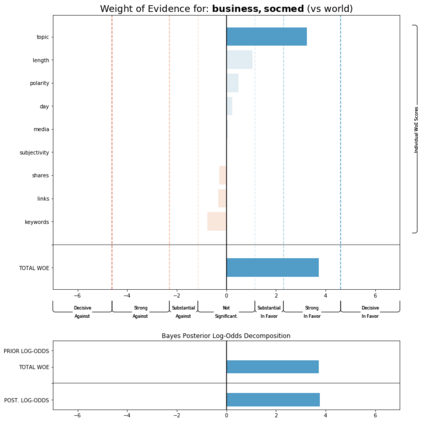

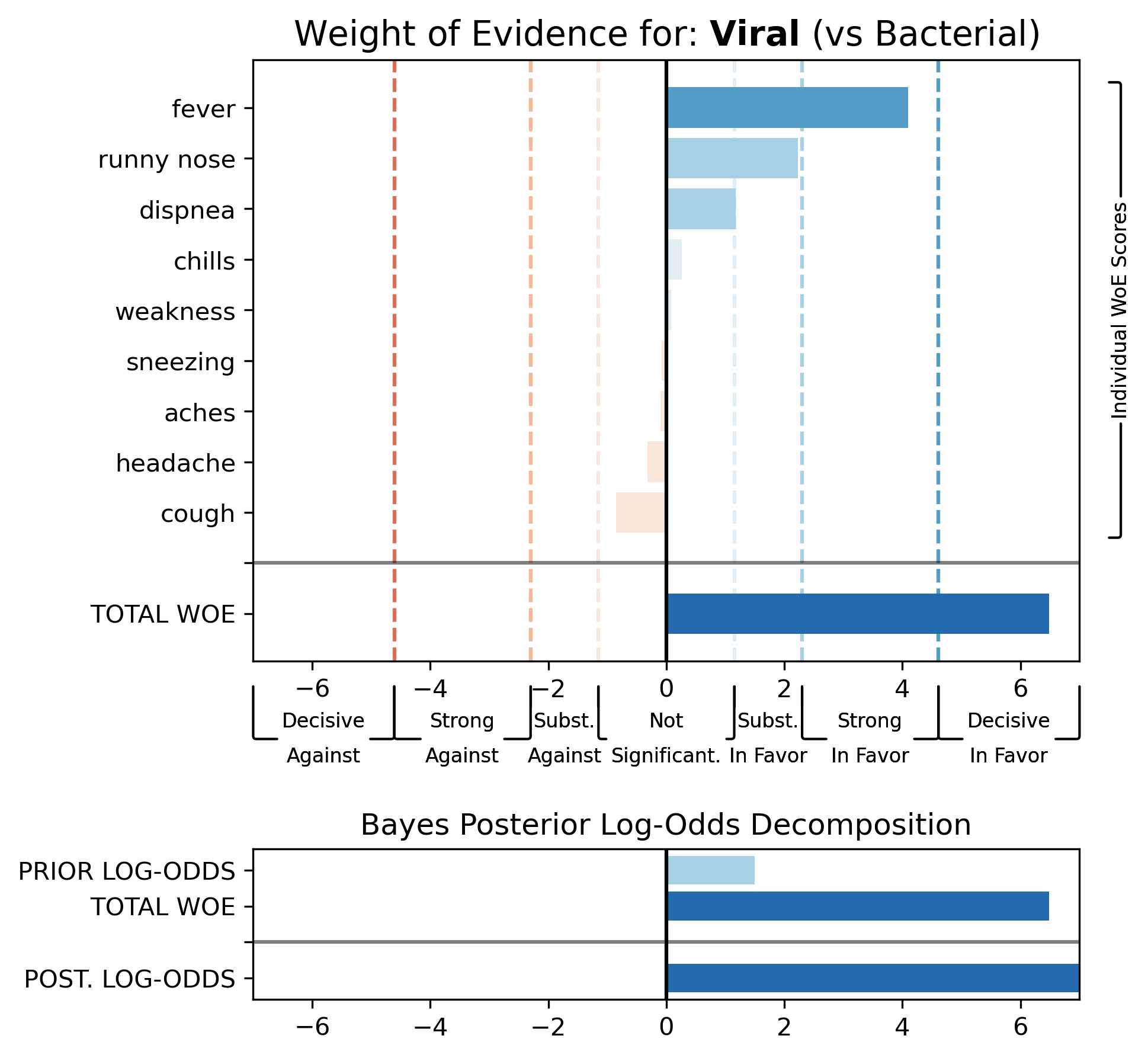

We take inspiration from the study of human explanation to inform the design and evaluation of interpretability methods in machine learning. First, we survey the literature on human explanation in philosophy, cognitive science, and the social sciences, and propose a list of design principles for machine-generated explanations that are meaningful to humans. Using the concept of weight of evidence from information theory, we develop a method for generating explanations that adhere to these principles. We show that this method can be adapted to handle high-dimensional, multi-class settings, yielding a flexible framework for generating explanations. We demonstrate that these explanations can be estimated accurately from finite samples and are robust to small perturbations of the inputs. We also evaluate our method through a qualitative user study with machine learning practitioners, where we observe that the resulting explanations are usable despite some participants struggling with background concepts like prior class probabilities. Finally, we conclude by surfacing design implications for interpretability tools in general.
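
For readers unfamiliar with the term, the weight of evidence in its standard binary form is the log-likelihood ratio of the evidence under a hypothesis versus its complement, and it equals the shift from prior log-odds to posterior log-odds. The sketch below illustrates only that textbook definition; it is not the method developed in the paper, which the abstract describes as extending to high-dimensional, multi-class settings. The function names and the numeric values are illustrative assumptions, not taken from the source.

```python
import math


def weight_of_evidence(p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Binary weight of evidence (in nats): log P(e | h) / P(e | not h)."""
    return math.log(p_e_given_h / p_e_given_not_h)


def log_odds(p: float) -> float:
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1.0 - p))


def posterior_from_woe(prior_h: float, woe: float) -> float:
    """Posterior P(h | e), using: posterior log-odds = prior log-odds + woe."""
    z = log_odds(prior_h) + woe
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical numbers: prior P(h) = 0.2, P(e | h) = 0.9, P(e | not h) = 0.3.
woe = weight_of_evidence(0.9, 0.3)
posterior = posterior_from_woe(0.2, woe)
print(f"woe = {woe:.4f} nats, posterior = {posterior:.4f}")
```

With these assumed numbers, the evidence is three times as likely under the hypothesis, so the weight of evidence is ln 3 (about 1.10 nats) and the posterior rises from 0.2 to about 0.43. The example also shows why the prior class probability matters when reading such an explanation: the same weight of evidence yields different posteriors under different priors.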
