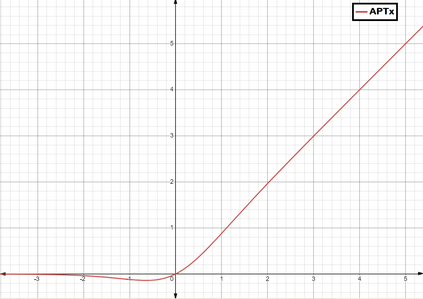

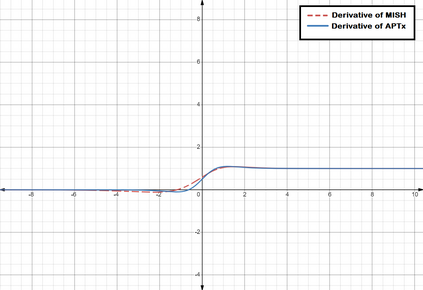

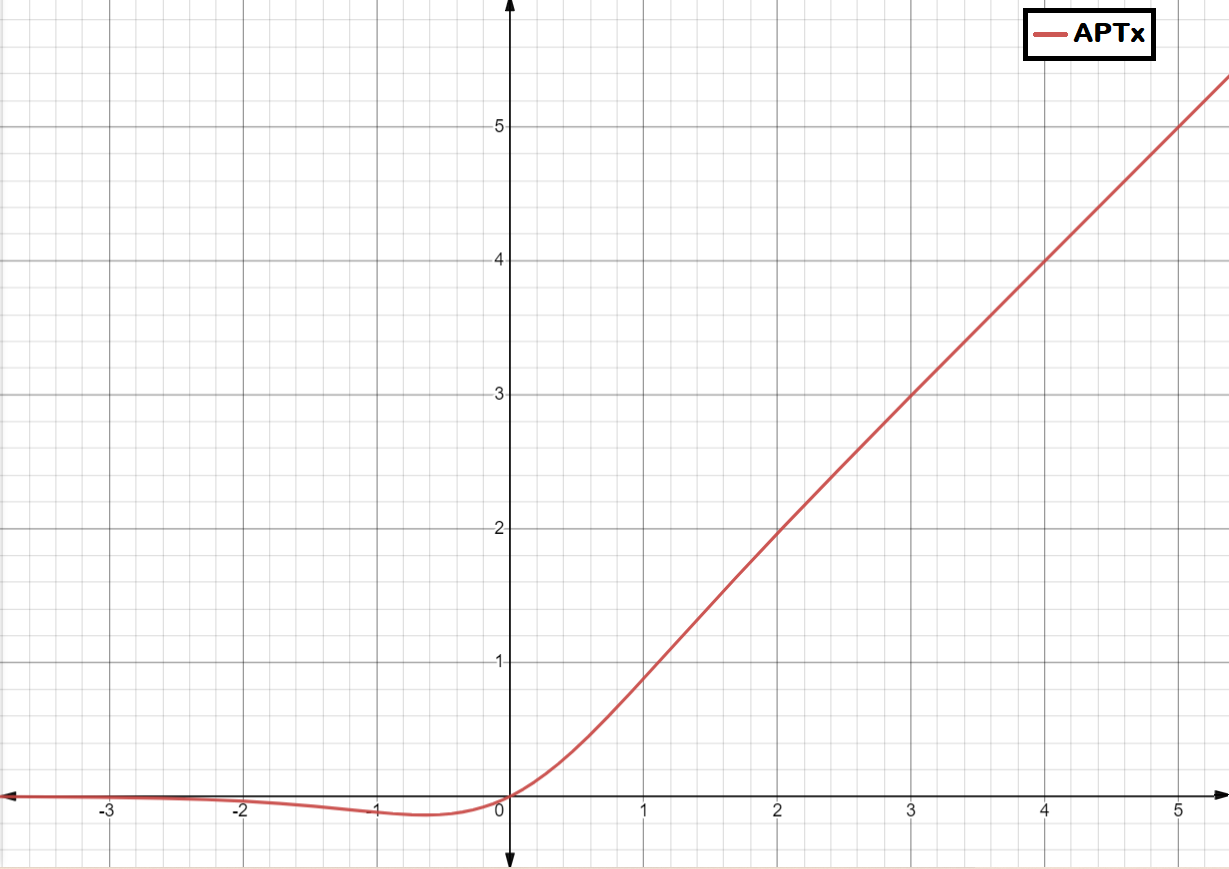

Activation functions introduce non-linearity into deep neural networks. This non-linearity helps neural networks learn faster and more efficiently from the dataset. In deep learning, many activation functions have been developed and are used depending on the type of problem statement. ReLU's variants, SWISH, and MISH are go-to activation functions. The MISH function is considered to have similar or even better performance than SWISH, and much better performance than ReLU. In this paper, we propose an activation function named APTx, which behaves similarly to MISH but requires fewer mathematical operations to compute. The lower computational requirements of APTx speed up model training and thus also reduce the hardware requirements of the deep learning model.
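
The abstract does not give the APTx formula. The sketch below therefore **assumes** the parameterized form φ(x) = (α + tanh(βx))·γx, with α = 1, β = 1, γ = 1/2 as the MISH-like setting. This is our recollection of the paper's definition and should be checked against the paper itself. The function names `relu`, `swish`, `mish`, and `aptx` are illustrative only. The sketch places the four activations side by side in plain Python and shows where the cost difference comes from. MISH needs `exp`, `log1p`, and `tanh` per input, while the assumed APTx form needs a single `tanh` plus a few multiplications and additions.

```python
import math


def relu(x: float) -> float:
    """ReLU: max(0, x)."""
    return max(0.0, x)


def sigmoid(z: float) -> float:
    """Logistic sigmoid, branched so math.exp never overflows."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x: float, beta: float = 1.0) -> float:
    """SWISH: x * sigmoid(beta * x); one exp, one division per input."""
    return x * sigmoid(beta * x)


def softplus(x: float) -> float:
    """Numerically stable softplus, log(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """MISH: x * tanh(softplus(x)); needs exp, log1p, and tanh per input."""
    return x * math.tanh(softplus(x))


def aptx(x: float, alpha: float = 1.0, beta: float = 1.0, gamma: float = 0.5) -> float:
    """ASSUMED APTx form: (alpha + tanh(beta * x)) * gamma * x.

    Needs one tanh plus a few multiplications/additions per input.
    The defaults are the MISH-like setting assumed in the lead-in.
    """
    return (alpha + math.tanh(beta * x)) * gamma * x


if __name__ == "__main__":
    xs = [i / 2 for i in range(-10, 11)]  # grid from -5.0 to 5.0 in steps of 0.5
    print(f"{'x':>5} {'ReLU':>8} {'SWISH':>8} {'MISH':>8} {'APTx':>8}")
    for x in xs:
        print(f"{x:5.1f} {relu(x):8.4f} {swish(x):8.4f} {mish(x):8.4f} {aptx(x):8.4f}")
    # Largest pointwise gap between MISH and the assumed APTx on the grid.
    gap = max(abs(mish(x) - aptx(x)) for x in xs)
    print(f"max |MISH - APTx| on the grid: {gap:.4f}")
```

Running the script prints the four activations on a grid from -5 to 5 and the largest MISH/APTx gap on that grid. One property follows directly from the assumed form: because (1 + tanh(x/2))/2 equals sigmoid(x), choosing α = 1, β = 1/2, γ = 1/2 reproduces SWISH with β = 1 exactly, so `aptx(x, 1.0, 0.5, 0.5)` matches `swish(x, 1.0)` up to rounding. Actual training speedups depend on how a framework implements `tanh` versus `softplus`, so these operation counts are only a rough guide.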
