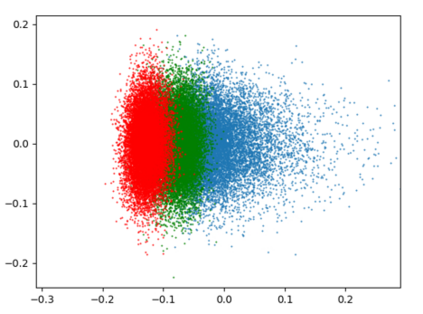

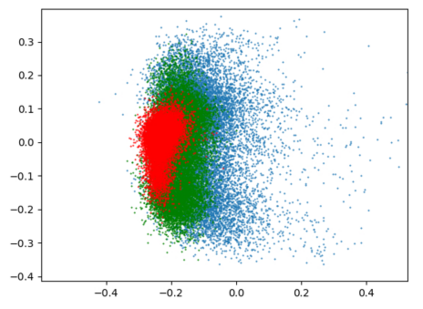

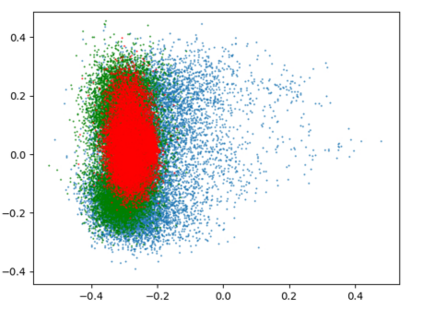

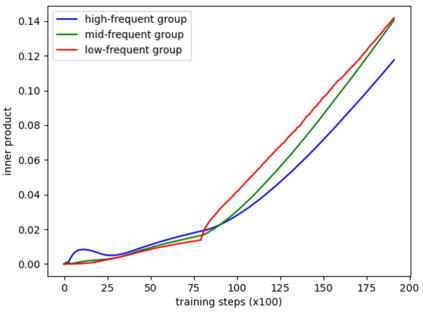

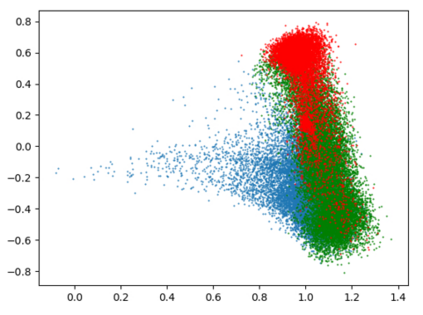

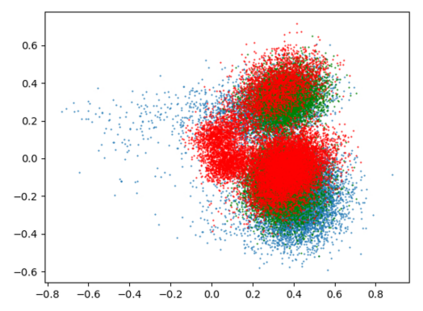

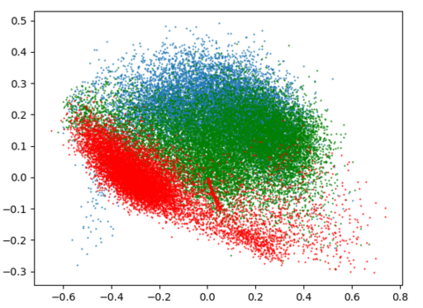

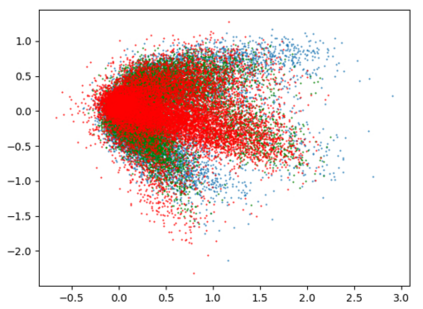

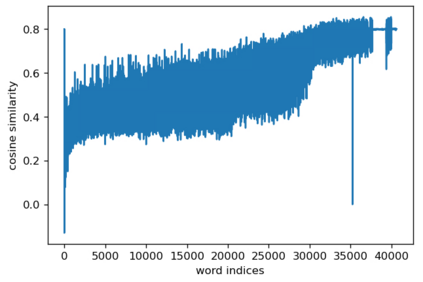

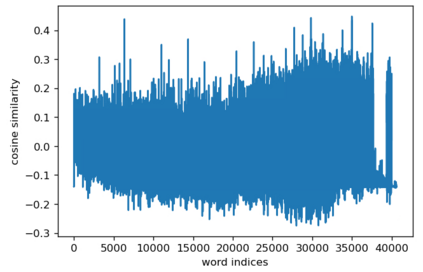

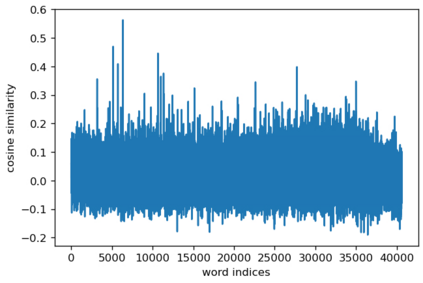

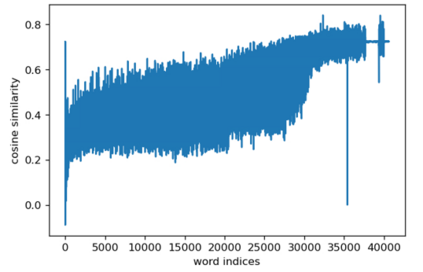

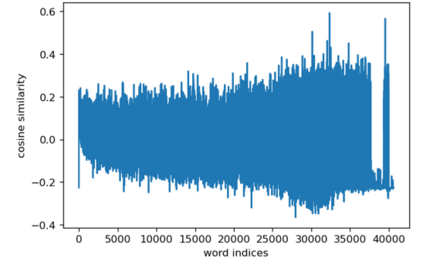

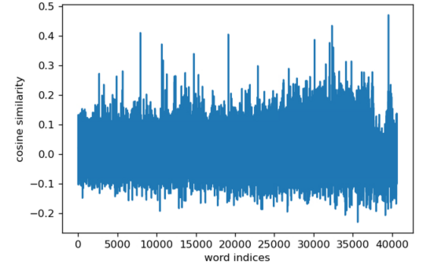

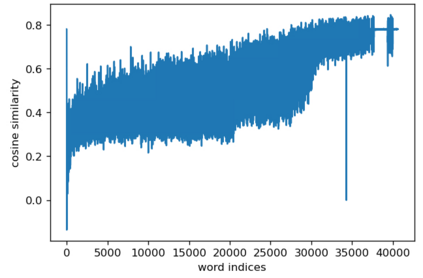

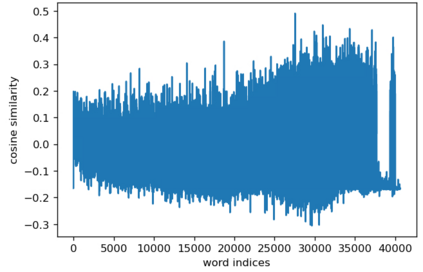

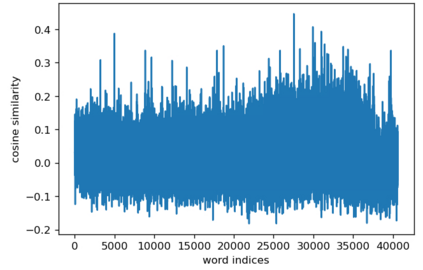

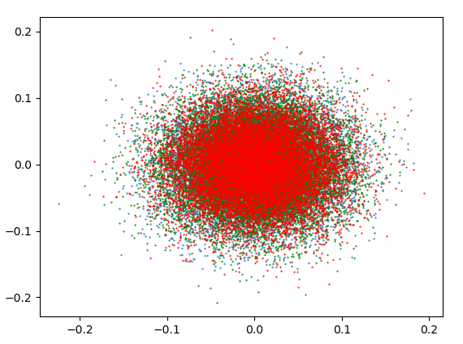

Despite advances in neural network language models, the representation degeneration problem of embeddings remains challenging. Recent studies have found that learned output embeddings degenerate into a narrow-cone distribution, which makes the similarity between any two embeddings positive. These studies treated the cause of the degeneration problem as common to most embeddings. However, we found that the degeneration problem originates especially from the training of rare-word embeddings. In this study, we analyze the intrinsic mechanism behind the degeneration of rare-word embeddings in terms of their gradients with respect to the negative log-likelihood loss. Furthermore, we demonstrate theoretically and empirically that the degeneration of rare-word embeddings causes the degeneration of non-rare-word embeddings, and that the overall degeneration problem can be alleviated by preventing the degeneration of rare-word embeddings. Based on these analyses, we propose a novel method, Adaptive Gradient Partial Scaling (AGPS), to address the degeneration problem. Experimental results demonstrate the effectiveness of the proposed method both qualitatively and quantitatively.
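The gradient mechanism mentioned above can be made concrete for a standard softmax output layer. The sketch below is a minimal illustration, assuming logits of the form z_i = w_i · h (hidden state h, output embedding w_i, no bias) and plain gradient descent; it does not implement AGPS, whose details are not given here. For the negative log-likelihood loss, the gradient with respect to w_i is (p_i − 1[i = y]) h. A rare word is almost never the target y, so nearly every update it receives is −η p_i h: a push along −h that is parallel for every non-target word in the same context. The helper names `nll_output_grads` and `mean_pairwise_cosine`, and the toy vectors, are hypothetical and chosen only for illustration; `mean_pairwise_cosine` is one simple way to quantify how cone-shaped a set of embeddings is.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def nll_output_grads(h, W, target):
    """Gradient of -log p(target | h) w.r.t. each output embedding W[i].

    Assumes logits z_i = <W[i], h> (no bias) and p = softmax(z),
    so dL/dW[i] = (p_i - 1[i == target]) * h.
    """
    p = softmax([dot(w, h) for w in W])
    return [
        [(p_i - (1.0 if i == target else 0.0)) * h_k for h_k in h]
        for i, p_i in enumerate(p)
    ]


def mean_pairwise_cosine(vectors):
    """Average cosine similarity over all distinct pairs (close to 1 = narrow cone)."""
    n = len(vectors)
    sims = [cosine(vectors[i], vectors[j]) for i in range(n) for j in range(i + 1, n)]
    return sum(sims) / len(sims)


if __name__ == "__main__":
    # Toy setup (hypothetical values): 4 output embeddings, one hidden state.
    h = [0.5, 1.0, -0.2]
    W = [[0.1, 0.3, 0.0], [0.2, -0.1, 0.4], [-0.3, 0.2, 0.1], [0.0, 0.0, 0.5]]
    target = 0  # token 0 is the observed word; tokens 1..3 are never the target here
    lr = 0.1

    grads = nll_output_grads(h, W, target)
    updates = [[-lr * g for g in grad] for grad in grads]  # plain gradient-descent steps

    # Every non-target embedding is pushed along -h, so their updates are parallel.
    print("cos(update_1, update_2) =", round(cosine(updates[1], updates[2]), 6))
    print("cos(update_1, h)        =", round(cosine(updates[1], h), 6))
    # Only the target embedding is pulled toward +h.
    print("cos(update_0, h)        =", round(cosine(updates[0], h), 6))
    # The per-token gradients sum to (sum(p) - 1) * h, i.e. zero up to rounding.
    print("sum of gradients        =", [sum(g[k] for g in grads) for k in range(len(h))])
    print("mean pairwise cosine(W) =", round(mean_pairwise_cosine(W), 4))
```

Because a rare word sits in the non-target role in almost every context, its accumulated update is a negative combination of many hidden states. If those hidden states share a common direction, the updates of different rare words share it too, which is the kind of shared drift the abstract attributes to rare-word training. Tracking `mean_pairwise_cosine` over rare-word embeddings during training is one possible way to observe it.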

翻译:尽管在神经网络语言模型方面有所进步,但嵌入的代谢变异问题仍然具有挑战性。最近的研究发现,所学的嵌入输出会退化成一个狭锥分布,使每个嵌入体之间的相似性成为阳性。分析变化问题的原因被证明是大多数嵌入体常见的。然而,我们发现,变化问题特别源于稀有文字嵌入的训练。在本研究中,我们分析了稀有文字嵌入变异的内在机制及其关于负日志类似丧失功能的梯度。此外,我们从理论上和经验上证明,稀有词嵌入体的变异导致非弧式嵌入体的变异,并且通过防止稀有文字嵌入体的变异,可以缓解整体的退化问题。我们根据我们的分析,提出了一种新颖的方法,即适应性梯度部分缩放(AGPS),以解决变异性的问题。实验结果表明拟议方法的质量和数量上的有效性。