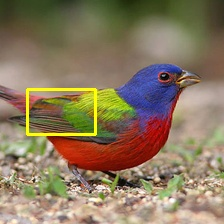

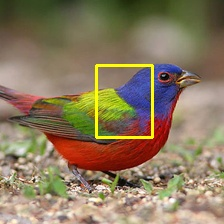

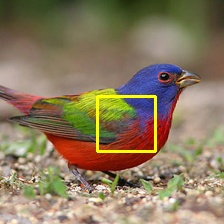

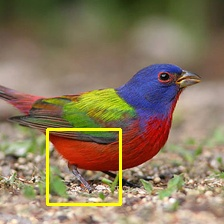

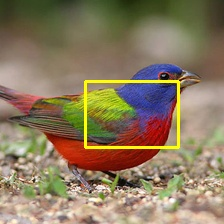

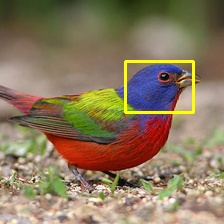

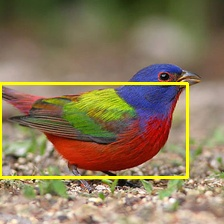

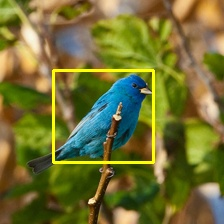

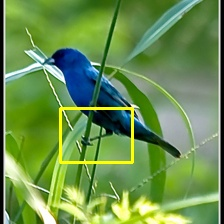

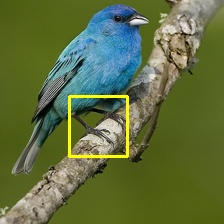

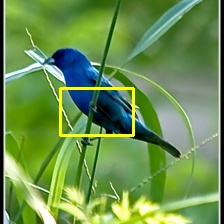

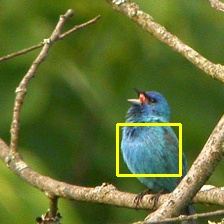

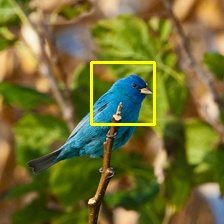

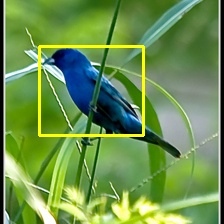

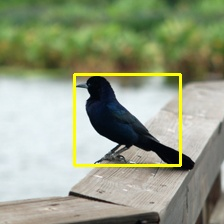

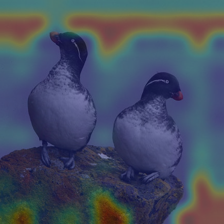

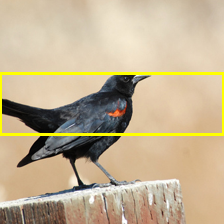

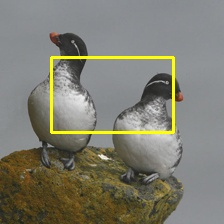

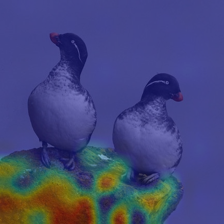

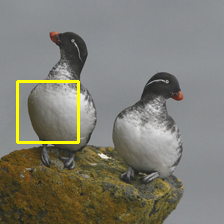

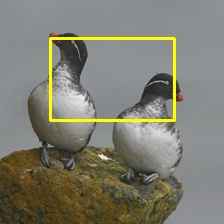

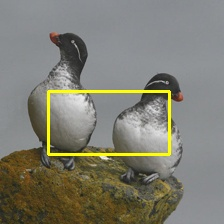

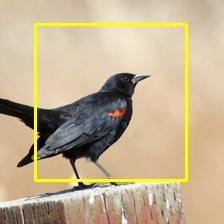

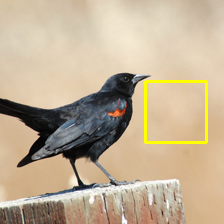

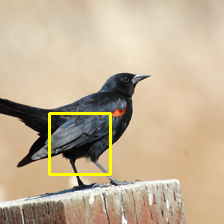

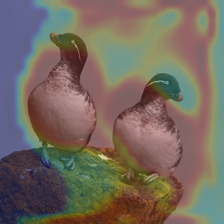

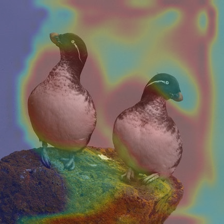

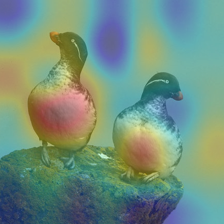

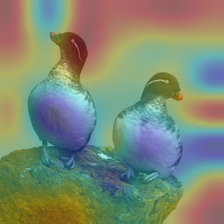

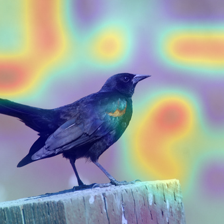

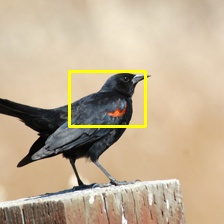

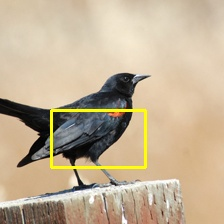

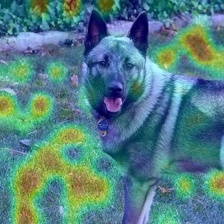

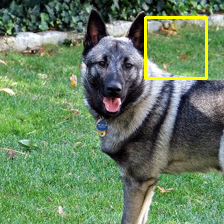

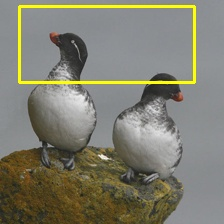

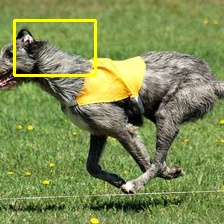

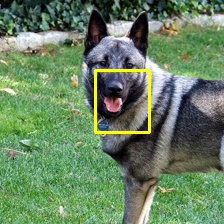

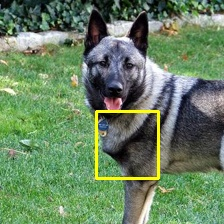

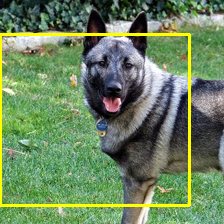

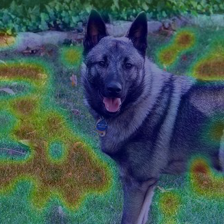

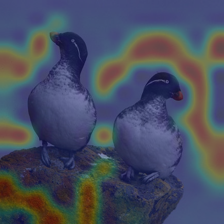

Prototypical part network (ProtoPNet) has drawn wide attention and boosted many follow-up studies due to its self-explanatory property for explainable artificial intelligence (XAI). However, when directly applying ProtoPNet on vision transformer (ViT) backbones, learned prototypes have a ''distraction'' problem: they have a relatively high probability of being activated by the background and pay less attention to the foreground. The powerful capability of modeling long-term dependency makes the transformer-based ProtoPNet hard to focus on prototypical parts, thus severely impairing its inherent interpretability. This paper proposes prototypical part transformer (ProtoPFormer) for appropriately and effectively applying the prototype-based method with ViTs for interpretable image recognition. The proposed method introduces global and local prototypes for capturing and highlighting the representative holistic and partial features of targets according to the architectural characteristics of ViTs. The global prototypes are adopted to provide the global view of objects to guide local prototypes to concentrate on the foreground while eliminating the influence of the background. Afterwards, local prototypes are explicitly supervised to concentrate on their respective prototypical visual parts, increasing the overall interpretability. Extensive experiments demonstrate that our proposed global and local prototypes can mutually correct each other and jointly make final decisions, which faithfully and transparently reason the decision-making processes associatively from the whole and local perspectives, respectively. Moreover, ProtoPFormer consistently achieves superior performance and visualization results over the state-of-the-art (SOTA) prototype-based baselines. Our code has been released at https://github.com/zju-vipa/ProtoPFormer.

翻译:原始部分网络(ProtoPNet)吸引了广泛的关注,并增加了许多后续研究,原因是其对于可解释的人工智能(XAI)具有自我解释性属性。然而,当直接将ProtoPNet直接应用于视觉变压器(VIT)主干网时,所学的原型有一个“二进制”问题:它们具有相对较高的被背景激活的概率,对前景的注意较少。长期依赖性模型的强大能力使得基于变压器的ProtoPNet难以专注于原型部分,从而严重地损害其内在的可解释性。本文建议了原型的直观变压器(ProtoPFormer),以便适当和有效地应用原型方法,与VIT(VIT)主干网(VIT)主干网(Vistraction)一起应用原型方法来适当和有效地应用原型(ProtoPNet)方法来识别和突出目标的整体整体和局部整体特征。全球原型模型被采用的目的是为当地原型(在消除背景影响的情况下,集中关注原型的原型)地方原型,最后的原型(Prototoalalalalalalalal) imalalalalalal) 和(O) imalalal) imalalalalalalationalationalalalalal-heuteal 将分别进行各自的决定,从而可以明确地分析。