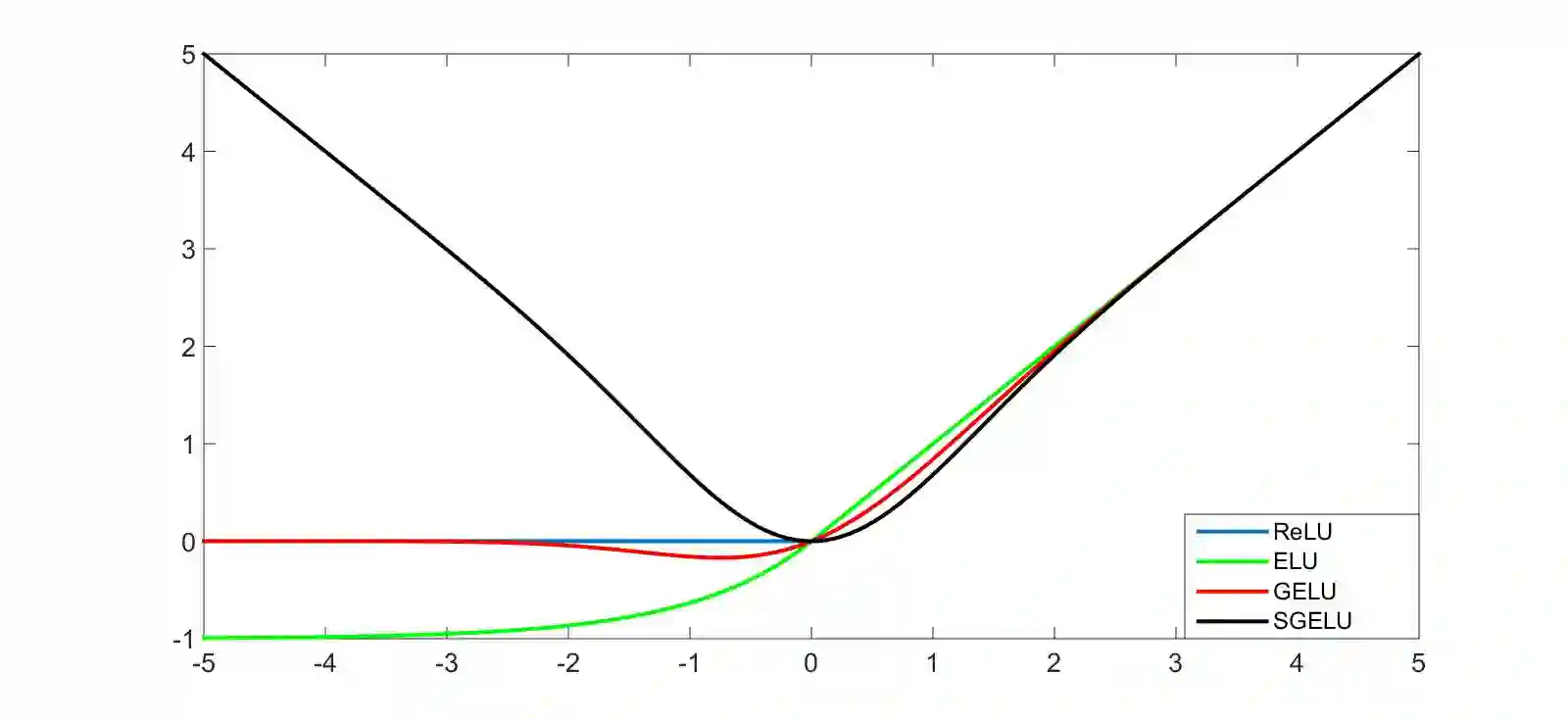

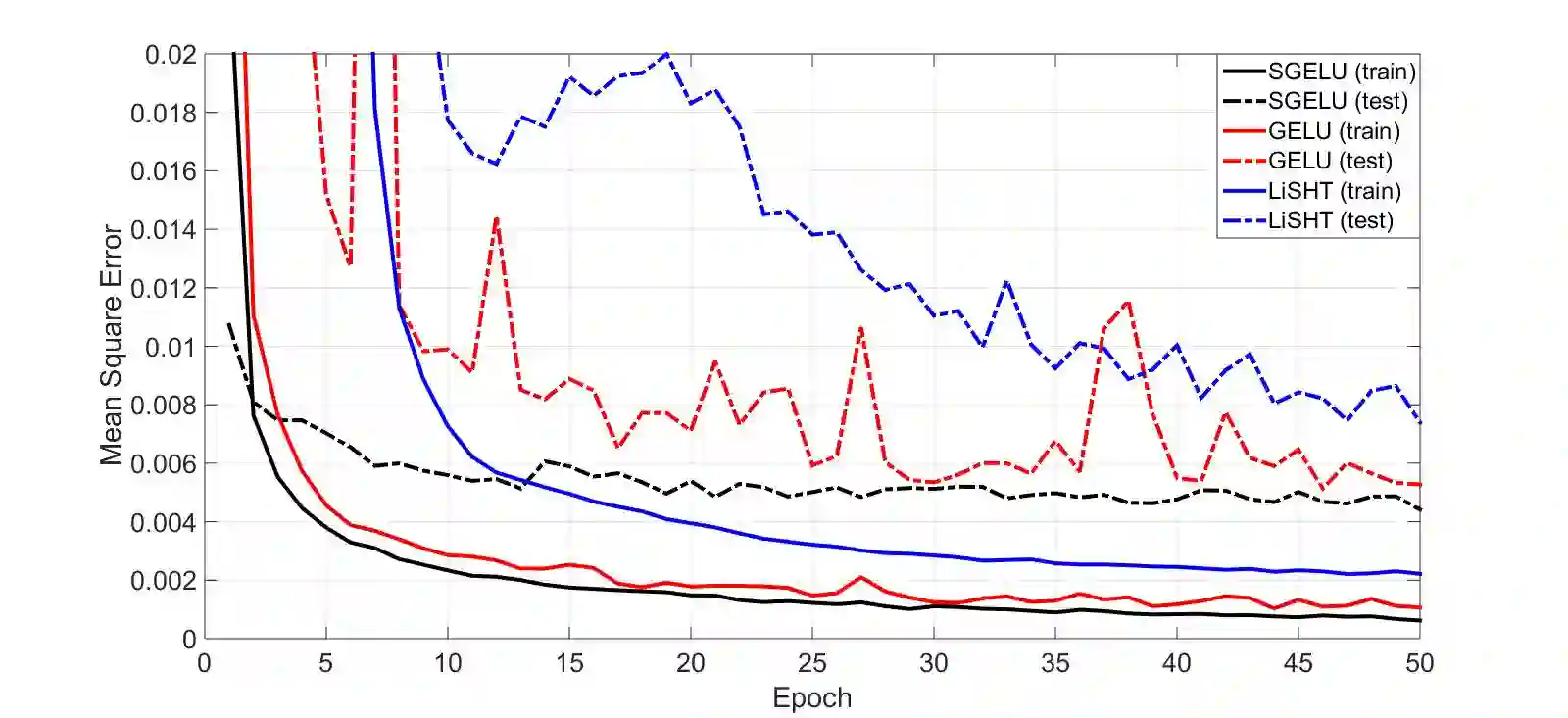

In this paper, a novel neural network activation function, called the Symmetrical Gaussian Error Linear Unit (SGELU), is proposed to improve network performance. SGELU is obtained by combining the stochastic-regularizer property of the Gaussian Error Linear Unit (GELU) with a symmetrical characteristic. By merging these two merits, the proposed unit gains the capability of bidirectional convergence, which allows it to optimize the network successfully without suffering from the vanishing gradient problem. SGELU is evaluated against GELU and the Linearly Scaled Hyperbolic Tangent (LiSHT) on MNIST classification and on an MNIST auto-encoder, and these experiments validate its performance and convergence rate in both applications.
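The abstract gives no formulas, so the following is only a sketch under a stated assumption. GELU is x·Φ(x), with Φ the standard normal CDF, and LiSHT is x·tanh(x). For SGELU we *assume* the symmetric form α·x·erf(x/√2), where α is a scale hyperparameter; this formula and the names `sgelu`, `sgelu_grad` and `alpha` are our illustration, not taken from the text above. The sketch shows that the assumed SGELU is an even function like LiSHT, and that its gradient tends to ±α for large |x|, whereas GELU's gradient vanishes for large negative inputs. This is one plausible reading of "bidirectional convergence".

```python
import math

SQRT2 = math.sqrt(2.0)


def gelu(x: float) -> float:
    """GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / SQRT2))


def gelu_grad(x: float) -> float:
    """d/dx GELU = Phi(x) + x * phi(x), with phi the standard normal PDF."""
    phi = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return 0.5 * (1.0 + math.erf(x / SQRT2)) + x * phi


def lisht(x: float) -> float:
    """LiSHT: x * tanh(x), an even (symmetric) function."""
    return x * math.tanh(x)


def sgelu(x: float, alpha: float = 1.0) -> float:
    """ASSUMED SGELU form: alpha * x * erf(x / sqrt(2)).

    erf is odd, so x * erf(x / sqrt(2)) is even: sgelu(-x) == sgelu(x).
    """
    return alpha * x * math.erf(x / SQRT2)


def sgelu_grad(x: float, alpha: float = 1.0) -> float:
    """Derivative of the assumed SGELU.

    d/dx [alpha * x * erf(x/sqrt2)]
        = alpha * (erf(x/sqrt2) + x * sqrt(2/pi) * exp(-x^2 / 2))
    """
    return alpha * (math.erf(x / SQRT2)
                    + x * math.sqrt(2.0 / math.pi) * math.exp(-0.5 * x * x))


if __name__ == "__main__":
    # Compare values and gradients on both sides of zero.
    print("    x     GELU    LiSHT    SGELU   dGELU   dSGELU")
    for x in (-4.0, -2.0, -0.5, 0.0, 0.5, 2.0, 4.0):
        print(f"{x:5.1f} {gelu(x):8.4f} {lisht(x):8.4f} {sgelu(x):8.4f} "
              f"{gelu_grad(x):7.4f} {sgelu_grad(x):8.4f}")
```

With α = 1, the assumed form satisfies x·erf(x/√2) = 2·GELU(x) - x, so it can be read as a symmetrized GELU. Its derivative is an odd function: large positive inputs get gradients near +α and large negative inputs get gradients near -α, while GELU's derivative decays toward 0 as x goes to -∞. Whether this matches the paper's exact definition should be confirmed against the full text.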

翻译:在本文中,拟设立一个新型神经网络激活功能,称为Symmatical Gaussian 错误线性单元(SGELU),以取得很高的性能,其实现方式是将高山错误线性单元(GELU)中随机调节器的特性与对称特性有效结合,与这两个优点相结合,拟议单元提出了双向趋同能力,以便在没有梯度缩小问题的情况下成功地优化网络。 SGELU对GELU和线性缩放超单曲坦肯特(LiSHT)的评价是根据MMSIS分类和MNIST自动编码器进行的,这些分类和自动编码为这些应用的性能和趋同率提供了很大的验证。