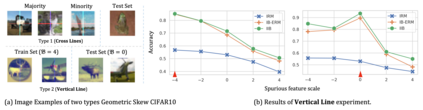

Invariant risk minimization (IRM) has recently emerged as a promising alternative for domain generalization. Nevertheless, its loss function is difficult to optimize for nonlinear classifiers, and the original optimization objective can fail when pseudo-invariant features and geometric skews exist. Inspired by IRM, in this paper we propose a novel formulation for domain generalization, dubbed invariant information bottleneck (IIB). IIB aims at minimizing invariant risks for nonlinear classifiers while simultaneously mitigating the impact of pseudo-invariant features and geometric skews. Specifically, we first present a novel formulation for invariant causal prediction via mutual information. We then adopt the variational formulation of the mutual information to develop a tractable loss function for nonlinear classifiers. To overcome the failure modes of IRM, we propose to minimize the mutual information between the inputs and the corresponding representations. IIB significantly outperforms IRM on synthetic datasets in which pseudo-invariant features and geometric skews occur, showing the effectiveness of the proposed formulation in overcoming the failure modes of IRM. Furthermore, experiments on DomainBed show that IIB outperforms $13$ baselines by $0.9\%$ on average across $7$ real datasets.
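To make the idea of penalizing the mutual information between inputs and representations more concrete, here is a minimal sketch of a generic variational information bottleneck loss. It is not the loss from the paper: it assumes a diagonal Gaussian encoder with a standard normal prior, uses the resulting KL divergence as an upper bound on the input-representation mutual information, and omits the invariance term across environments entirely. The function names and the weight `beta` are hypothetical and chosen for illustration only.

```python
import numpy as np


def gaussian_kl_to_standard_normal(mu, log_var):
    """Per-example KL(N(mu, diag(exp(log_var))) || N(0, I)).

    Under the assumptions above, its mean upper-bounds I(X; Z).
    """
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=1)


def cross_entropy(logits, labels):
    """Per-example softmax cross-entropy with integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]


def bottleneck_loss(mu, log_var, logits, labels, beta=1e-3):
    """Prediction loss plus beta times the compression penalty.

    `beta` is a hypothetical trade-off weight, not a value from the paper.
    """
    ce = cross_entropy(logits, labels).mean()
    kl = gaussian_kl_to_standard_normal(mu, log_var).mean()
    return ce + beta * kl, ce, kl


# Toy inputs: an encoder output equal to the prior and uninformative logits.
mu = np.zeros((4, 3))
log_var = np.zeros((4, 3))
logits = np.zeros((4, 2))
labels = np.array([0, 1, 0, 1])
total, ce, kl = bottleneck_loss(mu, log_var, logits, labels)
```

In this toy call the encoder matches the prior, so the compression penalty is zero, and the uniform two-class logits give a cross-entropy of $\log 2$. In a trained model, raising `beta` would push representations to discard more information about the inputs.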
