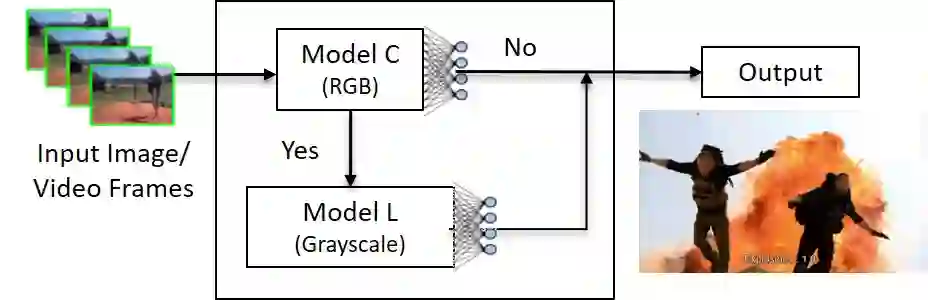

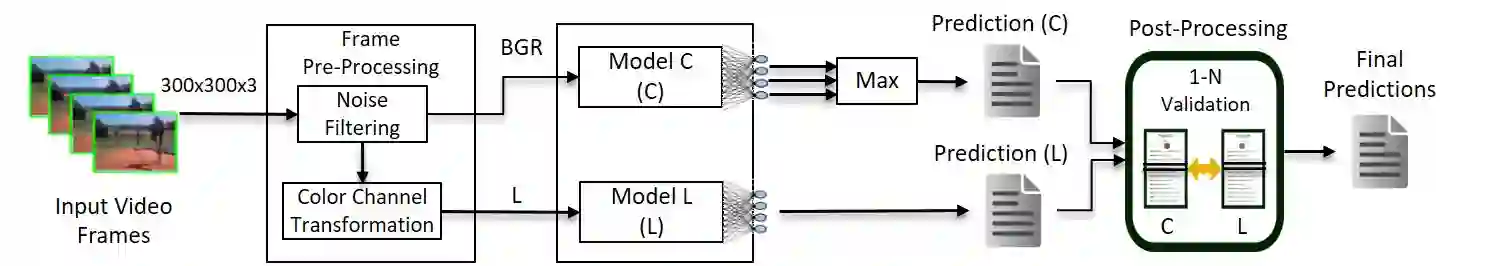

To facilitate the implementation of high-accuracy deep neural networks, especially on resource-constrained devices, keeping computation requirements low is crucial. Using very deep models for classification not only slows neural network training and increases inference time, but also requires more data to achieve higher prediction accuracy and to mitigate false positives. In this paper, we propose an efficient and lightweight deep classification ensemble structure based on a combination of simple color features, designed specifically for "high-accuracy" image classification with low false positives. We designed, implemented, and evaluated our approach for an explosion detection use case applied to images and videos. Our evaluation on a large test set shows considerable improvements in prediction accuracy compared to the popular ResNet-50 model, while benefiting from 7.64x faster inference and lower computation cost. Although we applied our approach to explosion detection, it is general and can be applied to other similar classification use cases as well. Based on the insight gained from our experiments, we propose a "think small, think many" philosophy for classification scenarios: transforming a single, large, monolithic deep model into a verification-based step model ensemble of multiple small, simple, lightweight models with narrowed-down color spaces may lead to predictions with higher accuracy.
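A minimal sketch of one plausible reading of the "verification-based step model ensemble" idea follows. It assumes that each small model acts as a sequential verifier and that the ensemble predicts the positive class (e.g. explosion) only when every stage agrees. The names `cascade_predict`, `cascade_false_positive_rate`, `red_dominance`, and `brightness` are hypothetical, and the two toy scoring functions merely stand in for small CNNs trained on narrowed color features; this is an illustration under those assumptions, not the authors' implementation.

```python
import math
from typing import Callable, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each in 0..255
Model = Callable[[Sequence[Pixel]], float]  # returns a score for the positive class


def cascade_predict(models: Sequence[Model], pixels: Sequence[Pixel],
                    threshold: float = 0.5) -> bool:
    """Predict positive only if every model, in order, scores >= threshold.

    Evaluation stops at the first model that rejects the input, so most
    negatives are classified after running only the first stage.
    """
    for model in models:
        if model(pixels) < threshold:
            return False  # this stage did not verify the positive prediction
    return True


def cascade_false_positive_rate(stage_fprs: Sequence[float]) -> float:
    """False-positive rate of the all-must-agree cascade.

    ASSUMPTION: stage errors are statistically independent. Positively
    correlated errors make the true rate higher than this product.
    """
    return math.prod(stage_fprs)


# Hypothetical toy "models", each looking at one narrowed color feature.
def red_dominance(pixels: Sequence[Pixel]) -> float:
    """Fraction of pixels whose red channel is strictly the largest."""
    return sum(1 for r, g, b in pixels if r > g and r > b) / len(pixels)


def brightness(pixels: Sequence[Pixel]) -> float:
    """Mean per-pixel intensity, scaled to [0, 1]."""
    return sum((r + g + b) / 3 for r, g, b in pixels) / (255 * len(pixels))


if __name__ == "__main__":
    fire_like = [(250, 120, 30), (240, 200, 60)]
    dark_blue = [(10, 20, 200), (5, 5, 90)]
    stages = [red_dominance, brightness]
    print(cascade_predict(stages, fire_like))        # True
    print(cascade_predict(stages, dark_blue))        # False (rejected at stage 1)
    print(cascade_false_positive_rate([0.5, 0.25]))  # 0.125
```

Returning a negative as soon as any stage rejects the input keeps average inference cheap, and requiring agreement from several models is what suppresses false positives. The same all-must-agree rule also lowers the true-positive rate, and the product formula is only an optimistic estimate because real models trained on related data rarely make independent errors.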