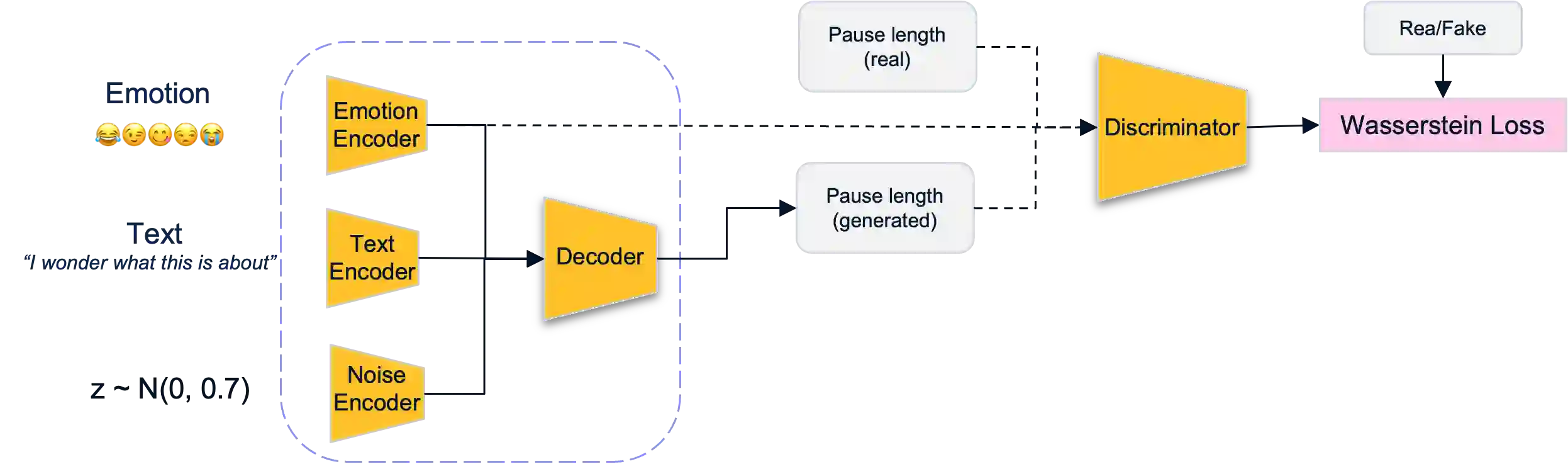

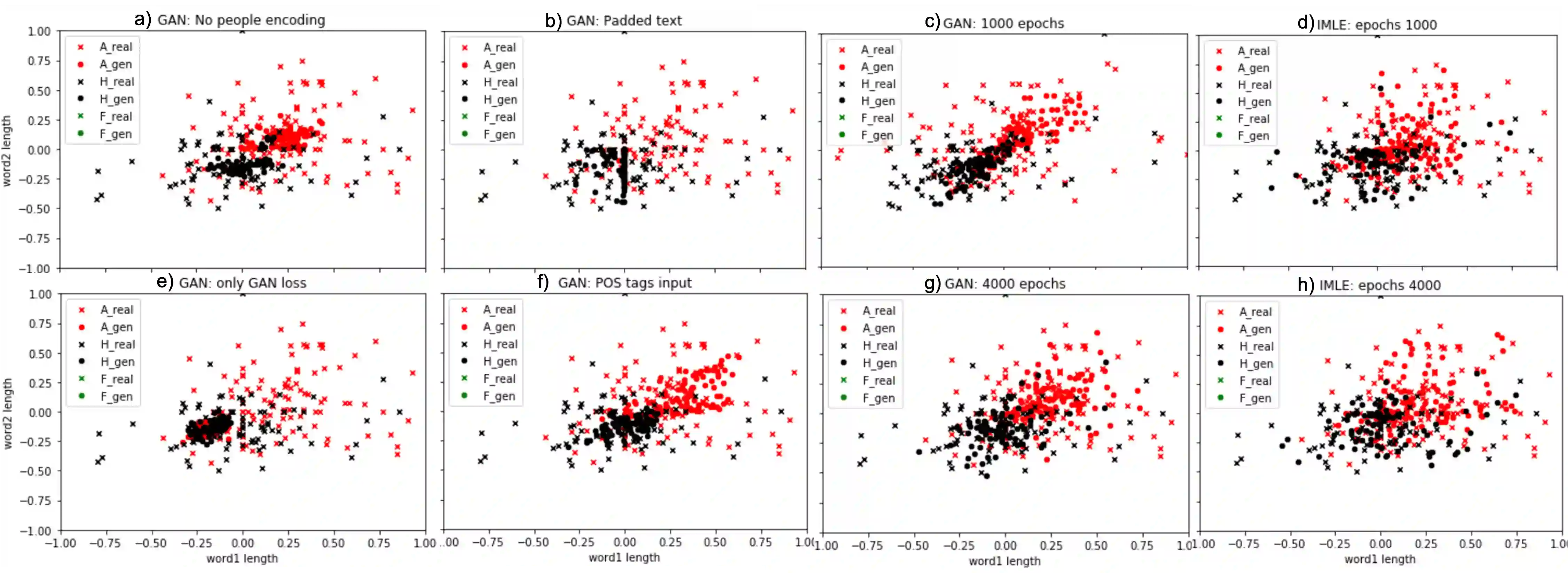

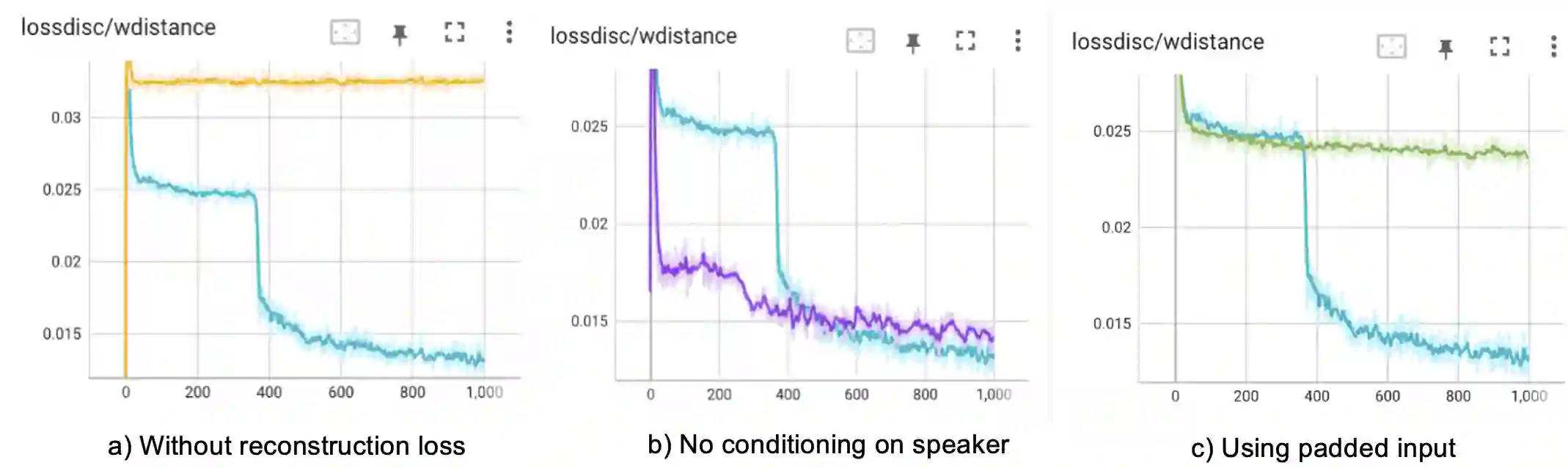

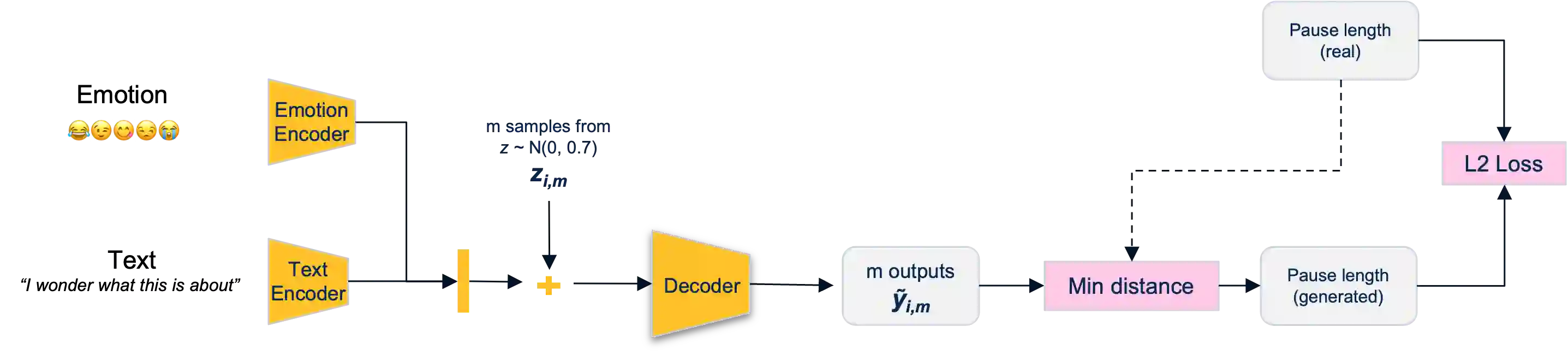

Voice synthesis has improved significantly over the past decade and now produces highly intelligible voices. Subsequent work has produced models that can generate variable speech, including conditional emotional expression. However, these approaches have focused on phrase-level modifications and prosodic vocal features. Using the CREMA-D dataset, we trained a GAN conditioned on emotion to generate word lengths for a given input text. These word lengths are relative to neutral speech and can be passed to a text-to-speech (TTS) system through Speech Synthesis Markup Language (SSML) to generate more expressive speech. We also train a generative model using implicit maximum likelihood estimation (IMLE) and include a comparative analysis with GANs. Compared with an out-of-the-box model, our approach achieves better performance on objective measures for neutral speech and better time alignment for happy speech. However, further subjective evaluation is required.
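The abstract does not say exactly how the relative word lengths are encoded in SSML. A minimal sketch, assuming each word is wrapped in an SSML `<prosody>` tag whose `rate` percentage is the inverse of its length ratio (a ratio of 1.25, i.e. 25% longer than neutral, becomes `rate="80%"`). The function name `word_lengths_to_ssml` is hypothetical, and real TTS engines map `rate` to duration only approximately.

```python
from xml.sax.saxutils import escape


def word_lengths_to_ssml(words, relative_lengths):
    """Wrap each word in an SSML <prosody> tag that stretches or compresses it.

    Hypothetical helper. Assumption: relative_lengths[i] is the word's duration
    divided by its neutral duration (1.0 = neutral), so the speaking rate is set
    to 100 / ratio percent of the default rate.
    """
    if len(words) != len(relative_lengths):
        raise ValueError("need exactly one relative length per word")
    parts = []
    for word, ratio in zip(words, relative_lengths):
        if ratio <= 0:
            raise ValueError(f"relative length must be positive, got {ratio}")
        # Longer word -> slower rate; SSML expresses rate as a percentage.
        rate = round(100.0 / ratio)
        # Escape &, <, > so the generated SSML stays well-formed XML.
        parts.append(f'<prosody rate="{rate}%">{escape(word)}</prosody>')
    return "<speak>" + " ".join(parts) + "</speak>"


if __name__ == "__main__":
    # Illustrative ratios only, not model output.
    print(word_lengths_to_ssml(["I", "won", "again"], [1.0, 1.25, 0.8]))
```

Per-word `<prosody>` tags keep the mapping transparent, but a strict W3C SSML 1.1 document would also declare `version`, `xmlns`, and `xml:lang` on the `<speak>` root; many engines accept the bare form shown here.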

翻译:过去十年来,语音合成工作有了显著改善,产生了非常易理解的声音;进一步的调查产生了能够产生可变言论的模型,包括有条件的情感表达;但问题在于侧重于语句一级的修改和主动声音特征;利用CREMA-D数据集,我们训练了一个以情感为条件的GAN数据集,以情感为条件,为特定输入文本创造有价值的长度;这些字长与中性语言有关,可以通过语音合成标记语言(MLSS)提供给文本到语音系统,以产生更清晰的言论;此外,还利用隐含的最大可能性估计(IMLE)和与GANs的比较分析对基因模型进行了培训;我们能够在中立言论的客观措施上取得更好的表现,并在与箱外模式相比,为快乐的言论更好地调整时间。然而,还需要进一步调查主观评价。