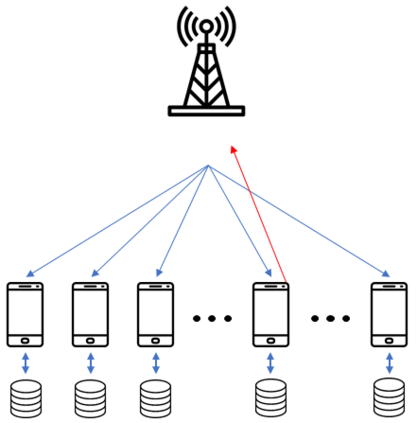

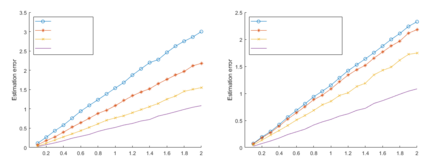

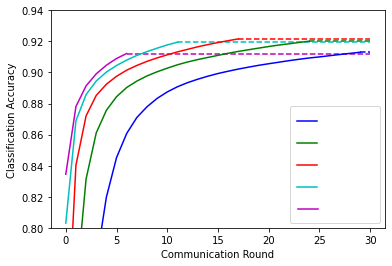

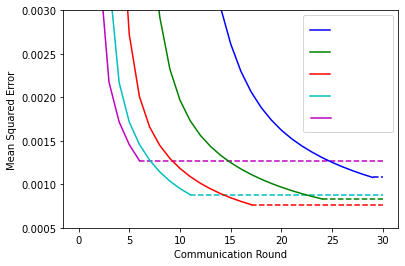

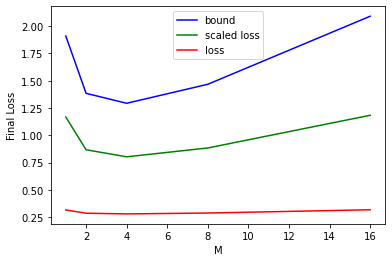

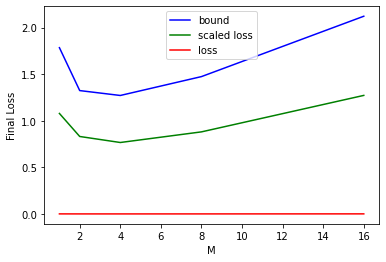

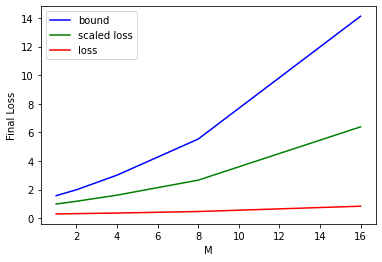

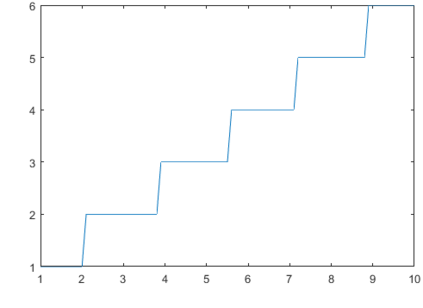

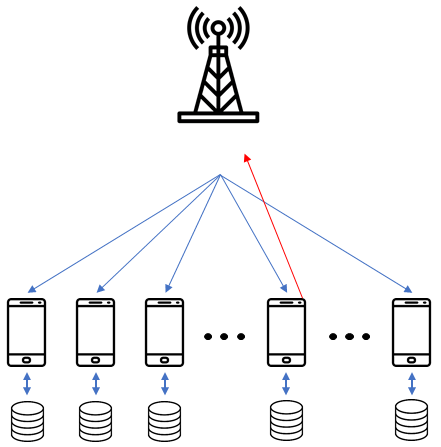

Motivated by the increasing computational capabilities of wireless devices, as well as unprecedented levels of user- and device-generated data, new distributed machine learning (ML) methods have emerged. In the wireless community, Federated Learning (FL) is of particular interest due to its communication efficiency and its ability to handle non-IID data. FL training can be accelerated by a wireless communication method called Over-the-Air Computation (AirComp), which harnesses the interference of simultaneous uplink transmissions to efficiently aggregate model updates. However, since AirComp relies on analog communication, it inevitably introduces estimation errors. In this paper, we study the impact of such estimation errors on the convergence of FL and propose retransmissions as a method to improve FL convergence over resource-constrained wireless networks. First, we derive the optimal AirComp power control scheme with retransmissions over static channels. Then, we investigate the performance of Over-the-Air FL with retransmissions and derive two upper bounds on the FL loss function. Finally, we propose a heuristic for selecting the optimal number of retransmissions, which can be computed before training the ML model. Numerical results demonstrate that introducing retransmissions can improve ML performance without incurring extra costs in terms of communication or computation. Additionally, we provide simulation results for our heuristic, which indicate that it can correctly identify the optimal number of retransmissions for different wireless network setups and machine learning problems.
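
To illustrate why retransmissions can reduce AirComp estimation error, the following is a minimal Python sketch of a toy model. It is not the optimal power control scheme derived in the paper. It assumes static real-valued channel gains known at the devices. It uses a simple channel-inversion scheme with a common scaling factor eta, limited by the weakest device's peak power. It assumes updates normalized to |x| <= 1 and independent Gaussian receiver noise in each retransmission slot, with the server averaging the R received copies. All function names (`aircomp_round`, `theoretical_mse`, `empirical_mse`) and all numeric values are hypothetical. Under these assumptions, the estimation MSE drops from sigma^2/eta to sigma^2/(R*eta).

```python
import math
import random


def aircomp_round(updates, gains, peak_power, noise_std, retransmissions, rng):
    """One hypothetical AirComp aggregation of scalar updates (toy model)."""
    # Common amplitude sqrt(eta) is limited by the weakest channel: device k
    # transmits b_k = sqrt(eta) / h_k * x_k, so |b_k|^2 <= peak_power holds
    # for every k when |x_k| <= 1 (assumed normalization).
    sqrt_eta = math.sqrt(peak_power) * min(abs(h) for h in gains)
    estimates = []
    for _ in range(retransmissions):
        # Superposition over the multiple-access channel: sum_k h_k * b_k + noise.
        received = sum(h * (sqrt_eta / h) * x for h, x in zip(gains, updates))
        received += rng.gauss(0.0, noise_std)
        # De-scale by sqrt(eta) to estimate sum_k x_k from this copy.
        estimates.append(received / sqrt_eta)
    # Averaging R independent noisy copies shrinks the noise variance by 1/R.
    return sum(estimates) / len(estimates)


def theoretical_mse(gains, peak_power, noise_std, retransmissions):
    # In this toy model: MSE = noise_std^2 / (retransmissions * eta).
    eta = peak_power * min(abs(h) for h in gains) ** 2
    return noise_std ** 2 / (retransmissions * eta)


def empirical_mse(updates, gains, peak_power, noise_std, retransmissions,
                  trials=20000, seed=0):
    # Monte Carlo estimate of the aggregation MSE against the true sum.
    rng = random.Random(seed)
    target = sum(updates)
    total = 0.0
    for _ in range(trials):
        est = aircomp_round(updates, gains, peak_power, noise_std,
                            retransmissions, rng)
        total += (est - target) ** 2
    return total / trials


if __name__ == "__main__":
    updates = [0.5, -0.2, 0.8, 0.1]  # hypothetical normalized scalar updates
    gains = [1.0, 0.4, 0.7, 1.3]     # hypothetical static channel gains
    for r in (1, 2, 4):
        print(r, empirical_mse(updates, gains, 1.0, 0.1, r),
              theoretical_mse(gains, 1.0, 0.1, r))
```

This toy model gives every retransmission its own full-power slot, so it ignores the extra energy and latency that retransmissions would cost. It therefore does not capture the resource budget under which the abstract's claims about improved ML performance are made. It only shows the basic noise-averaging effect.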
