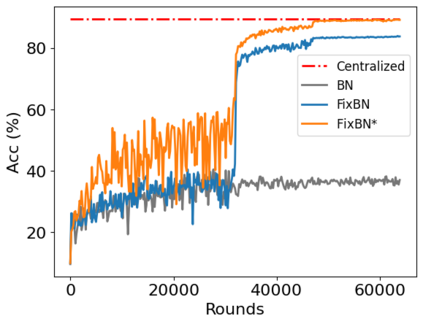

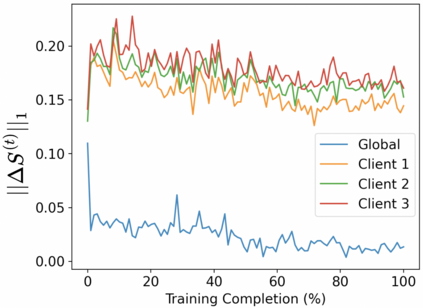

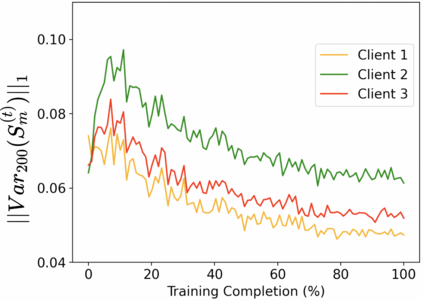

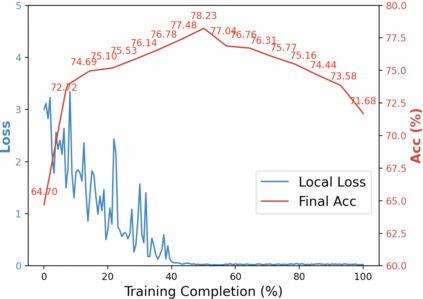

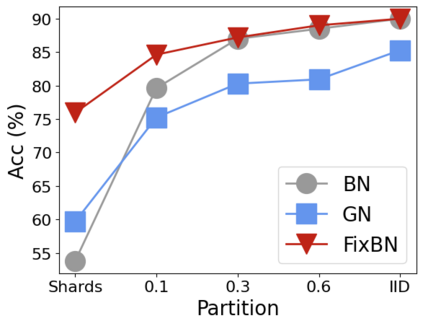

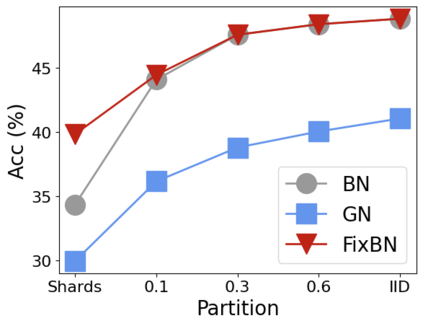

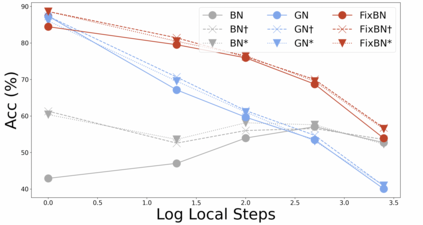

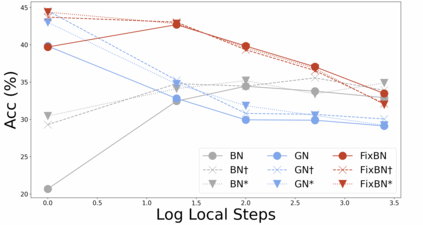

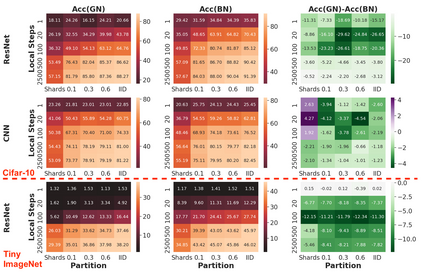

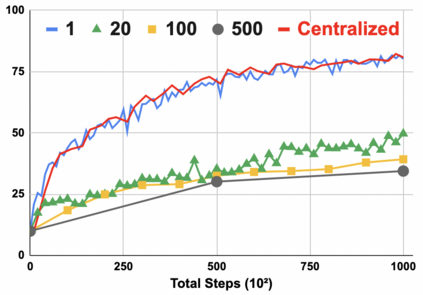

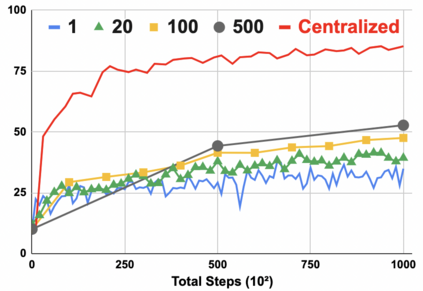

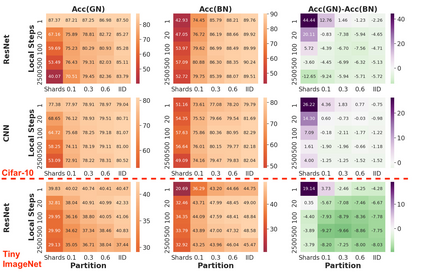

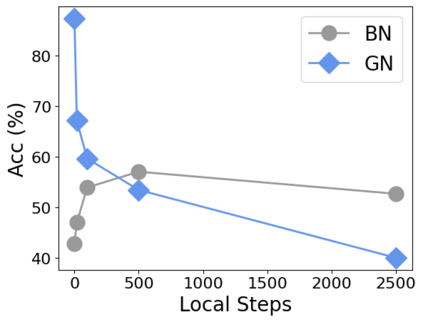

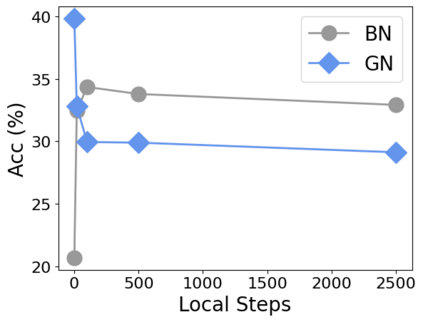

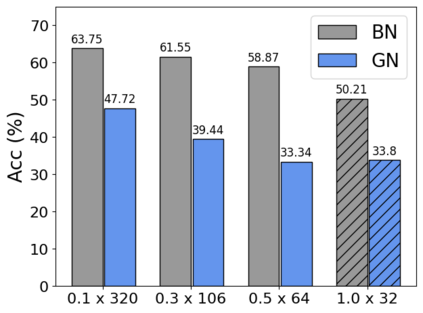

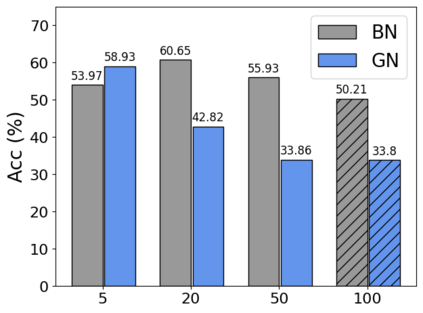

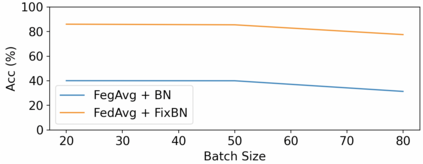

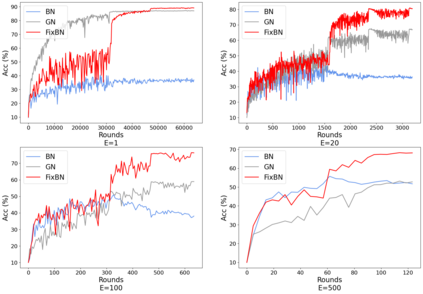

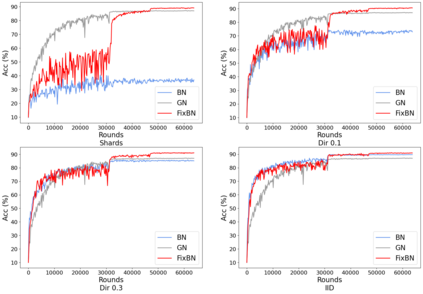

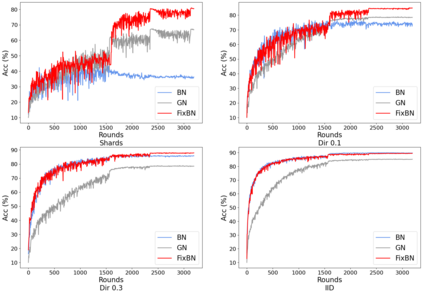

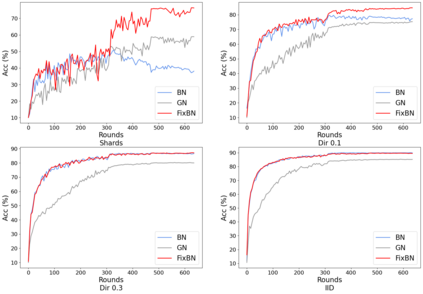

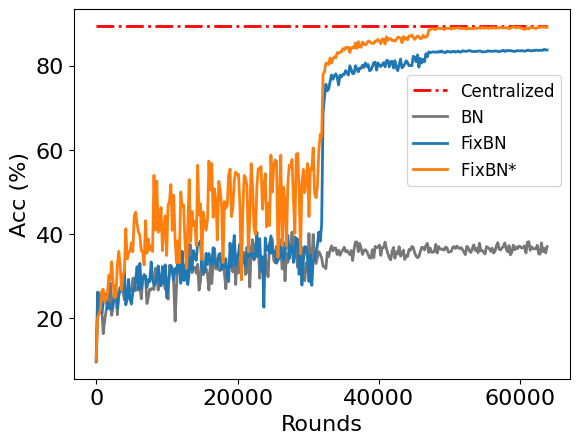

Batch Normalization (BN) is commonly used in modern deep learning to improve stability and speed up convergence in centralized training. In federated learning (FL) with non-IID decentralized data, previous works observed that training with BN could hinder performance due to the mismatch of the BN statistics between training and testing. Group Normalization (GN) is thus more often used in FL as an alternative to BN. In this paper, we identify a more fundamental issue of BN in FL that makes BN inferior even with high-frequency communication between clients and servers. We then propose a frustratingly simple treatment, which significantly improves BN and makes it outperform GN across a wide range of FL settings. Along with this study, we also reveal an unreasonable behavior of BN in FL: it is quite robust in the low-frequency communication regime, where FL is commonly believed to degrade drastically. We hope that our study can serve as a valuable reference for future practical usage and theoretical analysis in FL.
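
The train/test mismatch of BN statistics mentioned above can be illustrated with a toy sketch. This is not the paper's analysis or its proposed treatment. It only shows, under assumed conditions, how statistics computed on one client's non-IID data differ from pooled statistics. The two Gaussian client distributions and the helper names `batch_stats` and `normalize` are hypothetical and chosen purely for illustration.

```python
import random
import statistics


def batch_stats(values):
    """Return the (mean, variance) a BN layer would compute on one batch."""
    mean = statistics.fmean(values)
    var = statistics.pvariance(values, mu=mean)
    return mean, var


def normalize(values, mean, var, eps=1e-5):
    """BN-style normalization without the learnable scale and shift."""
    return [(v - mean) / (var + eps) ** 0.5 for v in values]


rng = random.Random(0)

# Assumed non-IID split: each client observes a differently shifted feature.
client_a = [rng.gauss(-2.0, 1.0) for _ in range(1000)]
client_b = [rng.gauss(3.0, 1.0) for _ in range(1000)]

mean_a, var_a = batch_stats(client_a)
mean_b, var_b = batch_stats(client_b)
mean_g, var_g = batch_stats(client_a + client_b)  # pooled over both clients

# Local training on client A normalizes with A's own statistics,
# whereas normalizing the same inputs with pooled statistics shifts them.
train_out = normalize(client_a, mean_a, var_a)
test_out = normalize(client_a, mean_g, var_g)

print(f"client A mean={mean_a:.2f}, client B mean={mean_b:.2f}, "
      f"pooled mean={mean_g:.2f}")
print(f"mean of A's normalized feature: local stats "
      f"{statistics.fmean(train_out):.2f}, pooled stats "
      f"{statistics.fmean(test_out):.2f}")
```

With local statistics the normalized feature is centered at zero, while with pooled statistics the same inputs are visibly off-center, so later layers see a different input distribution than the one they were trained on.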
