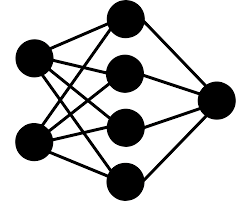

In adversarial machine learning, new defenses against attacks on deep learning systems are routinely broken soon after their release by more powerful attacks. In this context, forensic tools can offer a valuable complement to existing defenses by tracing a successful attack back to its root cause and offering a path toward mitigation that prevents similar attacks in the future. In this paper, we describe our efforts to develop a forensic traceback tool for poison attacks on deep neural networks. We propose a novel iterative clustering and pruning solution that trims "innocent" training samples until all that remains is the set of poisoned data responsible for the attack. Our method clusters training samples based on their impact on model parameters, then uses an efficient data unlearning method to prune innocent clusters. We empirically demonstrate the efficacy of our system on three types of dirty-label (backdoor) poison attacks and three types of clean-label poison attacks, across the domains of computer vision and malware classification. Our system achieves over 98.4% precision and 96.8% recall across all attacks. We also show that our system is robust against four anti-forensics measures specifically designed to attack it.
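
The iterative clustering-and-pruning loop described above can be illustrated with a toy sketch. It is not the authors' implementation, and everything in it is an assumption made for illustration: the per-sample "impact" vectors are hand-written 2-D points, the clustering is a small deterministic 2-means, and the unlearning step is replaced by a hypothetical oracle `toy_oracle` that reports whether the attack would still succeed after a cluster is unlearned. The helper `precision_recall` shows how a traced set could be scored against known poison.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points: List[Vector]) -> List[float]:
    """Coordinate-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(points) for col in zip(*points)]


def two_means(impact: Sequence[Vector], ids: List[int],
              iters: int = 20) -> Tuple[List[int], List[int]]:
    """Split `ids` into two clusters with a small deterministic 2-means."""
    # Seed with the first sample and the sample farthest from it.
    c0 = list(impact[ids[0]])
    c1 = list(max((impact[i] for i in ids), key=lambda p: sq_dist(p, c0)))
    a: List[int] = []
    b: List[int] = []
    for _ in range(iters):
        new_a = [i for i in ids
                 if sq_dist(impact[i], c0) <= sq_dist(impact[i], c1)]
        in_a = set(new_a)
        new_b = [i for i in ids if i not in in_a]
        if (new_a, new_b) == (a, b):
            break  # assignments stopped changing
        a, b = new_a, new_b
        if a:
            c0 = mean([impact[i] for i in a])
        if b:
            c1 = mean([impact[i] for i in b])
    return a, b


def trace_poison(impact: Sequence[Vector],
                 attack_survives_unlearning: Callable[[List[int]], bool],
                 max_rounds: int = 50) -> List[int]:
    """Repeatedly cluster the remaining samples and prune innocent clusters.

    A cluster counts as innocent when the attack still succeeds after that
    cluster is unlearned (here decided by the caller-supplied oracle).
    """
    candidates = list(range(len(impact)))
    for _ in range(max_rounds):
        if len(candidates) < 2:
            break
        a, b = two_means(impact, candidates)
        innocent = [c for c in (a, b) if c and attack_survives_unlearning(c)]
        if not innocent:
            break  # nothing more can be pruned
        pruned = {i for c in innocent for i in c}
        candidates = [i for i in candidates if i not in pruned]
    return candidates


def precision_recall(traced: Iterable[int],
                     poison: Iterable[int]) -> Tuple[float, float]:
    """Precision and recall of the traced set against the true poison set."""
    traced_set, poison_set = set(traced), set(poison)
    hits = len(traced_set & poison_set)
    precision = hits / len(traced_set) if traced_set else 0.0
    recall = hits / len(poison_set) if poison_set else 0.0
    return precision, recall


# Hypothetical toy data: two groups of innocent samples and one poison group.
IMPACT = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3),      # innocent group A
          (10.0, 0.0), (10.2, 0.2),                # innocent group B
          (10.0, 4.0), (10.3, 4.2), (10.1, 3.9)]   # poisoned samples
POISON = {5, 6, 7}


def toy_oracle(cluster: List[int]) -> bool:
    """Stand-in for unlearning: the attack survives iff no poison was removed."""
    return not (set(cluster) & POISON)


traced = trace_poison(IMPACT, toy_oracle)
print(traced, precision_recall(traced, POISON))
```

On this toy data the loop prunes innocent group A in the first round and innocent group B in the second, then stops once every remaining cluster contains poison. In a real system the oracle would be replaced by an actual unlearning procedure followed by a check of whether the misclassification still occurs, and the impact vectors would be derived from the model rather than written by hand.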
