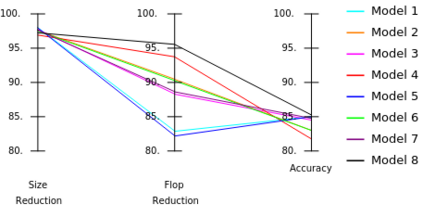

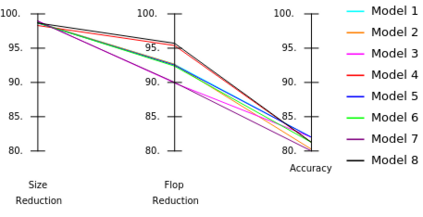

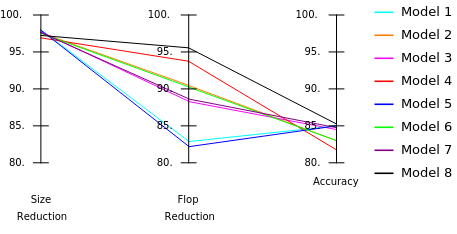

Significant effort is being invested in bringing the classification and recognition capabilities of desktop and cloud systems directly to edge devices. The main challenge for deep learning on the edge is handling extreme resource constraints (limited memory, low CPU speed and lack of GPU support). We present an edge solution for audio classification that achieves close to state-of-the-art performance on ESC-50, the same benchmark used to assess large, non-resource-constrained networks. Importantly, we do not specifically engineer the network for edge devices. Rather, we present a universal pipeline that automatically converts a large deep convolutional neural network (CNN), via compression and quantization, into a network suitable for resource-impoverished edge devices. We first introduce a new sound classification architecture, ACDNet, which achieves accuracies of 96.75% on ESC-10 and 87.05% on ESC-50, above the state of the art on both. We then compress ACDNet using a novel network-independent approach to obtain an extremely small model. Despite a 97.22% reduction in size and a 97.28% reduction in FLOPs, the compressed network still achieves 82.90% accuracy on ESC-50, staying close to the state of the art. Using 8-bit quantization, we deploy ACDNet on standard microcontroller units (MCUs). To the best of our knowledge, this is the first time that a deep network for sound classification of 50 classes has been successfully deployed on an edge device. While this should be of interest in its own right, we believe it is particularly important that this has been achieved with a universal conversion pipeline rather than by hand-crafting a network for minimal size.
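The abstract does not spell out which 8-bit quantization scheme is used for MCU deployment. As a hedged illustration only, the sketch below shows generic per-tensor affine (asymmetric) post-training quantization of float weights to signed 8-bit integers, a mapping commonly used by microcontroller inference runtimes. The function names `quantize_int8` and `dequantize_int8` are hypothetical, the input is assumed to be a non-empty list of floats, and this is not presented as ACDNet's actual pipeline.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Hypothetical helper: affine per-tensor quantization of floats to int8.

    Assumes `weights` is non-empty. Returns (quantized values, scale, zero_point)
    such that w is approximately (q - zero_point) * scale.
    """
    qmin, qmax = -128, 127
    # Widen the range to include 0.0 so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # Spread the float range over the 256 available integer levels;
    # fall back to 1.0 when every weight is zero (range of width 0).
    scale = (w_max - w_min) / (qmax - qmin) or 1.0
    # Integer offset that maps w_min onto (approximately) qmin.
    zero_point = int(round(qmin - w_min / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    # Round to the nearest grid point, then clip into the int8 range.
    q = [max(qmin, min(qmax, int(round(w / scale)) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 values back to approximate floats."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    weights = [-0.42, 0.0, 0.17, 0.9]
    q, scale, zp = quantize_int8(weights)
    restored = dequantize_int8(q, scale, zp)
    print(q, [round(x, 3) for x in restored])
```

Storing one byte per weight instead of four (float32) cuts weight storage by a factor of four, and integer-only arithmetic suits MCUs without floating-point acceleration; the price is a per-weight rounding error of at most half of `scale`.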
