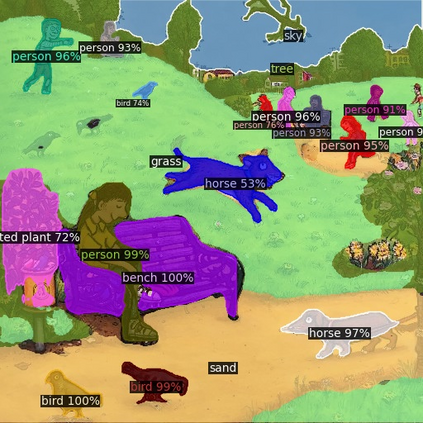

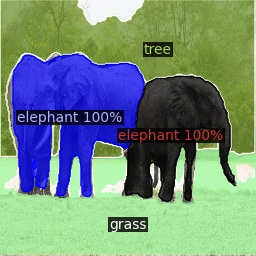

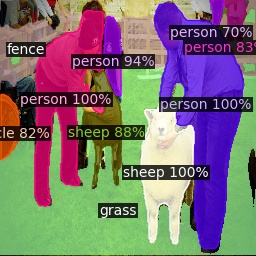

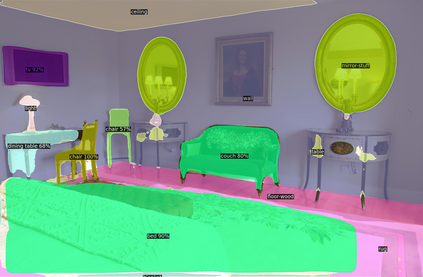

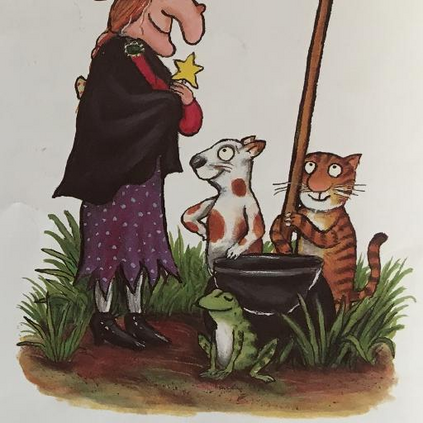

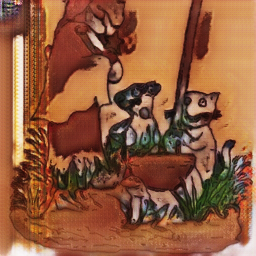

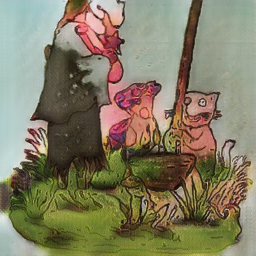

We introduce a new method for generating color images from sketches or edge maps. Current methods either require some form of additional user guidance or are limited to the "paired" translation approach. We argue that segmentation information could provide valuable guidance for sketch colorization. To this end, we propose to leverage semantic image segmentation, as provided by a general-purpose panoptic segmentation network, to create an additional adversarial loss function. Our loss function can be integrated into any baseline GAN model. Our method is not limited to datasets that contain segmentation labels, and it can be trained for "unpaired" translation tasks. We show the effectiveness of our method on four different datasets spanning scene-level indoor, outdoor, and children's book illustration images, using qualitative, quantitative, and user study analyses. Our model improves on its baseline by up to 35 points on the FID metric. Our code and pretrained models can be found at https://github.com/giddyyupp/AdvSegLoss.
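The abstract does not give the exact form of the segmentation-based adversarial loss. As a rough illustration only, the NumPy sketch below shows one way such a term could be added to an existing generator objective. It is not the authors' implementation; see the linked repository for that.

The sketch makes several assumptions:

- A frozen segmentation network maps both real and generated images to per-pixel class probabilities. Here it is replaced by a hypothetical `segment` function, a fixed random 1x1 projection followed by a softmax.
- A separate discriminator scores those segmentation maps. Here this is a hypothetical linear `seg_discriminator`.
- The adversarial terms use least-squares GAN losses. This is an assumed choice, not one stated in the abstract.

`NUM_CLASSES`, `SEG_WEIGHTS`, and `lam` are also hypothetical.

```python
import numpy as np

NUM_CLASSES = 4  # hypothetical number of segmentation classes
rng = np.random.default_rng(0)

# Hypothetical stand-in for a frozen, pretrained segmentation network:
# a fixed per-pixel projection from RGB to class logits (never trained here).
SEG_WEIGHTS = rng.normal(size=(3, NUM_CLASSES))


def segment(images):
    """Map images (N, H, W, 3) to per-pixel class probabilities (N, H, W, C)."""
    logits = images @ SEG_WEIGHTS
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=-1, keepdims=True)


def seg_discriminator(seg_maps, w):
    """Hypothetical discriminator on segmentation maps: one score per image."""
    return seg_maps.mean(axis=(1, 2)) @ w  # (N, C) @ (C,) -> (N,)


def lsgan_d_loss(real_scores, fake_scores):
    """Least-squares GAN loss for the discriminator (assumed loss form)."""
    return 0.5 * (np.mean((real_scores - 1.0) ** 2) + np.mean(fake_scores ** 2))


def lsgan_g_loss(fake_scores):
    """Least-squares GAN loss for the generator (assumed loss form)."""
    return 0.5 * np.mean((fake_scores - 1.0) ** 2)


def total_generator_loss(baseline_g_loss, fake_images, w, lam=1.0):
    """Baseline generator loss plus a weighted segmentation adversarial term."""
    fake_scores = seg_discriminator(segment(fake_images), w)
    return baseline_g_loss + lam * lsgan_g_loss(fake_scores)


# Usage with random tensors standing in for real target-domain images
# and generator outputs.
real = rng.random((2, 8, 8, 3))
fake = rng.random((2, 8, 8, 3))
w = rng.normal(size=NUM_CLASSES)  # hypothetical discriminator weights

d_loss = lsgan_d_loss(
    seg_discriminator(segment(real), w),
    seg_discriminator(segment(fake), w),
)
g_loss = total_generator_loss(baseline_g_loss=0.7, fake_images=fake, w=w, lam=1.0)
```

Because the segmentation maps come from a pretrained network rather than from ground-truth annotations, this kind of term does not require segmentation labels in the training data. That property is consistent with the abstract's claim. It also leaves the baseline generator loss untouched, so `lam = 0` recovers the baseline objective.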
