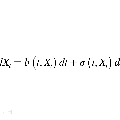

Score-based diffusion models learn to reverse a stochastic differential equation that maps data to noise. However, for complex tasks, numerical error can compound and result in highly unnatural samples. Previous work mitigates this drift with thresholding, which projects to the natural data domain (such as pixel space for images) after each diffusion step, but this leads to a mismatch between the training and generative processes. To incorporate data constraints in a principled manner, we present Reflected Diffusion Models, which instead reverse a reflected stochastic differential equation evolving on the support of the data. Our approach learns the perturbed score function through a generalized score matching loss and extends key components of standard diffusion models, including diffusion guidance, likelihood-based training, and ODE sampling. We also bridge the theoretical gap with thresholding: such schemes are just discretizations of reflected SDEs. On standard image benchmarks, our method is competitive with or surpasses the state of the art and, for classifier-free guidance, our approach enables fast exact sampling with ODEs and produces more faithful samples under high guidance weight.
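To make the link between thresholding and reflected SDEs concrete, the following is a minimal sketch, not the authors' implementation. It assumes the data domain is the unit hypercube [0, 1]^d and uses a plain Euler-Maruyama step; the names `project`, `reflect`, and `simulate`, and the zero placeholder drift, are hypothetical choices made for illustration (a real sampler would use a learned score in the drift). Each step takes an unconstrained update and then maps the result back into the domain, either by clipping (thresholding) or by mirroring across the boundary.

```python
import numpy as np


def project(x, lo=0.0, hi=1.0):
    """Thresholding: clip each coordinate back into [lo, hi]."""
    return np.clip(x, lo, hi)


def reflect(x, lo=0.0, hi=1.0):
    """Mirror each coordinate across the boundary until it lies in [lo, hi]."""
    width = hi - lo
    # Reflection is periodic with period 2 * width, so fold into one period first.
    y = np.mod(x - lo, 2.0 * width)
    return lo + np.where(y > width, 2.0 * width - y, y)


def simulate(x0, drift, sigma, n_steps, dt, constrain, rng):
    """Euler-Maruyama steps, each followed by a map back into the domain."""
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        noise = rng.standard_normal(x.shape)
        x = x + drift(x) * dt + sigma * np.sqrt(dt) * noise
        x = constrain(x)  # keep the iterate on the support of the data
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x0 = rng.uniform(size=(4, 3))      # 4 samples in [0, 1]^3
    zero_drift = lambda x: np.zeros_like(x)  # placeholder, not a learned score
    for name, fn in [("project", project), ("reflect", reflect)]:
        out = simulate(x0, zero_drift, 0.5, 100, 0.01, fn, rng)
        print(name, float(out.min()), float(out.max()))
```

Both variants keep every iterate inside the domain. They differ in how they treat mass that lands outside: clipping piles it onto the boundary, while mirroring sends it back into the interior.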
