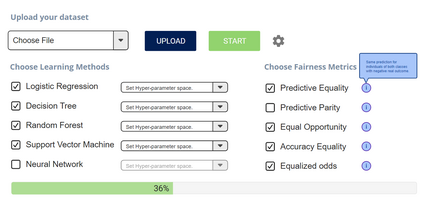

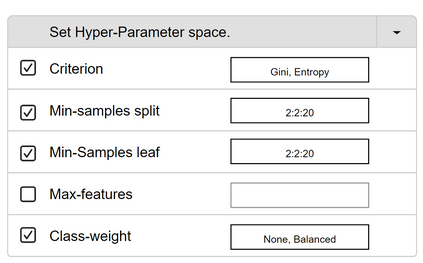

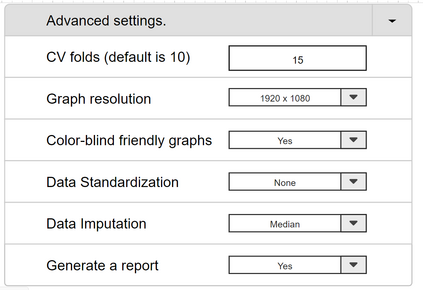

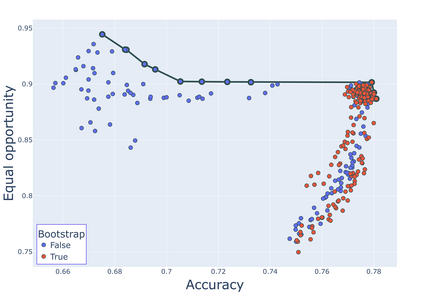

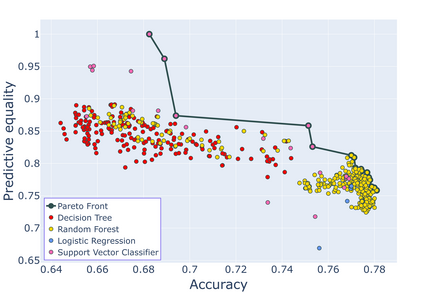

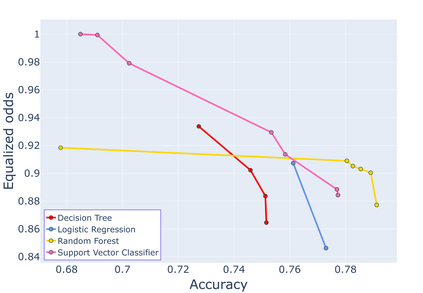

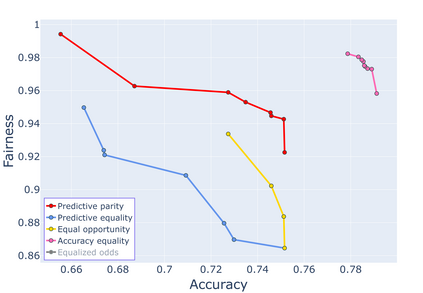

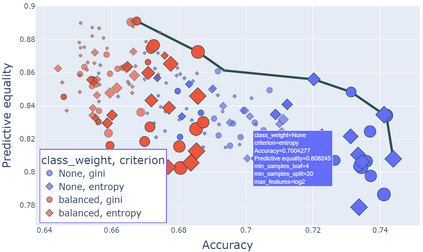

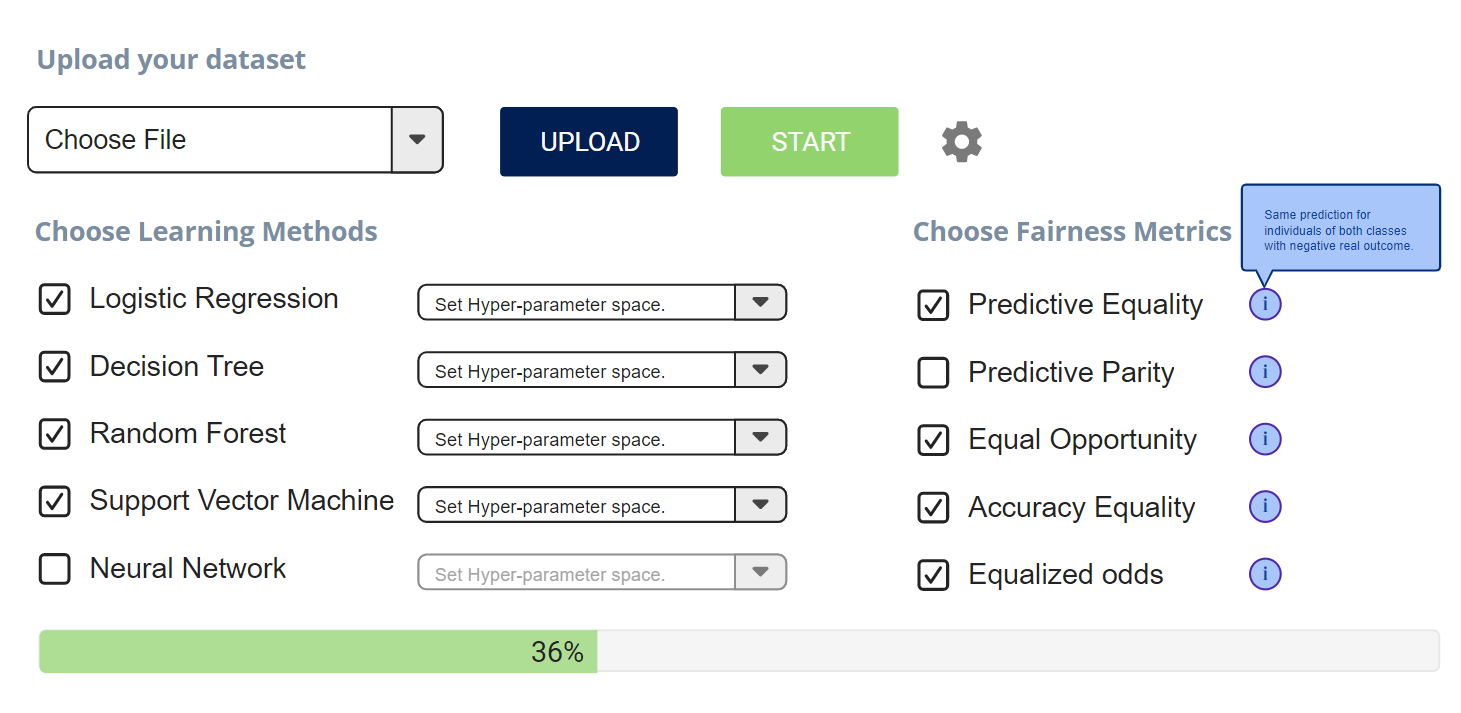

Despite the potential benefits of machine learning (ML) in high-risk decision-making domains, deploying ML remains inaccessible to many practitioners and carries a risk of discrimination. To establish trust in and acceptance of ML in such domains, it is crucial both to democratize ML tools and to take fairness into account. In this paper, we introduce FairPilot, an interactive system designed to promote the responsible development of ML models by letting users explore combinations of various models, different hyperparameters, and a wide range of fairness definitions. We emphasize the challenge of selecting the "best" ML model and demonstrate how FairPilot lets users select a set of evaluation criteria and then displays the Pareto frontier of models and hyperparameters as an interactive map. FairPilot is the first system to combine these features, offering users a unique opportunity to choose their model responsibly.
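To make the idea of a Pareto frontier over user-selected criteria concrete, here is a minimal sketch. It is not FairPilot's implementation. It assumes each trained model/hyperparameter configuration has already been scored on a few metrics, and that the user marks each chosen criterion as either maximized or minimized. The configuration names, the metric name `dp_diff` (standing in for a demographic parity difference), and all scores are hypothetical and serve only as illustration. A configuration stays on the frontier if no other configuration is at least as good on every selected criterion and strictly better on at least one.

```python
# Minimal sketch: Pareto frontier of candidate models under user-chosen criteria.
# All names and scores below are hypothetical, for illustration only.

def dominates(a: dict[str, float], b: dict[str, float], criteria: dict[str, str]) -> bool:
    """True if `a` is no worse than `b` on every criterion and strictly better on one."""
    strictly_better = False
    for metric, direction in criteria.items():
        if direction not in ("max", "min"):
            raise ValueError(f"unknown direction for {metric!r}: {direction!r}")
        # Flip the sign of "min" metrics so that larger is always better.
        sign = 1.0 if direction == "max" else -1.0
        x, y = sign * a[metric], sign * b[metric]
        if x < y:
            return False  # `a` is worse on this criterion, so it cannot dominate `b`
        if x > y:
            strictly_better = True
    return strictly_better


def pareto_frontier(candidates: dict[str, dict[str, float]], criteria: dict[str, str]) -> list[str]:
    """Return the names of all candidates not dominated by any other (O(n^2) scan)."""
    names = list(candidates)
    return [
        n for n in names
        if not any(dominates(candidates[m], candidates[n], criteria) for m in names if m != n)
    ]


# Hypothetical scores for four model/hyperparameter configurations.
candidates = {
    "logreg_C=1.0": {"accuracy": 0.84, "dp_diff": 0.12},
    "logreg_C=0.1": {"accuracy": 0.82, "dp_diff": 0.05},
    "tree_depth=8": {"accuracy": 0.86, "dp_diff": 0.20},
    "tree_depth=3": {"accuracy": 0.80, "dp_diff": 0.09},
}
# The user maximizes accuracy and minimizes the fairness gap.
criteria = {"accuracy": "max", "dp_diff": "min"}

frontier = pareto_frontier(candidates, criteria)
print(frontier)  # ['logreg_C=1.0', 'logreg_C=0.1', 'tree_depth=8']
```

In this example, `tree_depth=3` drops out because `logreg_C=0.1` is both more accurate and has a smaller fairness gap. The three remaining configurations represent genuine trade-offs between the two criteria. An interactive view such as the map the abstract describes would let the user choose among these trade-offs rather than select a single "best" model.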
