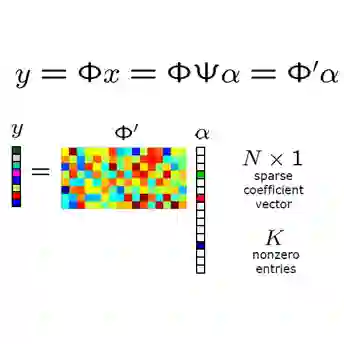

In this paper, a new communication-efficient federated learning (FL) framework is proposed, inspired by vector quantized compressed sensing. The basic strategy of the proposed framework is to compress the local model update at each device by applying dimensionality reduction followed by vector quantization. The global model update is then reconstructed at a parameter server by applying a sparse signal recovery algorithm to the aggregate of the compressed local model updates. By harnessing the benefits of both dimensionality reduction and vector quantization, the proposed framework effectively reduces the communication overhead of local update transmissions. Both the design of the vector quantizer and the key compression parameters are optimized to minimize the reconstruction error of the global model update under a wireless link capacity constraint. Taking this reconstruction error into account, the convergence rate of the proposed framework is also analyzed for a non-convex loss function. Simulation results on the MNIST and CIFAR-10 datasets demonstrate that the proposed framework improves classification accuracy by more than 2.5% over state-of-the-art FL frameworks when the communication overhead of the local model update transmission is less than 0.1 bit per local model entry.
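The compress-aggregate-recover pipeline described in the abstract can be illustrated with a toy NumPy sketch. Every concrete choice below is our own assumption and not the paper's optimized design. The measurement matrix is i.i.d. Gaussian and regenerated from a shared seed. The quantizer is a hypothetical shape-gain VQ on 2-D blocks, using evenly spaced unit codewords and a 4-bit uniform gain quantizer. Orthogonal matching pursuit (OMP) stands in for the sparse recovery algorithm. The local updates are synthetic and share one sparse support. All function names (`projection_matrix`, `shape_codebook`, `device_encode`, `server_decode`, `omp`, `demo`) are hypothetical.

```python
import numpy as np

def projection_matrix(m, n, seed=0):
    # Assumption: i.i.d. Gaussian measurement matrix rebuilt from a seed that
    # every device and the server share, so A itself is never transmitted.
    rng = np.random.default_rng(seed)
    return rng.standard_normal((m, n)) / np.sqrt(m)

def shape_codebook(size):
    # Hypothetical 2-D shape codebook: unit vectors at evenly spaced angles in
    # [0, pi); the sign is carried by the gain, so every direction is covered.
    angles = np.arange(size) * np.pi / size
    return np.stack([np.cos(angles), np.sin(angles)], axis=1)

def device_encode(g, A, codebook, gain_bits=4):
    """Device side: dimensionality reduction y = A g, then shape-gain VQ of y."""
    y = A @ g
    blocks = y.reshape(-1, codebook.shape[1])
    scores = blocks @ codebook.T                  # projection onto each codeword
    idx = np.argmax(np.abs(scores), axis=1)       # best shape index per block
    gains = scores[np.arange(len(blocks)), idx]   # signed gain per block
    half = 2 ** (gain_bits - 1) - 1               # symmetric levels -half..half
    g_max = np.max(np.abs(gains))
    step = g_max / half if g_max > 0 else 1.0
    gain_idx = np.round(gains / step).astype(int)
    return idx, gain_idx, step                    # integers plus one float

def server_decode(payload, codebook):
    """Server side: rebuild the reduced-dimension vector from one payload."""
    idx, gain_idx, step = payload
    return ((gain_idx * step)[:, None] * codebook[idx]).reshape(-1)

def omp(A, y, sparsity):
    """Orthogonal matching pursuit: greedy recovery of a sparse x from y ~ A x."""
    support, residual = [], y.copy()
    coef = np.zeros(0)
    for _ in range(sparsity):
        j = int(np.argmax(np.abs(A.T @ residual)))  # most correlated column
        if j not in support:
            support.append(j)
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x = np.zeros(A.shape[1])
    x[support] = coef
    return x

def demo(n=2048, m=256, sparsity=5, devices=10, codebook_size=16,
         gain_bits=4, seed=0):
    rng = np.random.default_rng(seed)
    # Synthetic local updates sharing one sparse support (an assumption).
    support = rng.choice(n, size=sparsity, replace=False)
    base = rng.choice([-1.0, 1.0], size=sparsity) * rng.uniform(1.0, 2.0, size=sparsity)
    locals_ = []
    for _ in range(devices):
        g = np.zeros(n)
        g[support] = base + 0.1 * rng.standard_normal(sparsity)
        locals_.append(g)
    A, C = projection_matrix(m, n), shape_codebook(codebook_size)
    # Decoding is linear in each payload, so the server averages the
    # reconstructed compressed vectors and runs sparse recovery once.
    y_avg = sum(server_decode(device_encode(g, A, C, gain_bits), C)
                for g in locals_) / devices
    g_hat = omp(A, y_avg, sparsity)
    g_true = np.mean(locals_, axis=0)
    rel_err = np.linalg.norm(g_hat - g_true) / np.linalg.norm(g_true)
    # Bits per device: shape index + gain index per block, plus the step
    # counted as one 32-bit float (assumption).
    bits = (m // C.shape[1]) * (int(np.log2(codebook_size)) + gain_bits) + 32
    return rel_err, bits / n
```

Calling `demo()` returns the relative reconstruction error of the averaged update and the per-device payload in bits per model entry. With the defaults, each device sends 1056 bits for 2048 entries, or about 0.52 bit per entry. These toy settings do not target the sub-0.1-bit regime reported in the abstract, and they do not reproduce the paper's optimization of the quantizer and compression parameters.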
