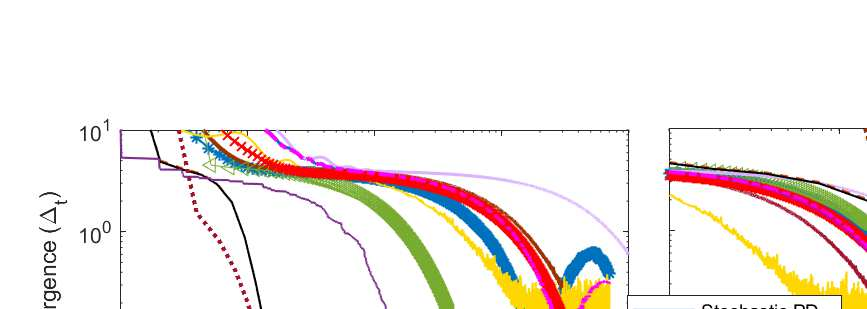

We consider the decentralized convex optimization problem, where multiple agents must cooperatively minimize a cumulative objective function, with each local function expressible as an empirical average of data-dependent losses. State-of-the-art approaches for decentralized optimization rely on gradient tracking, where consensus is enforced via a doubly stochastic mixing matrix. Construction of such mixing matrices is not straightforward and requires coordination even prior to the start of the optimization algorithm. This paper puts forth a primal-dual framework for decentralized stochastic optimization that obviates the need for such doubly stochastic matrices. Instead, dual variables are maintained to track the disagreement between neighbors. The proposed framework is flexible and is used to develop decentralized variants of SAGA, L-SVRG, SVRG++, and SEGA algorithms. Using a unified proof, we establish that the oracle complexity of these decentralized variants is $O(1/\epsilon)$, matching the complexity bounds obtained for the centralized variants. Additionally, we also present a decentralized primal-dual accelerated SVRG algorithm achieving $O(1/\sqrt{\epsilon})$ oracle complexity, again matching the bound for the centralized accelerated SVRG. Numerical tests on the algorithms establish their superior performance as compared to the variance-reduced gradient tracking algorithms.

翻译:我们考虑了分散的 convex 优化问题, 即多个代理商必须合作将累积性客观功能最小化, 每个本地功能都显示为基于数据的损失的经验平均数。 最先进的分散化优化方法依赖于梯度跟踪, 通过二重混合矩阵执行共识。 构建这种混合矩阵并不简单, 甚至在开始优化算法之前也需要协调。 本文为分散化的随机优化提供了一个原始双向框架, 从而避免了对此类双重随机化矩阵的需求。 相反, 保留了双重变量以跟踪邻居之间的分歧。 拟议的框架是灵活的, 用于开发SAGA、 L- SVRG、 SVRG+++ 和 SEGA 算法的分散化变体。 我们使用统一的证据, 确定这些分散化变体的复杂性是$( 1/\ epsilon), 与集中化变体获得的复杂度相符。 此外, 我们还展示了一种分散的原始组合加速型 SVRG 算法, 将其快速化的压值与高压级级的变压相匹配。