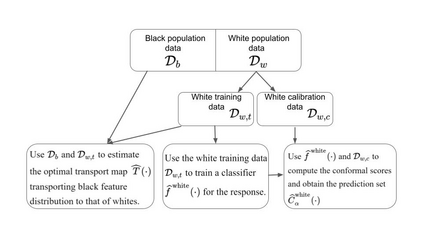

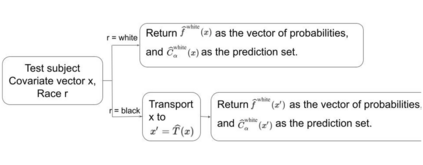

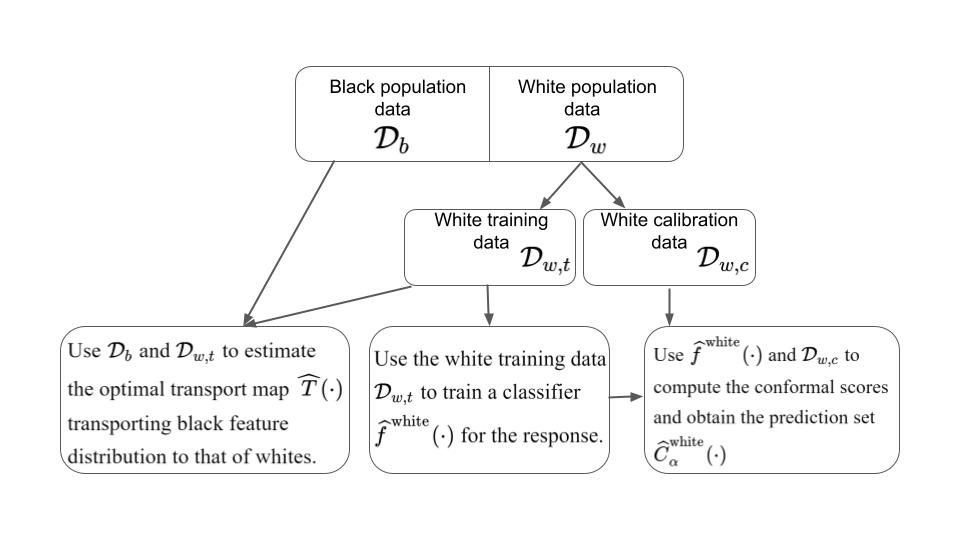

In the United States and elsewhere, risk assessment algorithms are being used to help inform criminal justice decision-makers. A common intent is to forecast an offender's "future dangerousness." Such algorithms have been correctly criticized for potential unfairness, and there is an active cottage industry trying to make repairs. In this paper, we use counterfactual reasoning to consider the prospects for improved fairness when members of a less privileged group are treated by a risk algorithm as if they were members of a more privileged group. We combine a machine learning classifier trained in a novel manner with an optimal transport adjustment for the relevant joint probability distributions, which together provide a constructive response to claims of bias-in-bias-out. A key distinction is between fairness claims that are empirically testable and fairness claims that are not. We then use confusion tables and conformal prediction sets to evaluate achieved fairness for projected risk. Our data are a random sample of 300,000 offenders from a large metropolitan area in the United States, observed at their arraignments, when decisions to release or detain are made. We show that substantial improvement in fairness can be achieved consistent with a Pareto improvement for protected groups.
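To make the optimal transport idea concrete, the following is a minimal sketch and not the paper's procedure. The abstract describes an adjustment of joint probability distributions and does not specify the algorithm; this sketch assumes a single numeric predictor, for which the optimal transport map under a convex cost reduces to quantile matching. The function name `quantile_transport`, the synthetic gamma-distributed data, and the group labels are all hypothetical.

```python
import numpy as np


def quantile_transport(source, target, values):
    """Map `values` from the source distribution onto the target distribution.

    In one dimension, the optimal transport map for a convex cost is
    T(x) = F_target^{-1}(F_source(x)): each value keeps its rank within
    the source sample and takes the target value at that same rank.
    """
    source = np.sort(np.asarray(source, dtype=float))
    target = np.asarray(target, dtype=float)
    # Empirical CDF of the source sample evaluated at each value, in [0, 1].
    ranks = np.searchsorted(source, values, side="right") / source.size
    # Empirical quantile function of the target sample at those ranks.
    return np.quantile(target, np.clip(ranks, 0.0, 1.0))


rng = np.random.default_rng(0)
# Synthetic, hypothetical predictor values for two groups (not the paper's data).
group_a = rng.gamma(shape=2.0, scale=2.0, size=5000)  # less privileged group
group_b = rng.gamma(shape=2.0, scale=1.0, size=5000)  # more privileged group

# Counterfactual predictor values: group A members re-expressed on group B's scale.
transported = quantile_transport(group_a, group_b, group_a)
```

Under these assumptions, the transported values preserve the within-group ordering of group A while following group B's empirical distribution, so a classifier trained on group B could score them as if they came from group B. A multivariate version would need a general optimal transport solver, which this sketch does not attempt.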
