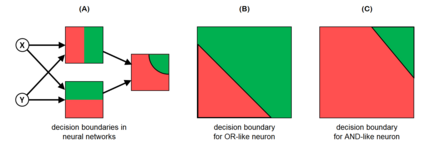

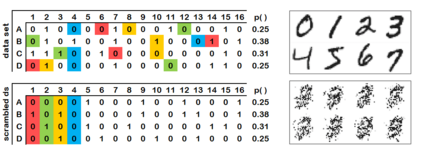

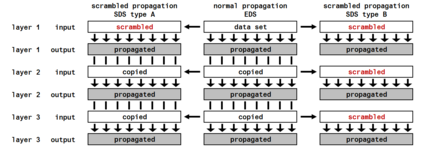

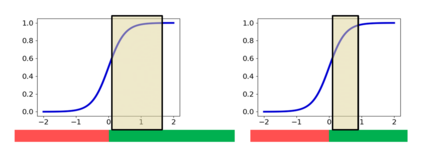

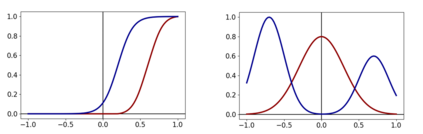

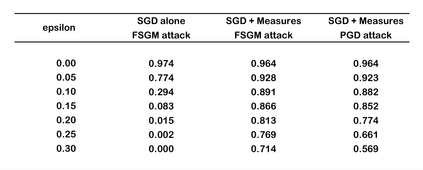

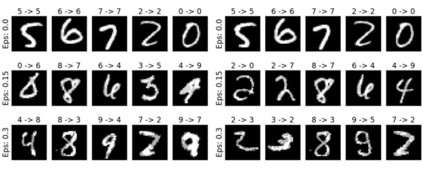

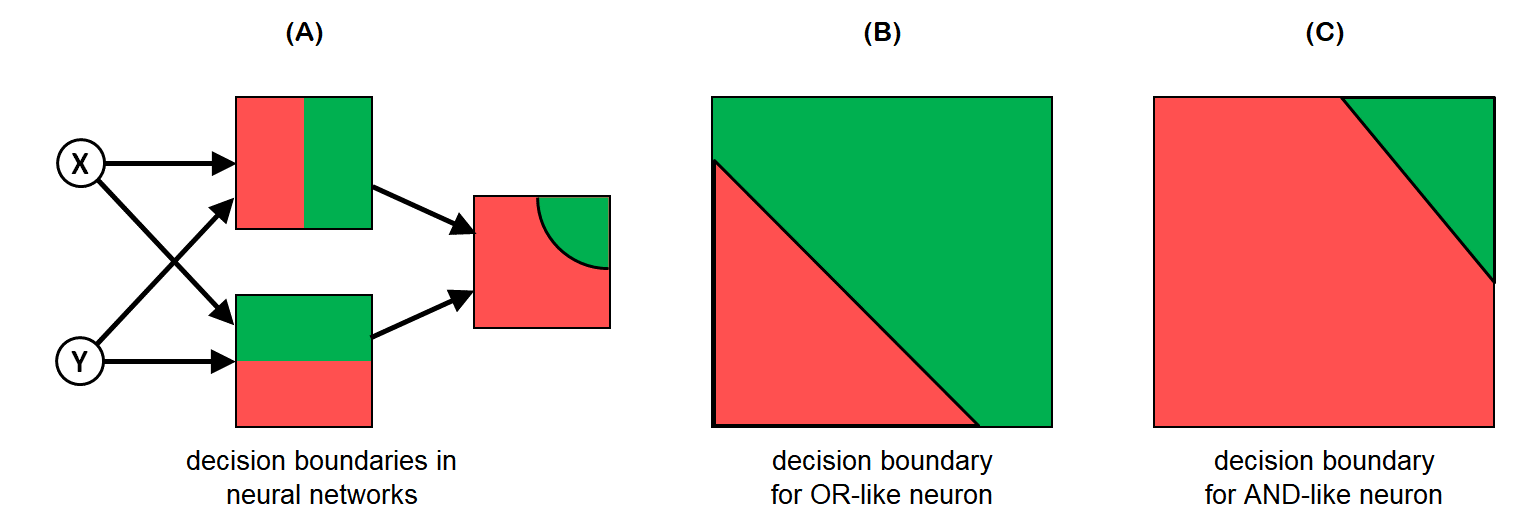

Since their discovery in 2013, adversarial examples have attracted growing attention from the machine learning community. A deeper understanding of the problem could clarify how information is processed and encoded in neural networks and, more generally, could help address the issue of interpretability in machine learning. Our approach to increasing adversarial resilience starts from the observation that artificial neurons can be divided into two broad categories: AND-like neurons and OR-like neurons. Intuitively, an AND-like neuron is activated by relatively few combinations of input values, whereas an OR-like neuron is activated by relatively many. Our hypothesis is that a sufficiently high number of OR-like neurons in a network could lead to classification "brittleness" and increase the network's susceptibility to adversarial attacks. After constructing an operational definition of a neuron's AND-like behaviour, we introduce several measures to increase the proportion of AND-like neurons in the network: L1-norm weight normalisation; application of an input filter; and a comparison between the distribution of a neuron's output when the network is fed the actual data set and the distribution obtained when it is fed a randomised version of that data set, called the "scrambled data set". Tests performed on the MNIST data set suggest that the proposed measures could be an interesting direction to explore.
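To make the AND-like/OR-like intuition concrete, the following minimal sketch counts how many binary input combinations activate a single threshold neuron. It is an illustration only and not the operational definition used in the paper: the binary inputs, the hard threshold at zero, the bias values, and the helper names `l1_normalise` and `activation_fraction` are all assumptions introduced here.

```python
from itertools import product


def l1_normalise(weights):
    """Scale a weight vector so that its absolute values sum to 1."""
    total = sum(abs(w) for w in weights)
    if total == 0:
        raise ValueError("cannot L1-normalise an all-zero weight vector")
    return [w / total for w in weights]


def activation_fraction(weights, bias):
    """Fraction of binary input combinations for which w.x + bias > 0.

    Assumption: inputs are in {0, 1} and the neuron 'fires' when its
    pre-activation is strictly positive.
    """
    n = len(weights)
    active = sum(
        1
        for x in product((0, 1), repeat=n)
        if sum(w * xi for w, xi in zip(weights, x)) + bias > 0
    )
    return active / 2 ** n


# Hypothetical three-input neuron with L1-normalised weights (1/3 each).
weights = l1_normalise([1.0, 1.0, 1.0])

# A bias close to -1 means every input must be on: AND-like behaviour.
and_like = activation_fraction(weights, bias=-0.9)

# A bias close to 0 means any single input suffices: OR-like behaviour.
or_like = activation_fraction(weights, bias=-0.1)

print(and_like, or_like)  # prints 0.125 0.875
```

With the weights constrained to unit L1 norm, the bias alone determines where the neuron sits between the two extremes in this toy setting: one of the eight input combinations activates the AND-like neuron, while seven of eight activate the OR-like one.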
