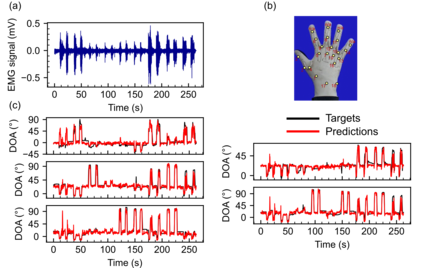

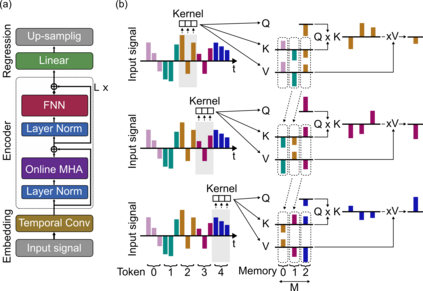

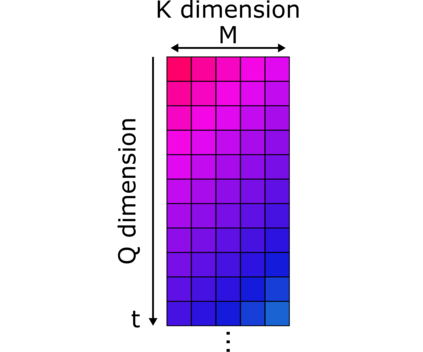

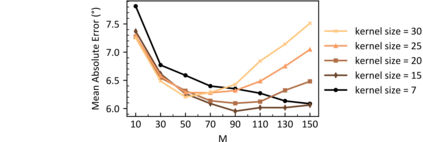

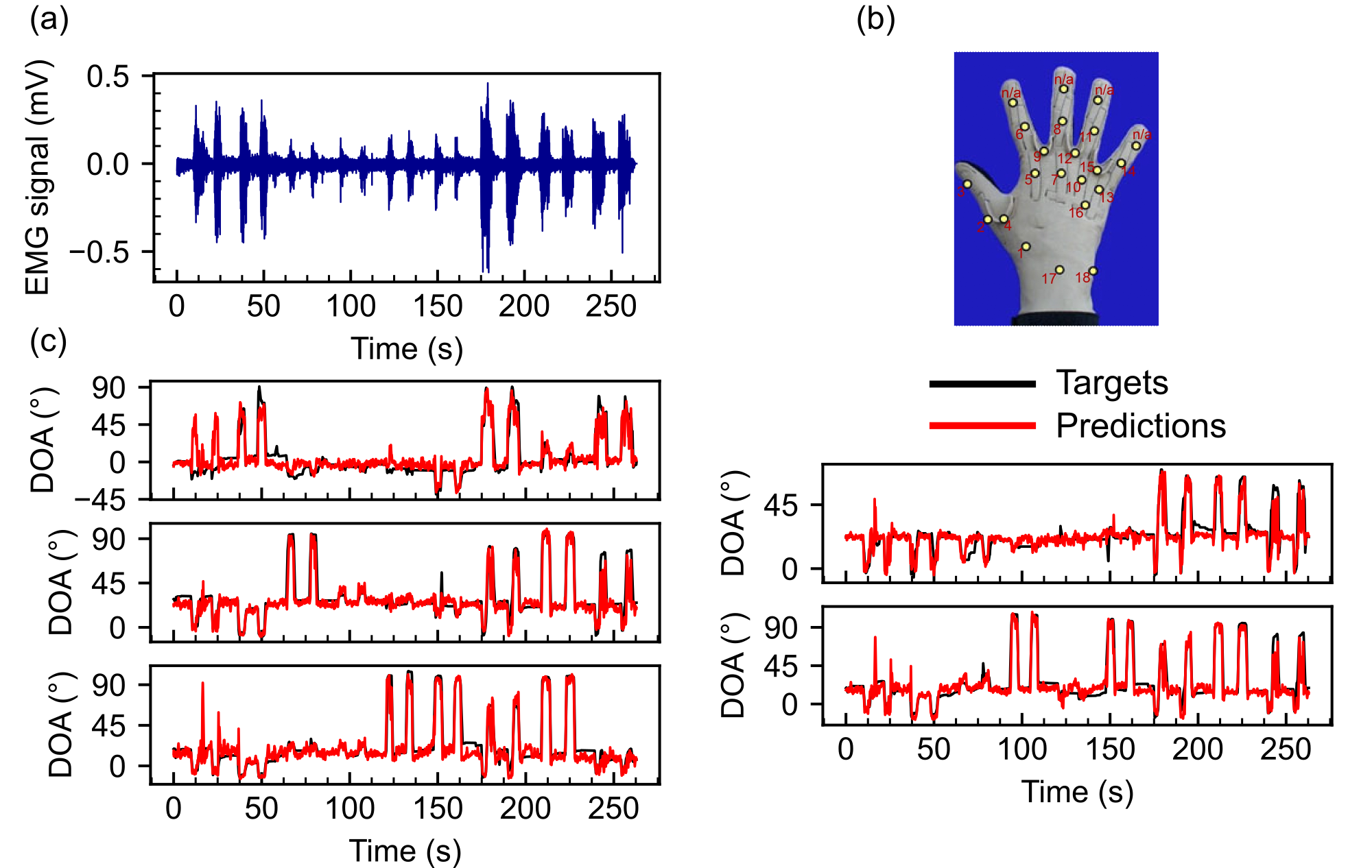

Transformers are state-of-the-art networks for most sequence processing tasks. However, the self-attention mechanism commonly used in Transformers requires large time windows at each computation step. This makes Transformers less suitable than Recurrent Neural Networks (RNNs) for online signal processing. In this paper, we replace self-attention with a sliding window attention mechanism. We show that this mechanism is more efficient for continuous signals with finite-range dependencies between input and target. We also show that it can process sequences element by element, which makes it compatible with online processing. We test our model on a finger position regression dataset (NinaproDB8) of surface electromyographic (sEMG) signals, which are measured on the skin of the forearm and reflect muscle activity. Our approach sets a new state of the art in accuracy on this dataset while requiring only very short time windows of 3.5 ms at each inference step. Moreover, we increase the sparsity of the network using Leaky Integrate-and-Fire (LIF) units. LIF is a bio-inspired neuron model that activates sparsely in time, only when its membrane potential crosses a threshold. We thus reduce the number of synaptic operations by up to a factor of 5.3 without loss of accuracy. Our results hold great promise for accurate and fast online processing of sEMG signals for smooth prosthetic hand control. They are also a step towards the co-integration of Transformers and Spiking Neural Networks (SNNs) for energy-efficient temporal signal processing.
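
The claim that sliding window attention can process a sequence element by element can be illustrated with a minimal sketch. It assumes single-head attention with identity projections (queries, keys and values are the raw inputs) and a window of `window` past elements. The class and function names (`StreamingSlidingWindowAttention`, `windowed_attention_batch`) are hypothetical, and this is not the authors' implementation. The streaming version keeps only the last `window` keys and values in a bounded buffer. Its outputs match a batch computation that applies the same banded causal mask to the whole sequence.

```python
import math
from collections import deque


def softmax(scores):
    # Subtract the max score for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class StreamingSlidingWindowAttention:
    """Causal attention restricted to the last `window` elements, computed one step at a time."""

    def __init__(self, window):
        self.window = window
        # Bounded buffers: appending beyond maxlen drops the oldest entry.
        self.keys = deque(maxlen=window)
        self.values = deque(maxlen=window)

    def step(self, q, k, v):
        # Store the current key/value, then attend over the buffered window only.
        self.keys.append(k)
        self.values.append(v)
        scale = 1.0 / math.sqrt(len(q))
        weights = softmax([dot(q, key) * scale for key in self.keys])
        return [sum(w * val[i] for w, val in zip(weights, self.values))
                for i in range(len(v))]


def windowed_attention_batch(Q, K, V, window):
    """Reference: the same banded causal attention applied to a whole sequence at once."""
    out = []
    for t in range(len(Q)):
        idx = range(max(0, t - window + 1), t + 1)  # at most `window` past positions
        scale = 1.0 / math.sqrt(len(Q[t]))
        weights = softmax([dot(Q[t], K[j]) * scale for j in idx])
        out.append([sum(w * V[j][i] for w, j in zip(weights, idx))
                    for i in range(len(V[t]))])
    return out


if __name__ == "__main__":
    # Toy two-channel signal; identity projections (q = k = v = x) are an assumption.
    seq = [[math.sin(0.3 * t), math.cos(0.2 * t)] for t in range(10)]
    attn = StreamingSlidingWindowAttention(window=4)
    online = [attn.step(x, x, x) for x in seq]
    offline = windowed_attention_batch(seq, seq, seq, window=4)
    print(max(abs(a - b) for ro, rb in zip(online, offline) for a, b in zip(ro, rb)))
```

The buffer never holds more than `window` entries, so memory and compute per inference step stay constant regardless of sequence length. This bounded cost is what makes this form of attention usable for online processing.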

翻译:Transformer是目前在大多数序列处理任务中表现最好的网络。然而,Transformer中通常使用的自我关注机制需要较长的时间窗口来进行每个计算步骤,因此相较于递归神经网络(RNNs),使其不太适用于在线信号处理。在本文中,我们使用滑动窗口注意机制代替自我关注机制,证明了该机制对于具有有限范围依赖关系的输入与目标之间的连续信号更加高效,并且我们可以使用它逐个元素地处理序列,因此使其与在线处理相兼容。我们在NinaproDB8手指位置回归数据集上测试我们的模型,该数据集使用皮肤上的表面肌电信号(sEMG)测量以估计肌肉活动。我们的方法在这个数据集上实现了最新的准确性记录,并且在每个推理步骤中仅需要3.5毫秒的非常短的时间窗口。此外,我们使用Leaky-Integrate and Fire(LIF)神经元单元增加了网络的稀疏性,LIF是一种生物启发式的神经元模型,仅在通过阈值时稀疏地激活。因此,我们将突触操作数量降低了多达$\times5.3$,而不会丧失准确性。我们的结果为准确而快速的sEMG信号在线处理提供了巨大的希望,是Transformer和脉冲神经网络(SNNs)集成进行能量高效的时间信号处理的一步。
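
The sparsity mechanism of the LIF units described in the abstract can be sketched under several assumptions. The sketch uses a discrete-time update $u_t = \beta u_{t-1} + I_t$, emits a spike when $u_t \ge \theta$, and resets the potential by subtraction. The decay `beta`, the threshold, the reset rule and the operation-counting convention are all illustrative assumptions, not the paper's exact setup. The counting convention sends each nonzero output to `fan_out` downstream synapses. The function names `lif_layer` and `synaptic_ops` are hypothetical. The sketch compares the operation count of a dense, non-spiking activation with that of the resulting spike train on toy inputs. It does not reproduce the reported factor of 5.3.

```python
def lif_layer(inputs, beta=0.9, threshold=1.0):
    """Simulate a layer of LIF neurons.

    inputs[t][n] is the input current to neuron n at time step t.
    Returns binary spike trains spikes[t][n] in {0, 1}.
    """
    n = len(inputs[0])
    u = [0.0] * n  # membrane potentials
    spikes = []
    for current in inputs:
        out = []
        for i in range(n):
            u[i] = beta * u[i] + current[i]  # leaky integration
            if u[i] >= threshold:            # fire only when crossing the threshold
                out.append(1)
                u[i] -= threshold            # reset by subtraction (an assumption)
            else:
                out.append(0)
        spikes.append(out)
    return spikes


def synaptic_ops(activations, fan_out):
    """Count operations if every nonzero activation is sent to `fan_out` synapses."""
    return fan_out * sum(1 for row in activations for a in row if a != 0)


if __name__ == "__main__":
    # Three neurons driven by constant currents for four time steps (toy input).
    currents = [[0.5, 0.0, 1.2]] * 4
    spikes = lif_layer(currents)
    print(synaptic_ops(currents, fan_out=8), synaptic_ops(spikes, fan_out=8))  # prints: 64 40
```

A neuron whose potential stays below threshold emits nothing. For example, the first neuron in the demo fires only once in four steps, so it triggers few downstream operations. With binary spikes, each event typically requires only an accumulate rather than a multiply-accumulate.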