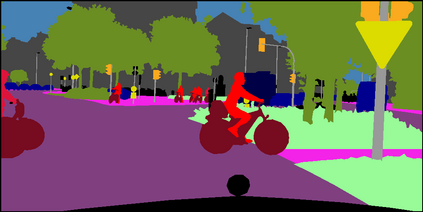

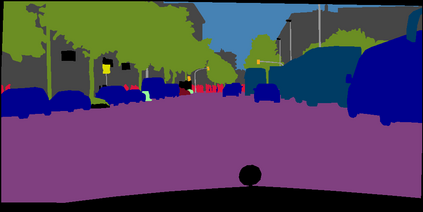

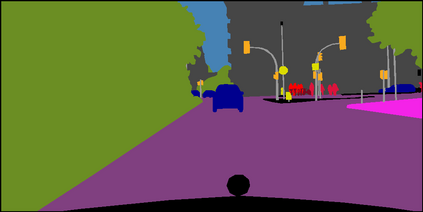

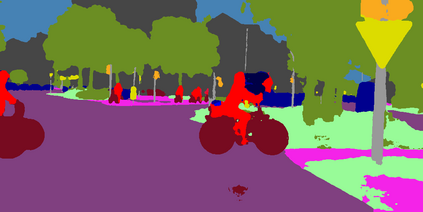

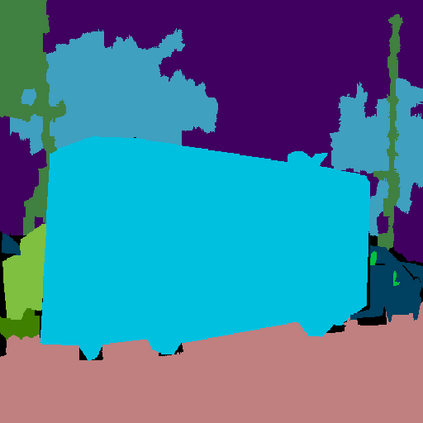

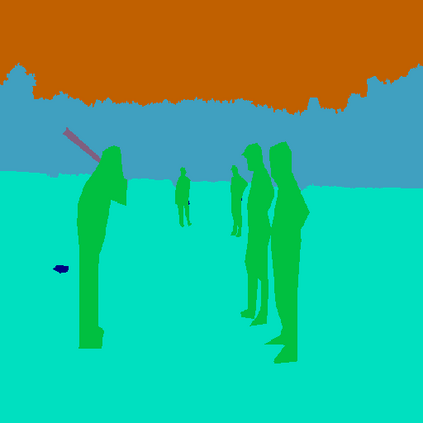

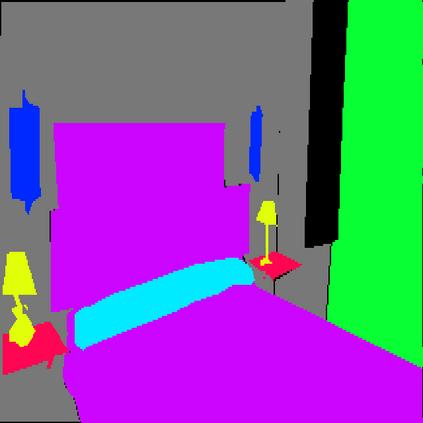

Finetuning a pretrained backbone in the encoder of an image transformer network has been the traditional approach to semantic segmentation. However, such an approach leaves out the semantic context that an image provides during the encoding stage. This paper argues that incorporating the semantic information of the image into pretrained hierarchical transformer-based backbones while finetuning improves performance considerably. To achieve this, we propose SeMask, a simple and effective framework that incorporates semantic information into the encoder with the help of a semantic attention operation. In addition, we use a lightweight semantic decoder during training to provide supervision to the intermediate semantic prior maps at every stage. Our experiments demonstrate that incorporating semantic priors enhances the performance of established hierarchical encoders at the cost of only a slight increase in the number of FLOPs. We provide empirical evidence by integrating SeMask into each variant of the Swin-Transformer as our encoder, paired with different decoders. Our framework achieves a new state-of-the-art of 58.22% mIoU on the ADE20K dataset and an improvement of over 3% mIoU on the Cityscapes dataset. The code and checkpoints are publicly available at https://github.com/Picsart-AI-Research/SeMask-Segmentation.
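The results in this abstract are reported as mean Intersection-over-Union (mIoU). For reference, here is a minimal sketch of how mIoU is commonly computed from a per-pixel confusion matrix: per-class IoU = TP / (TP + FP + FN), averaged over classes. This is not taken from the SeMask code. The function names `confusion_matrix` and `mean_iou`, the flattened integer label inputs, and the ignore index of 255 are all illustrative assumptions. Benchmark evaluation usually accumulates the confusion matrix over the whole validation set before averaging, rather than averaging per image.

```python
from typing import List, Sequence


def confusion_matrix(preds: Sequence[int], labels: Sequence[int],
                     num_classes: int, ignore_index: int = 255) -> List[List[int]]:
    """Hypothetical helper: rows index ground truth, columns index prediction."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(preds, labels):
        if t == ignore_index:
            continue  # assumed convention: skip unlabeled pixels
        cm[t][p] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Hypothetical helper: mean of per-class TP / (TP + FP + FN)."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                         # true class c, predicted otherwise
        fp = sum(cm[r][c] for r in range(n)) - tp    # other classes predicted as c
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both ground truth and prediction
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    labels = [0, 0, 1, 1, 2, 2, 255]  # last pixel is unlabeled
    preds = [0, 1, 1, 1, 2, 0, 2]
    cm = confusion_matrix(preds, labels, num_classes=3)
    print(f"mIoU = {mean_iou(cm):.4f}")  # prints "mIoU = 0.5000"
```

In this toy example the per-class IoUs are 1/3, 2/3 and 1/2, so their mean is 0.5. Skipping classes with an empty union mirrors the common "nan-mean" convention. Other toolkits may handle such classes differently.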
