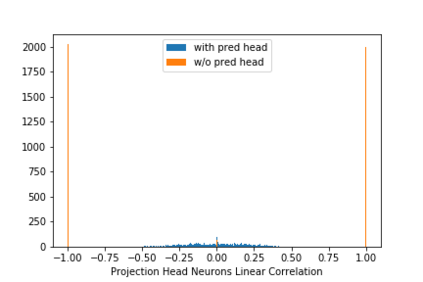

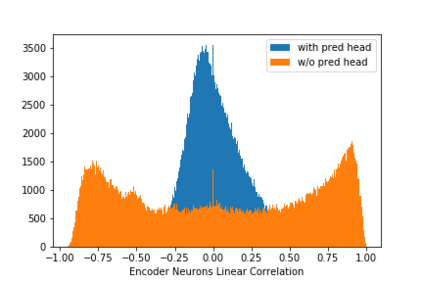

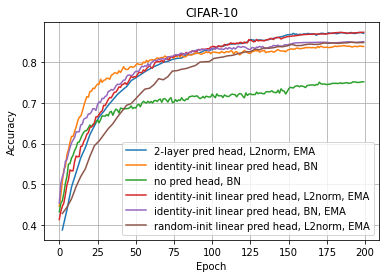

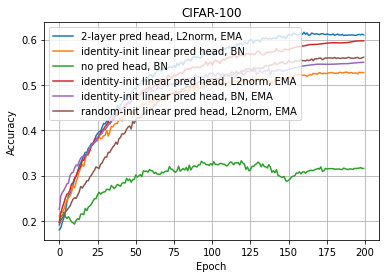

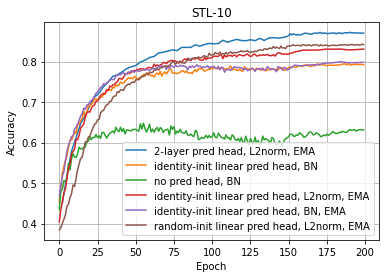

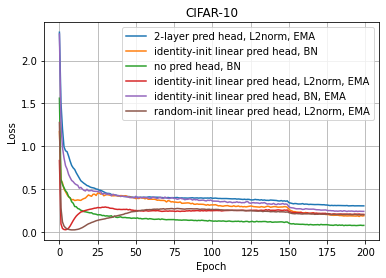

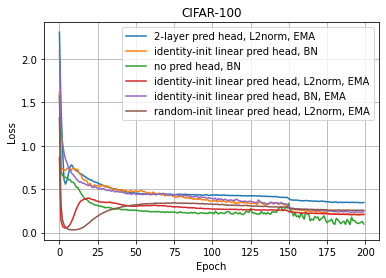

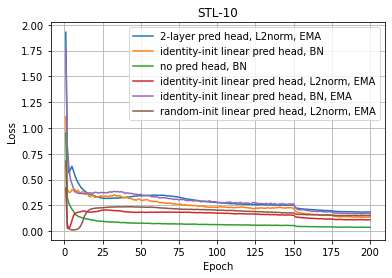

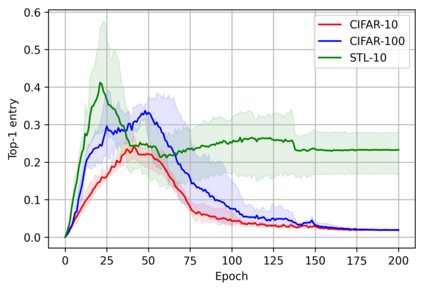

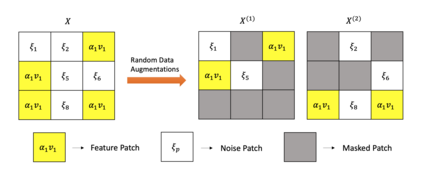

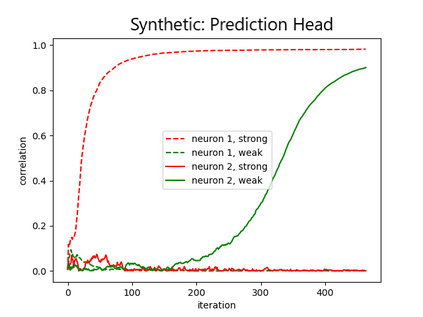

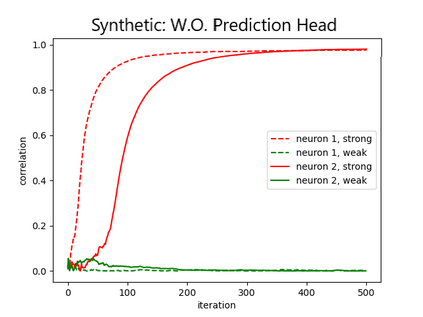

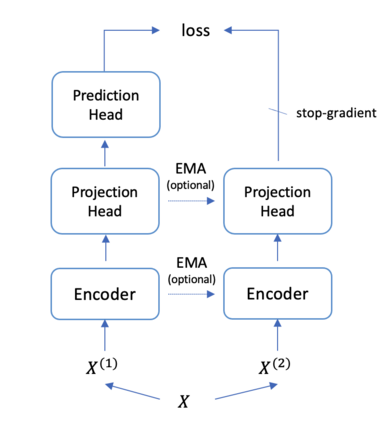

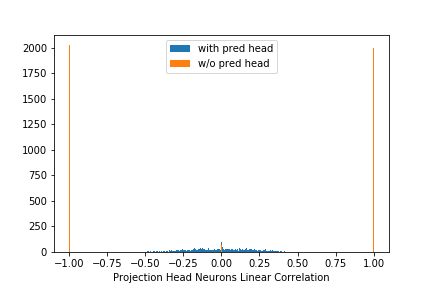

Recently the surprising discovery of the Bootstrap Your Own Latent (BYOL) method by Grill et al. shows the negative term in contrastive loss can be removed if we add the so-called prediction head to the network. This initiated the research of non-contrastive self-supervised learning. It is mysterious why even when there exist trivial collapsed global optimal solutions, neural networks trained by (stochastic) gradient descent can still learn competitive representations. This phenomenon is a typical example of implicit bias in deep learning and remains little understood. In this work, we present our empirical and theoretical discoveries on non-contrastive self-supervised learning. Empirically, we find that when the prediction head is initialized as an identity matrix with only its off-diagonal entries being trainable, the network can learn competitive representations even though the trivial optima still exist in the training objective. Theoretically, we present a framework to understand the behavior of the trainable, but identity-initialized prediction head. Under a simple setting, we characterized the substitution effect and acceleration effect of the prediction head. The substitution effect happens when learning the stronger features in some neurons can substitute for learning these features in other neurons through updating the prediction head. And the acceleration effect happens when the substituted features can accelerate the learning of other weaker features to prevent them from being ignored. These two effects enable the neural networks to learn all the features rather than focus only on learning the stronger features, which is likely the cause of the dimensional collapse phenomenon. To the best of our knowledge, this is also the first end-to-end optimization guarantee for non-contrastive methods using nonlinear neural networks with a trainable prediction head and normalization.

翻译:最近,Grill 等人( Grill et al.) 发现了令人惊讶的“ 靴子陷阱” 你 Own Lient (BYOL) 方法,令人惊讶地发现了最近Grill 等人(BYOL) 的方法, 这表明,如果我们在网络中添加了所谓的预测头, 反向损失的负面术语是可以消除的。 这引发了对非争议性自我监督学习的研究。 这引发了对非争议性自我监督的自我监督学习的研究。 它启动了对非争议性自我监督的自我监督学习的研究。 即使全球最佳解决方案崩溃时, 由( 随机) 梯度梯度下降训练的神经网络仍然可以学习竞争性的表现。 理论上说, 我们提供了一个框架来理解可训练性但身份初始化的预测头部的行为, 在一个简单的背景下, 我们描述了非争议性自我监督性自我监督的自我监督的学习头部的替代效应和加速效果。 在学习神经加速性循环中, 最薄弱的功能会随着某些神经加速的功能的加速发生, 学习, 也能够学习其他的加速性效果。