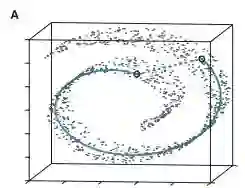

We propose K-Deep Simplex (KDS) which, given a set of data points, learns a dictionary comprising synthetic landmarks, along with representation coefficients supported on a simplex. KDS integrates manifold learning and sparse coding/dictionary learning: reconstruction term, as in classical dictionary learning, and a novel local weighted $\ell_1$ penalty that encourages each data point to represent itself as a convex combination of nearby landmarks. We solve the proposed optimization program using alternating minimization and design an efficient, interpretable autoencoder using algorithm enrolling. We theoretically analyze the proposed program by relating the weighted $\ell_1$ penalty in KDS to a weighted $\ell_0$ program. Assuming that the data are generated from a Delaunay triangulation, we prove the equivalence of the weighted $\ell_1$ and weighted $\ell_0$ programs. If the representation coefficients are given, we prove that the resulting dictionary is unique. Further, we show that low-dimensional representations can be efficiently obtained from the covariance of the coefficient matrix. We apply KDS to the unsupervised clustering problem and prove theoretical performance guarantees. Experiments show that the algorithm is highly efficient and performs competitively on synthetic and real data sets.

翻译:我们提议K- Deep Slipplex (KDS), 根据一组数据点, 我们学习一个由合成里程碑组成的字典, 以及一个简单x所支持的代表性系数。 KDS 包含多种学习和稀少编码/字典学习: 重建术语, 如古典字典的学习, 以及一个新的本地加权 $\ ell_ 1美元 罚款, 这鼓励每个数据点将自己作为附近地标的组合。 我们用交替最小化和设计一个高效的、可解释的自动编码器来解决拟议的优化方案。 我们从理论上分析拟议的方案, 将KDS 中加权的 $\ ell_ 1美元罚款与一个加权的 $\ ell_ 0美元 程序联系起来。 假设数据来自一个重塑的三角, 我们证明加权的 $\ ell_ 1美元 和 加权的 美元 美元 等值 程序是相配制的。 如果给出了代表系数, 我们证明由此产生的字典是独一无二的。 此外, 我们证明可以从系数矩阵的变量中有效地获得低维度的表述。 我们将KDS 应用于一个非超强的合成数据组合, 并证明具有高度的实验性的数据。