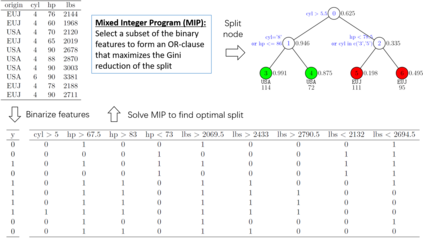

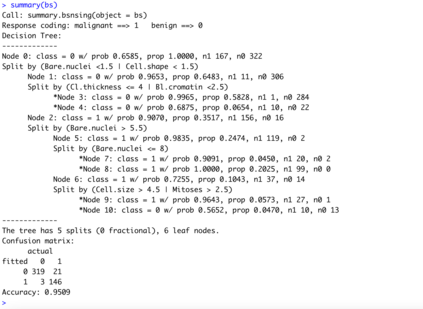

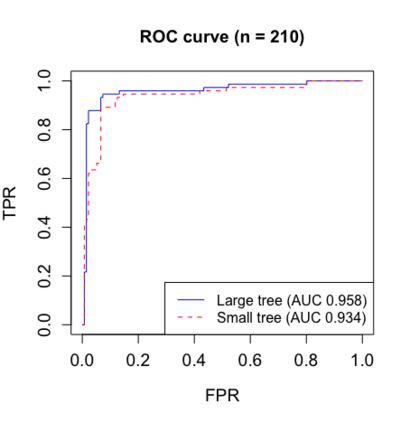

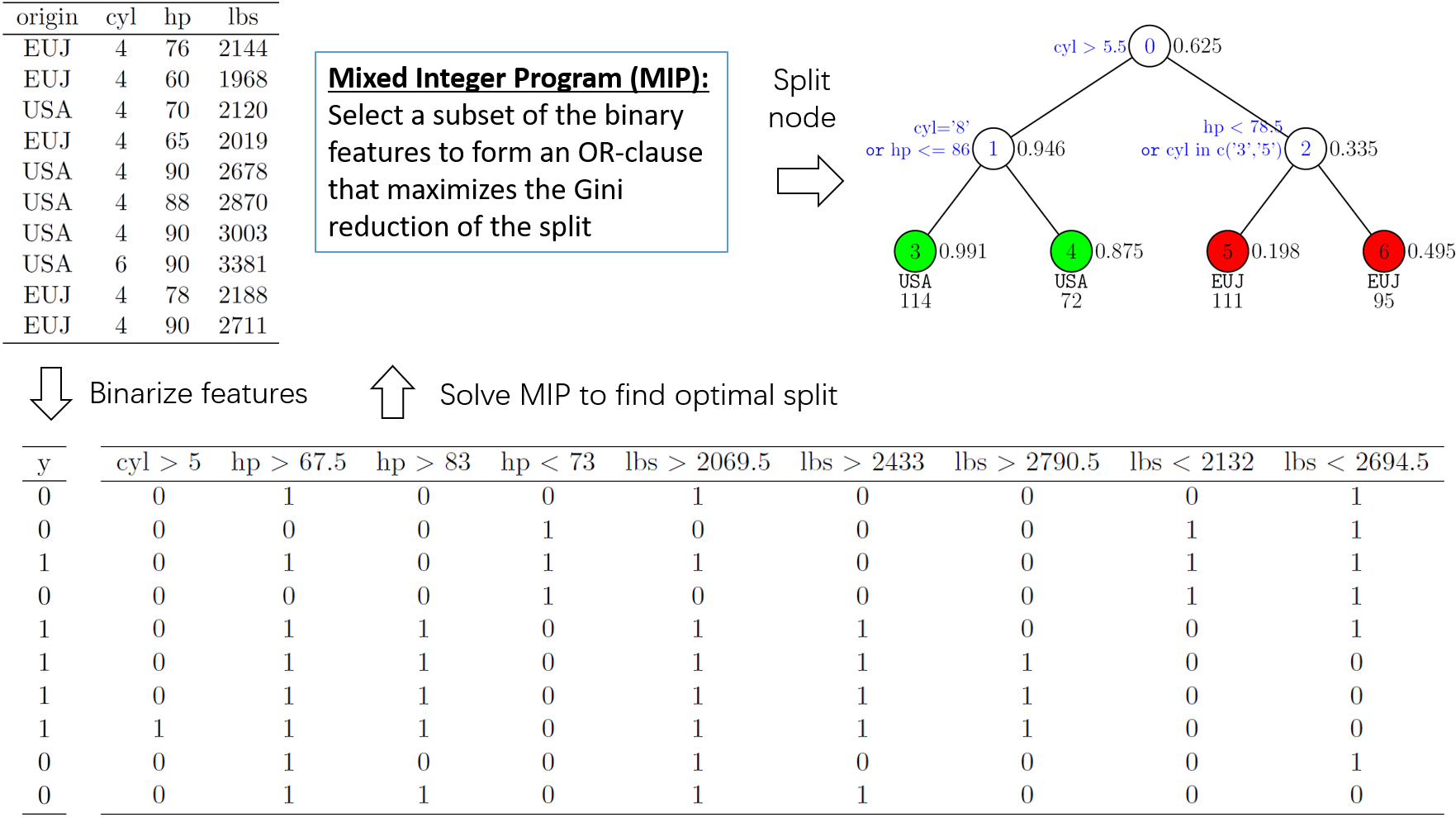

This paper proposes a new mixed-integer programming (MIP) formulation to optimize split-rule selection in the decision tree induction process, and develops an efficient search algorithm that solves practical instances of the MIP model faster than commercial solvers. The formulation is novel in that it directly maximizes the Gini reduction, an effective split-selection criterion that has never before been modeled in a mathematical program because of its nonconvexity. The proposed approach differs from other optimal classification tree models in that it does not attempt to optimize the whole tree; the flexibility of the recursive partitioning scheme is therefore retained, and the optimization model is more tractable. The approach is implemented in an open-source R package named bsnsing. Benchmarking experiments on 75 open data sets suggest that bsnsing trees are the most capable of discriminating new cases, compared with trees trained by other decision tree codes, including the rpart, C50, party and tree packages in R. Compared with other optimal decision tree packages, including DL8.5, OSDT, GOSDT and, indirectly, others, bsnsing stands out in training speed, ease of use and breadth of applicability without sacrificing prediction accuracy.
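To make the split criterion concrete, the sketch below computes the Gini reduction of a binary split and, as a simple baseline, exhaustively enumerates single-feature thresholds to find the rule with the largest reduction. It is an illustration under stated assumptions, not the bsnsing MIP formulation or its search algorithm: the function names `gini`, `gini_reduction` and `best_threshold` are hypothetical, and the impurity decrease is weighted by child-node proportions, as in common CART-style definitions.

```python
from collections import Counter
from typing import Sequence


def gini(labels: Sequence[int]) -> float:
    """Gini impurity 1 - sum_k p_k^2 of a collection of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0  # convention: an empty node contributes no impurity
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def gini_reduction(labels: Sequence[int], goes_left: Sequence[bool]) -> float:
    """Impurity decrease when a binary rule sends each case left (True) or right (False).

    Assumption: children are weighted by their share of the parent's cases.
    """
    left = [y for y, g in zip(labels, goes_left) if g]
    right = [y for y, g in zip(labels, goes_left) if not g]
    n = len(labels)
    weighted_child = (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
    return gini(labels) - weighted_child


def best_threshold(x: Sequence[float], labels: Sequence[int]) -> tuple[float, float]:
    """Try every rule 'x <= t' over the observed values; return (t, reduction).

    Hypothetical brute-force baseline, not the MIP-based selection in bsnsing.
    """
    best_t, best_gain = float("nan"), -1.0
    for t in sorted(set(x)):
        gain = gini_reduction(labels, [xi <= t for xi in x])
        if gain > best_gain:  # strict '>' keeps the smallest threshold on ties
            best_t, best_gain = t, gain
    return best_t, best_gain


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    y = [0, 0, 0, 1, 1, 1]
    print(best_threshold(x, y))  # (3.0, 0.5)
```

Enumeration of this kind is practical only for simple rule families such as single-feature thresholds; the approach described above instead selects the split rule by solving a MIP that maximizes the Gini reduction directly.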