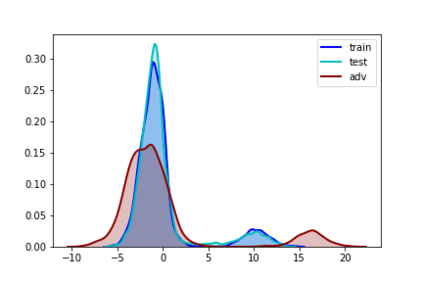

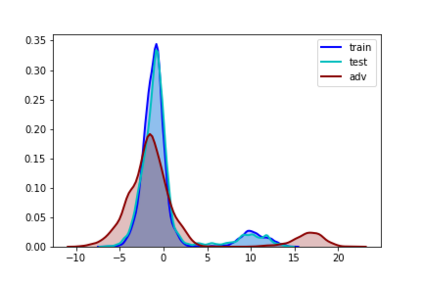

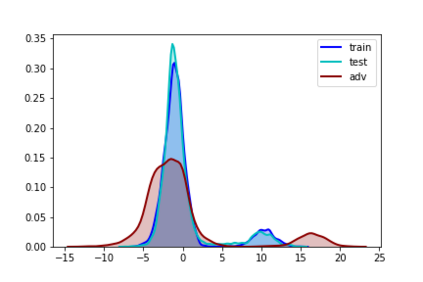

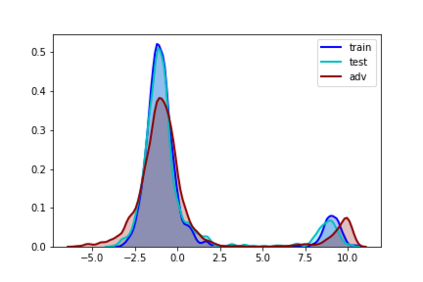

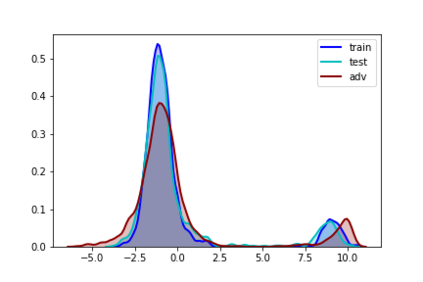

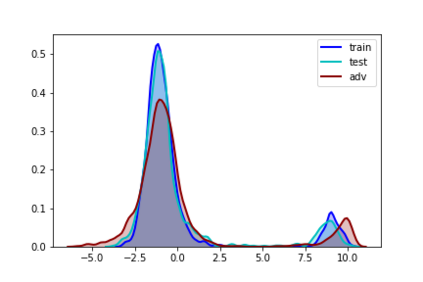

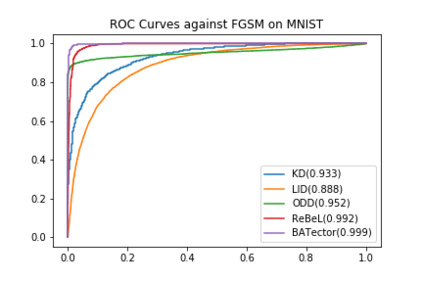

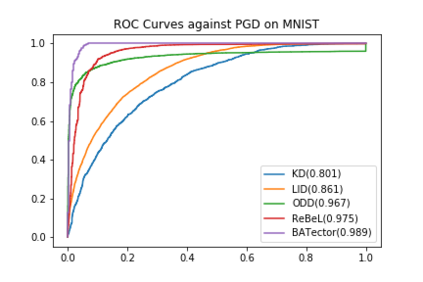

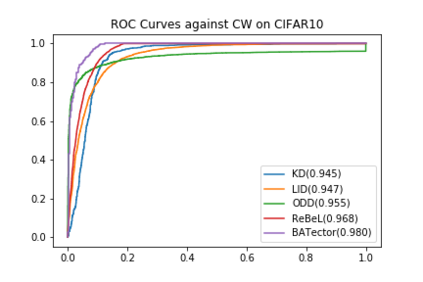

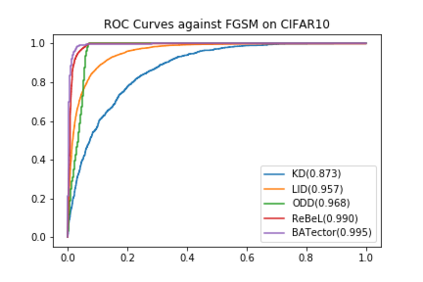

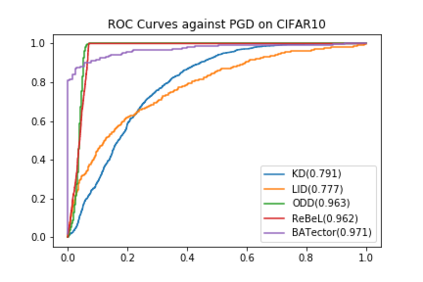

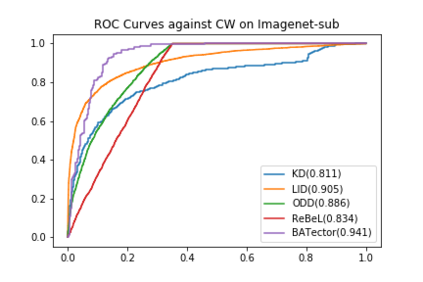

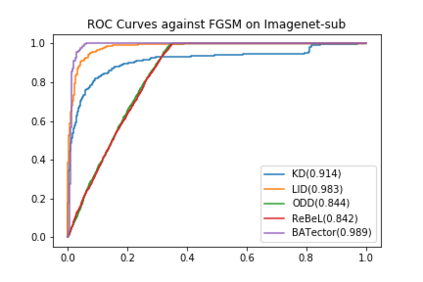

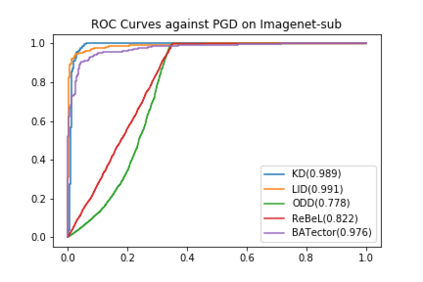

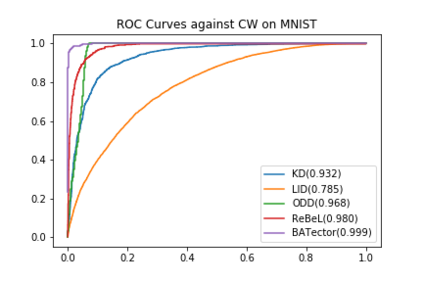

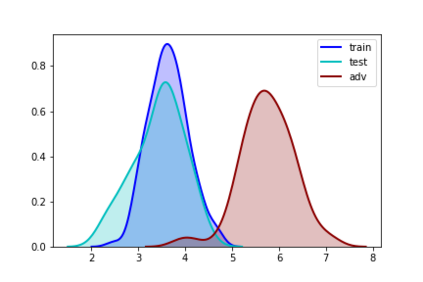

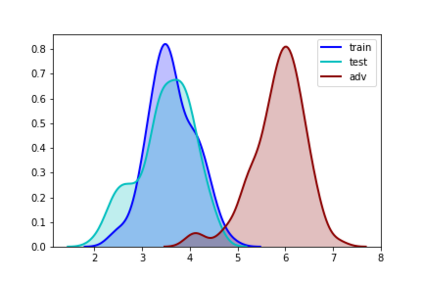

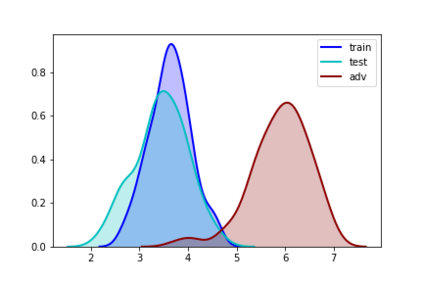

Deep neural networks (DNNs) are vulnerable to adversarial examples, i.e., examples that are carefully crafted to fool the DNNs while remaining indistinguishable from natural images to humans. In this paper, we propose a new framework for detecting adversarial examples, motivated by two observations: random components can improve the smoothness of predictors, and they make it easier to simulate the output distribution of a deep neural network. Building on these observations, we propose a novel Bayesian adversarial example detector, BATector for short, to improve the performance of adversarial example detection. Specifically, we study the distributional difference in hidden layer output between natural and adversarial examples, and propose to use the randomness of a Bayesian neural network (BNN) to simulate the hidden layer output distribution and to leverage the dispersion of that distribution to detect adversarial examples. The advantage of a BNN is that its output is stochastic, whereas neural networks without random components lack this property. Empirical results on several benchmark datasets against popular attacks show that the proposed BATector outperforms state-of-the-art detectors in adversarial example detection.
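The idea of using BNN randomness to simulate a hidden layer output distribution and then measuring its dispersion can be illustrated with a small sketch. This is not the BATector implementation: the single Gaussian-weight layer, the function names `stochastic_hidden` and `dispersion_score`, and the choice of mean per-unit standard deviation as the dispersion measure are all assumptions made for illustration, since the abstract does not specify them.

```python
import numpy as np

def stochastic_hidden(x, w_mean, w_std, rng):
    """One forward pass through a hidden layer with randomly sampled weights.

    Assumption: weights follow an independent Gaussian N(w_mean, w_std**2),
    a common BNN approximation; the abstract does not state the actual form.
    """
    w = w_mean + w_std * rng.standard_normal(w_mean.shape)
    return np.maximum(x @ w, 0.0)  # ReLU activation

def dispersion_score(x, w_mean, w_std, n_samples=64, seed=0):
    """Simulate the hidden output distribution and summarize its spread.

    Hypothetical dispersion measure: the standard deviation of each hidden
    unit across the sampled passes, averaged over units.
    """
    rng = np.random.default_rng(seed)
    outs = np.stack([stochastic_hidden(x, w_mean, w_std, rng)
                     for _ in range(n_samples)])
    return float(outs.std(axis=0).mean())

# Toy usage: one input with 4 features, a hidden layer with 3 units.
x = np.ones(4)
w_mean = np.ones((4, 3))
w_std = 0.5 * np.ones((4, 3))
score = dispersion_score(x, w_mean, w_std)
```

A detector built on such a score would compare it against a threshold calibrated on natural examples; how that threshold is chosen, and in which direction the scores of adversarial examples differ, is left open here because the abstract does not say. With `w_std` set to zero the layer becomes deterministic and the score collapses to zero, which mirrors the abstract's point that a network without random components offers no such distribution to measure.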
