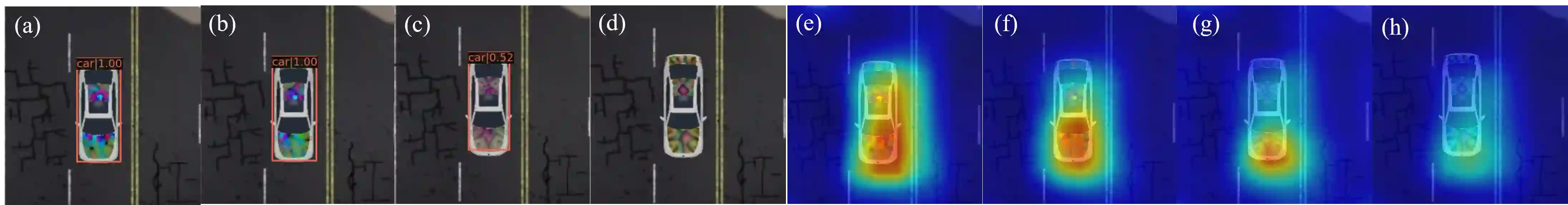

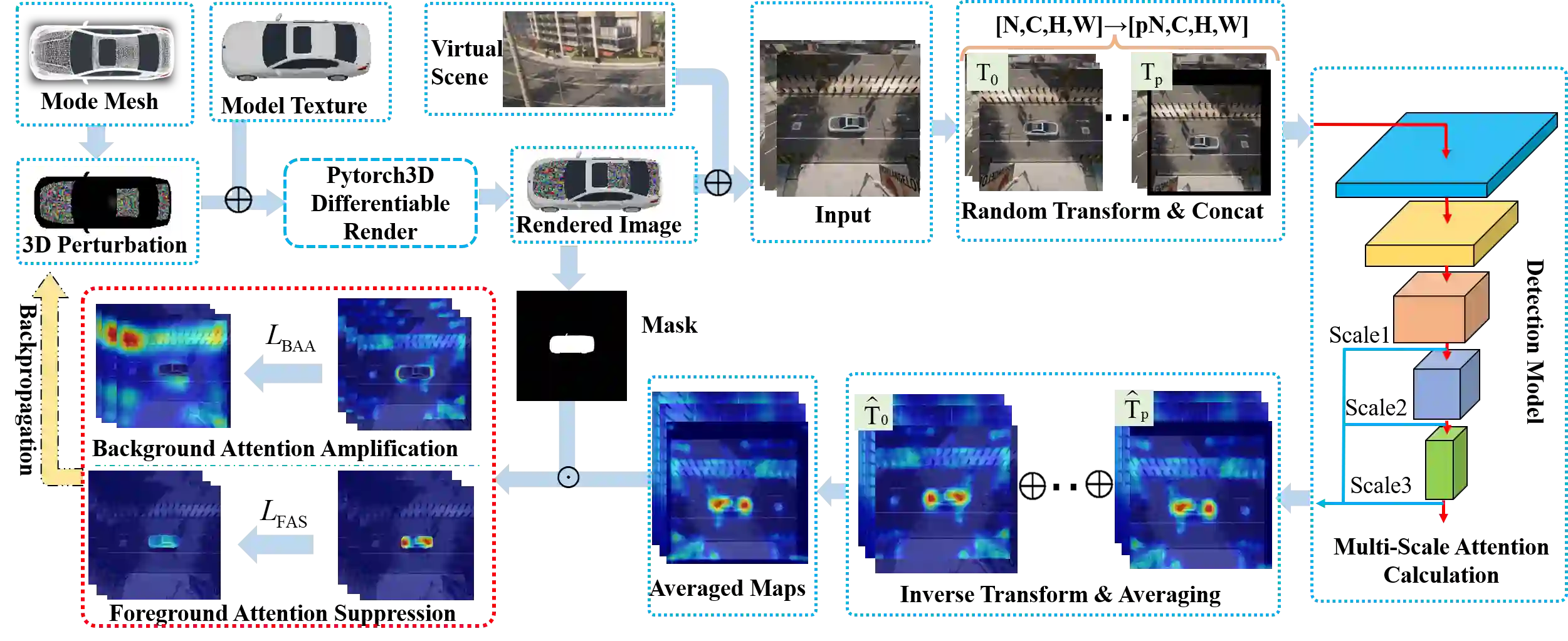

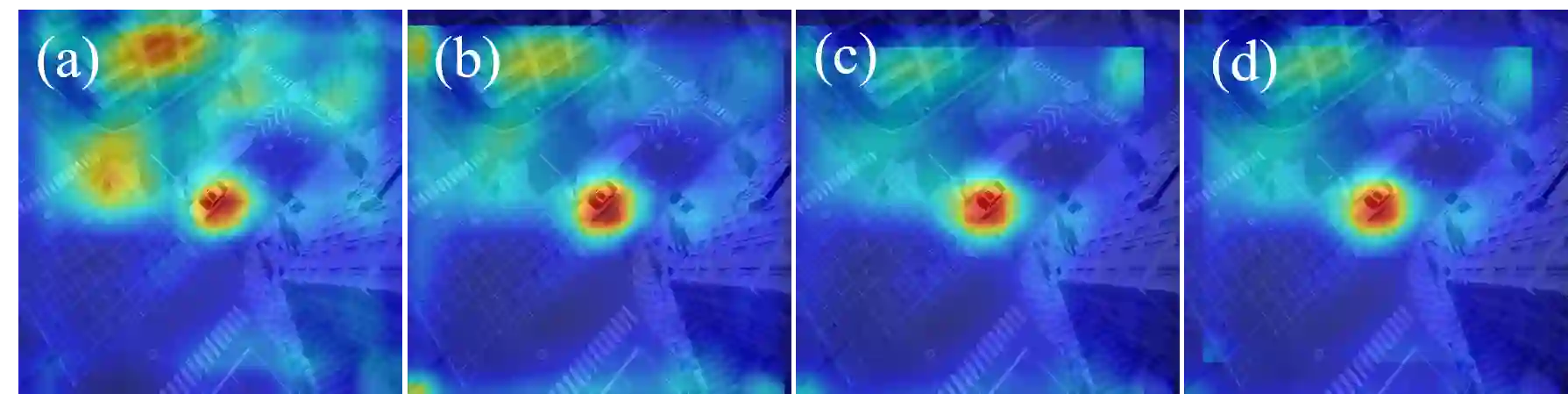

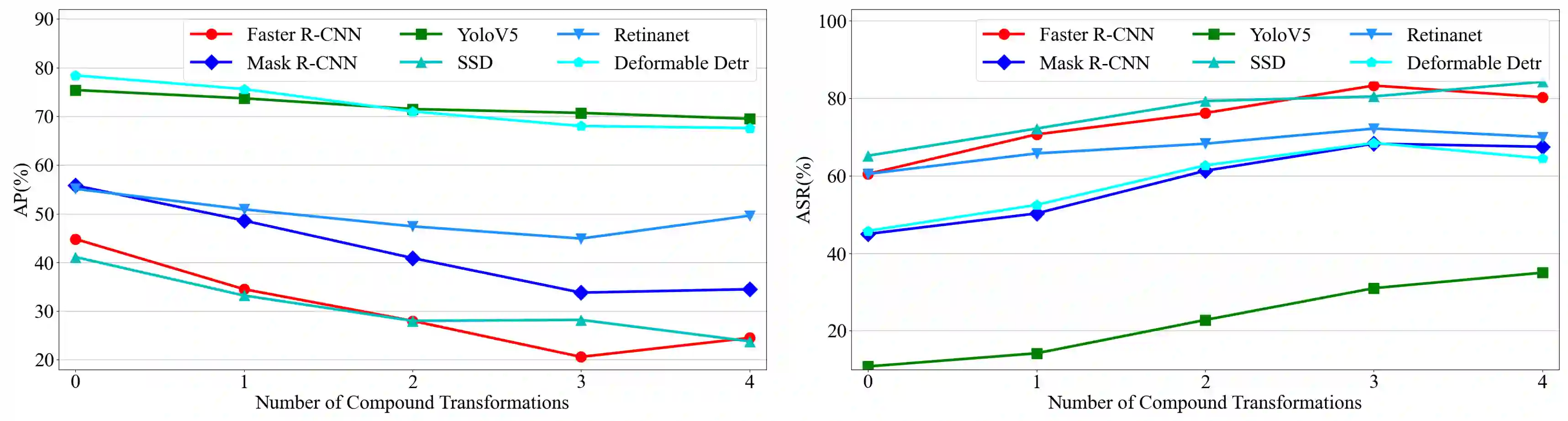

Transferable adversarial attacks have drawn sustained attention ever since deep learning models were shown to be vulnerable to adversarial samples. However, existing physical attack methods pay too little attention to transferability to unseen models, which leads to poor black-box attack performance. In this paper, we put forward a novel method for generating physically realizable adversarial camouflage that achieves transferable attacks against detection models. More specifically, we first introduce multi-scale attention maps based on detection models to capture features of objects at various resolutions. Meanwhile, we adopt a sequence of composite transformations to obtain averaged attention maps, which curbs model-specific noise in the attention and thus further boosts transferability. Unlike general visualization and interpretation methods, in which model attention should be placed on the foreground object as much as possible, we attack the separable attention from the opposite perspective, i.e., we suppress attention on the foreground and enhance attention on the background. Consequently, transferable adversarial camouflage can be generated efficiently with our novel attention-based loss function. Extensive comparative experiments show that our method outperforms state-of-the-art methods.
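The abstract does not give the loss in closed form, so the following is only a minimal NumPy sketch of the idea as described: attention maps are averaged over a set of transformations, and a loss is formed that drops when foreground attention falls and background attention rises. Every name here (`averaged_attention`, `separable_attention_loss`, `toy_attention`, `downsample`, `TRANSFORMS`) is hypothetical. The toy attention function stands in for a detector's attention, the flips stand in for the composite transformations, and downsampling stands in for attention taken at different feature scales. It is not the authors' implementation.

```python
import numpy as np

def averaged_attention(attention_fn, image, transforms):
    """Average attention over transformed copies of the image.

    Each entry of `transforms` is a (forward, inverse) pair; the inverse
    maps the attention back to the original image frame before averaging.
    """
    maps = [inverse(attention_fn(forward(image))) for forward, inverse in transforms]
    return np.mean(maps, axis=0)

def separable_attention_loss(att_maps, fg_masks):
    """Sum over scales of (mean foreground attention - mean background attention).

    Minimizing this value suppresses foreground attention and enhances
    background attention. This exact form is an assumption, not the paper's.
    """
    total = 0.0
    for att, mask in zip(att_maps, fg_masks):
        bg_mask = 1.0 - mask
        fg_mean = (att * mask).sum() / max(mask.sum(), 1.0)
        bg_mean = (att * bg_mask).sum() / max(bg_mask.sum(), 1.0)
        total += fg_mean - bg_mean
    return float(total)

def toy_attention(image):
    """Hypothetical stand-in for a detector's attention map: normalized intensity."""
    return image / (image.max() + 1e-8)

def downsample(x, factor):
    """Average-pool a 2D array by an integer factor (stand-in for a coarser scale)."""
    h, w = x.shape
    return x.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

# Assumed transformation set: identity plus two flips, each its own inverse.
TRANSFORMS = [
    (lambda x: x, lambda a: a),
    (np.fliplr, np.fliplr),
    (np.flipud, np.flipud),
]

# Toy scene: a bright 4x4 "object" on a dim 8x8 background.
image = np.full((8, 8), 0.1)
image[2:6, 2:6] = 1.0
mask = np.zeros((8, 8))
mask[2:6, 2:6] = 1.0

att = averaged_attention(toy_attention, image, TRANSFORMS)
att_maps = [att, downsample(att, 2)]
fg_masks = [mask, downsample(mask, 2)]

loss_clean = separable_attention_loss(att_maps, fg_masks)
# Inverting the attention mimics a successful camouflage in this toy setting.
loss_camouflaged = separable_attention_loss([1.0 - a for a in att_maps], fg_masks)
```

In this toy setting the loss is positive when attention sits on the object and negative once attention has moved to the background. In an actual attack the camouflage texture would be optimized by gradient descent on such a loss through a differentiable renderer and detector, which this sketch does not attempt.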
