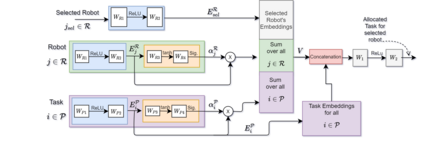

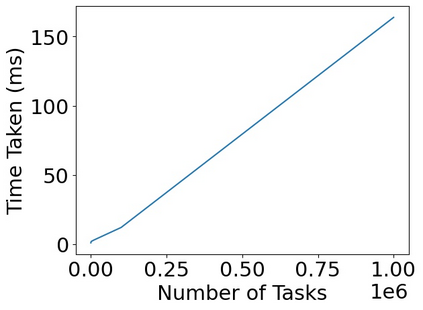

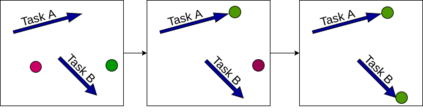

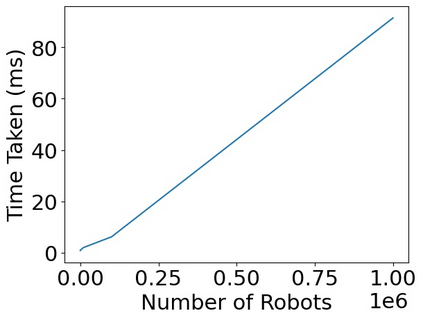

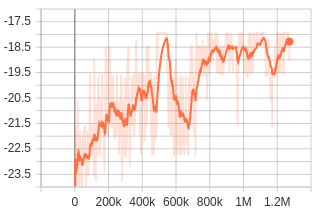

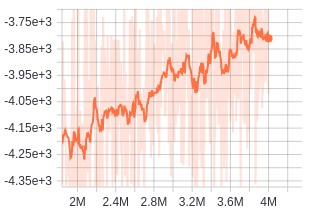

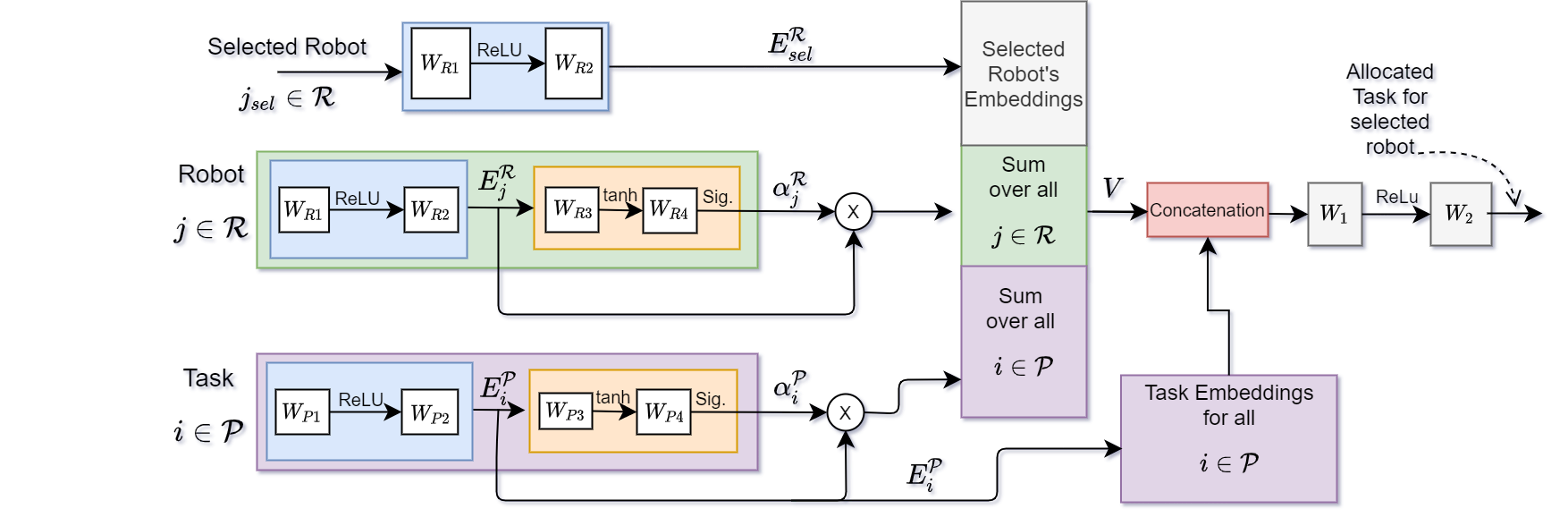

We present a novel reinforcement learning based algorithm for multi-robot task allocation problem in warehouse environments. We formulate it as a Markov Decision Process and solve via a novel deep multi-agent reinforcement learning method (called RTAW) with attention inspired policy architecture. Hence, our proposed policy network uses global embeddings that are independent of the number of robots/tasks. We utilize proximal policy optimization algorithm for training and use a carefully designed reward to obtain a converged policy. The converged policy ensures cooperation among different robots to minimize total travel delay (TTD) which ultimately improves the makespan for a sufficiently large task-list. In our extensive experiments, we compare the performance of our RTAW algorithm to state of the art methods such as myopic pickup distance minimization (greedy) and regret based baselines on different navigation schemes. We show an improvement of upto 14% (25-1000 seconds) in TTD on scenarios with hundreds or thousands of tasks for different challenging warehouse layouts and task generation schemes. We also demonstrate the scalability of our approach by showing performance with up to $1000$ robots in simulations.

翻译:我们为仓库环境中的多机器人任务分配问题提出了一个基于新颖强化学习的算法。我们把它设计成一个Markov 决策程序,并通过一种全新的多试剂强化学习方法(称为RTAW)和关注激励的政策架构加以解决。因此,我们拟议的政策网络使用独立于机器人/任务数目的全球嵌入器。我们用最优政策优化算法进行培训,并使用精心设计的奖励办法获得一致的政策。这一趋同政策确保不同机器人之间的合作,以尽量减少旅行总延误(TTD),最终将造价改进为足够大的任务列表。我们在广泛的实验中,将我们的RTAW算法的性能与各种导航计划的近视距离最小化(greedy)和遗憾基线等艺术方法的状态进行比较。我们显示,在TTD中,以数百或数千项不同具有挑战性的仓库布局和任务生成计划的任务为假设,将高达14%(25-1000秒)。我们还通过在模拟中展示高达1 000美元的机器人的性能来显示我们的方法的可扩展性。</s>