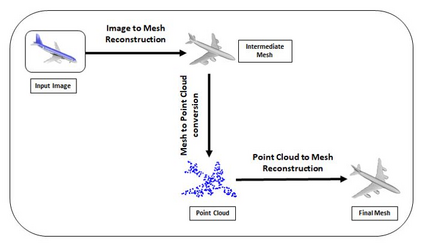

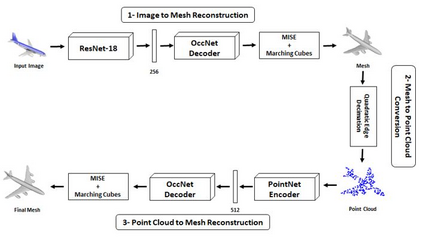

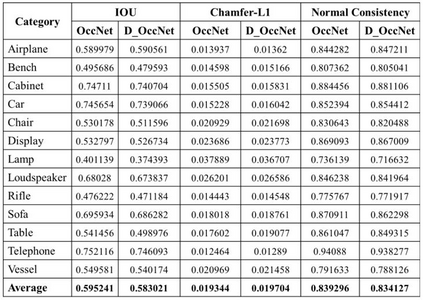

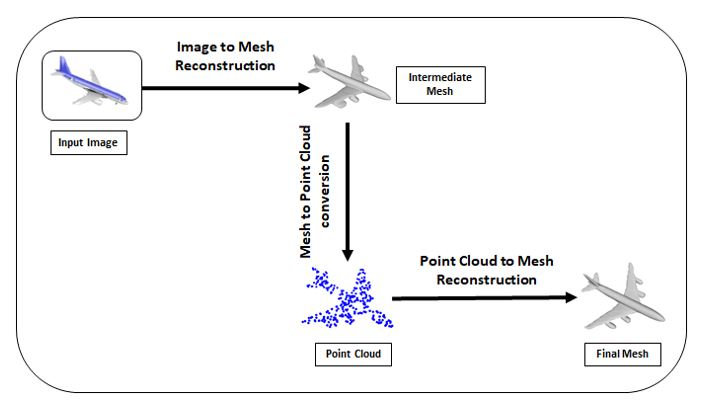

Deep-learning-based 3D reconstruction from a single-view 2D image is becoming increasingly popular because of its wide range of real-world applications, but the task is inherently challenging because an object is only partially observable from a single perspective. Recently, the state-of-the-art, probability-based Occupancy Networks reconstructed 3D surfaces from three different input domains: single-view 2D images, point clouds, and voxels. In this study, we extend the work on Occupancy Networks by exploiting cross-domain learning between the image and point cloud domains. Specifically, we first convert the single-view 2D image into a simpler point cloud representation and then reconstruct a 3D surface from that point cloud. Our network, the Double Occupancy Network (D-OccNet), outperforms Occupancy Networks in terms of visual quality and the level of detail captured in the 3D reconstruction.
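The two-stage data flow described above (image to point cloud, then point cloud to an occupancy field from which a surface is extracted) can be sketched in a few lines of Python. This is a minimal, non-learned illustration under stated assumptions, not D-OccNet's or Occupancy Networks' actual code: both learned stages are replaced by hand-written stand-ins, and the names `image_to_point_cloud`, `encode`, `occupancy`, `extract_occupied_voxels`, and `reconstruct` are hypothetical. The decoder mirrors the Occupancy Networks idea of mapping a query point plus a code summarizing the input to the probability that the point lies inside the object.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Code = Tuple[Point, float]  # hypothetical latent code: (centroid, mean radius)


def image_to_point_cloud(image: Sequence[Sequence[float]], n: int = 200) -> List[Point]:
    """Stage 1 stand-in (hypothetical). A real model would regress points from
    the pixels; this stub ignores the image and returns n points on a unit
    sphere (Fibonacci lattice) so the pipeline runs end to end."""
    golden = math.pi * (3.0 - math.sqrt(5.0))  # golden angle in radians
    points = []
    for i in range(n):
        y = 1.0 - 2.0 * (i + 0.5) / n  # heights spread evenly in (-1, 1)
        r = math.sqrt(1.0 - y * y)     # radius of the horizontal slice
        theta = golden * i
        points.append((r * math.cos(theta), y, r * math.sin(theta)))
    return points


def encode(points: Sequence[Point]) -> Code:
    """Stand-in point-cloud encoder: summarizes the cloud as (centroid, mean radius)."""
    n = len(points)
    centroid = tuple(sum(p[k] for p in points) / n for k in range(3))
    radius = sum(math.dist(p, centroid) for p in points) / n
    return centroid, radius


def occupancy(query: Point, code: Code, sharpness: float = 10.0) -> float:
    """Stand-in decoder f(p, z): probability in (0, 1) that `query` is inside."""
    centroid, radius = code
    return 1.0 / (1.0 + math.exp(-sharpness * (radius - math.dist(query, centroid))))


def extract_occupied_voxels(code: Code, resolution: int = 16,
                            tau: float = 0.5, bound: float = 1.5) -> List[Point]:
    """Evaluate f on a regular grid over [-bound, bound]^3 and keep the grid
    points whose occupancy probability is at least tau."""
    step = 2.0 * bound / (resolution - 1)
    occupied = []
    for i in range(resolution):
        for j in range(resolution):
            for k in range(resolution):
                p = (-bound + i * step, -bound + j * step, -bound + k * step)
                if occupancy(p, code) >= tau:
                    occupied.append(p)
    return occupied


def reconstruct(image: Sequence[Sequence[float]]) -> List[Point]:
    cloud = image_to_point_cloud(image)   # stage 1: image -> point cloud
    code = encode(cloud)                  # stage 2: point cloud -> code
    return extract_occupied_voxels(code)  # occupancy field -> discrete shape
```

Keeping the surface as a thresholded set of grid points is a simplification chosen to stay dependency-free; in practice a mesh would typically be extracted from the probability field with an isosurface method such as marching cubes.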

翻译:基于 3D 的深度学习重建单一视图 2D 图像正在变得越来越受欢迎, 原因是它们具有广泛的真实世界应用, 但这一任务具有内在挑战性, 因为一个对象从一个角度部分可观测。 最近, 基于 日期概率的占用网络从三种不同类型的输入领域重建了 3D 表面 : 单一视图 2D 图像、 点云和 voxel 。 在这项研究中, 我们通过利用图像和点云域的跨域学习来扩展占用网络的工作。 具体地说, 我们首先将单一视图 2D 图像转换为更简单的点云表, 然后从中重建一个 3D 表面 。 我们的网络, 双位占用网络( D- OccNet) 从视觉质量和在 3D 重建中捕捉到的细节上, 超越了默认 网络 。