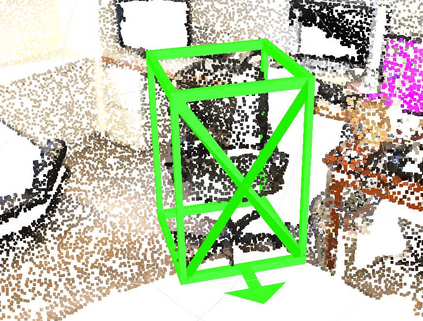

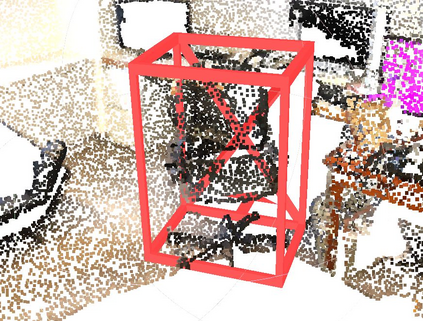

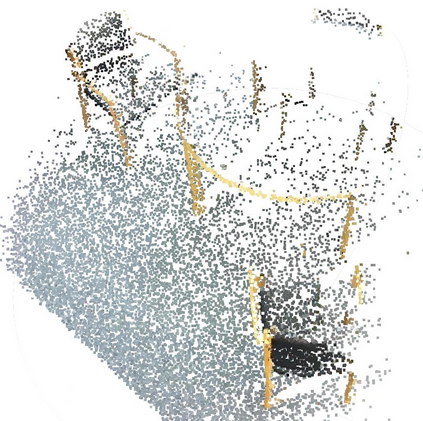

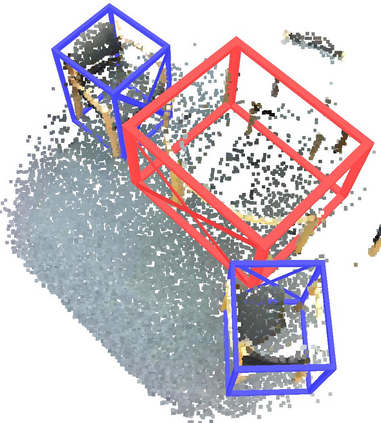

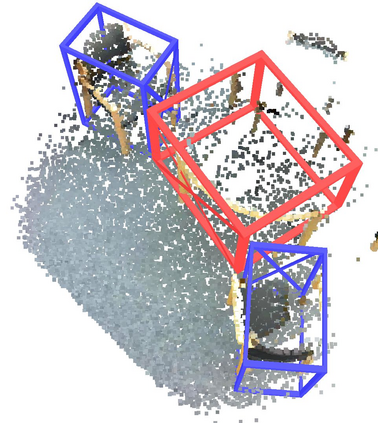

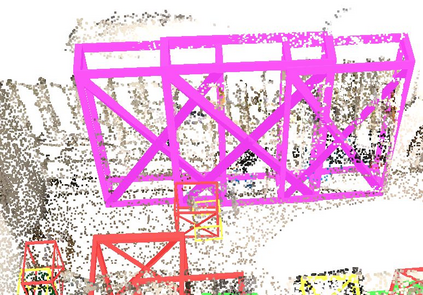

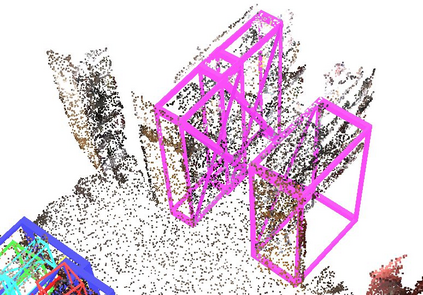

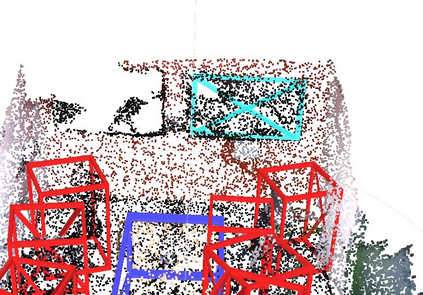

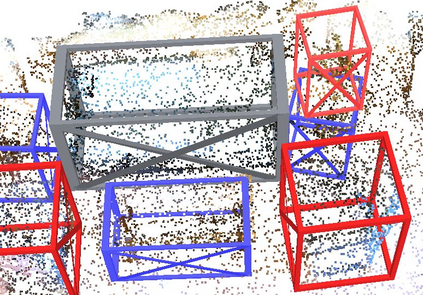

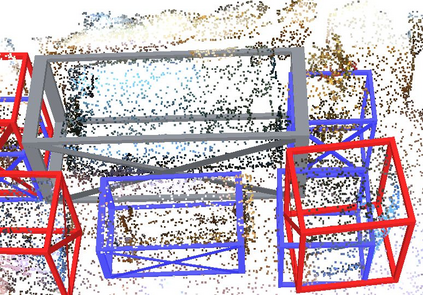

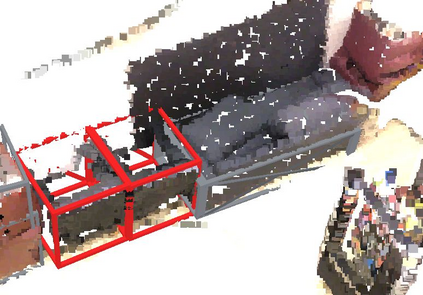

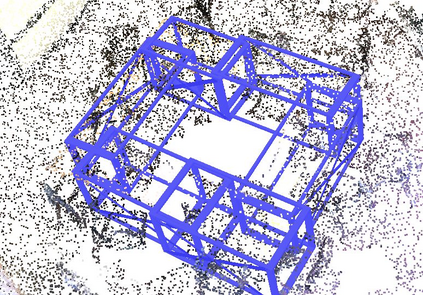

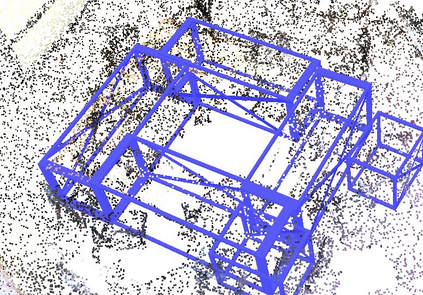

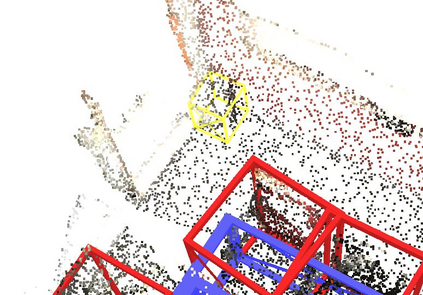

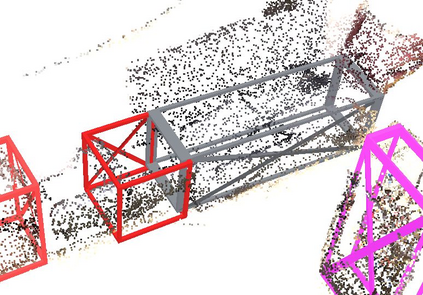

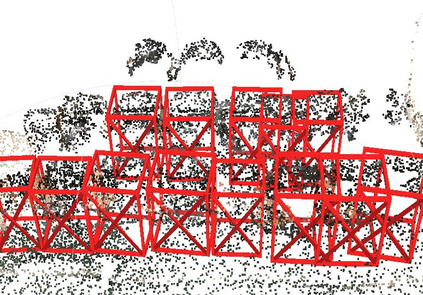

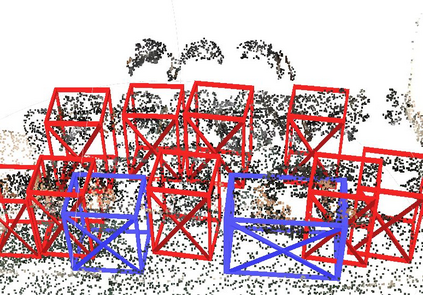

Rotation equivariance has recently become a highly desirable property in the 3D deep learning community. Yet most existing methods focus on equivariance with respect to a global rotation of the input, ignoring the fact that rotation symmetry has its own spatial support. Specifically, we consider the object detection problem in 3D scenes, where an object's bounding box should be equivariant with respect to the object's pose, independent of the scene motion. This suggests a new desired property that we call object-level rotation equivariance. To incorporate object-level rotation equivariance into 3D object detectors, we need a mechanism that extracts equivariant features with local, object-level spatial support while still modeling cross-object context information. To this end, we propose the Equivariant Object detection Network (EON), which uses a rotation equivariance suspension design to achieve object-level equivariance. EON can be applied to modern point cloud object detectors, such as VoteNet and PointRCNN, enabling them to exploit object rotation symmetry in scene-scale inputs. Our experiments on both indoor scene and autonomous driving datasets show that plugging the EON design into existing state-of-the-art 3D object detectors yields significant improvements.
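To make the notion of object-level rotation equivariance concrete, the following is a minimal NumPy sketch, not the EON architecture. It assumes each object's points are already known, and it uses a hypothetical `toy_box` function (centroid plus PCA heading) as a stand-in for a detector head. The object names, sizes, and the angle `theta` are illustrative assumptions. The sketch rotates one object about its own center and checks that its predicted heading rotates by the same angle while the other object's box is unaffected.

```python
import numpy as np

def rot_z(theta):
    """Rotation matrix about the vertical (z) axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def toy_box(points):
    """Hypothetical stand-in for a detector head (an assumption, not EON).

    Returns the centroid and a heading angle in [0, pi), taken from the
    principal axis of the points projected onto the xy-plane.
    """
    center = points.mean(axis=0)
    xy = points[:, :2] - center[:2]
    _, eigvecs = np.linalg.eigh(xy.T @ xy)   # eigenvalues in ascending order
    major = eigvecs[:, -1]                   # axis of largest spread
    heading = np.arctan2(major[1], major[0]) % np.pi
    return center, heading

def rotate_about_center(points, theta):
    """Rotate one object about its own centroid (object-level rotation)."""
    center = points.mean(axis=0)
    return (points - center) @ rot_z(theta).T + center

def heading_gap(a, b):
    """Distance between two headings that are only defined modulo pi."""
    d = (a - b) % np.pi
    return min(d, np.pi - d)

# Two synthetic, elongated "objects" at different scene locations.
rng = np.random.default_rng(0)
chair = rng.normal(size=(500, 3)) * [2.0, 0.5, 0.3] + [1.0, 2.0, 0.0]
table = rng.normal(size=(500, 3)) * [1.5, 0.4, 0.3] + [-3.0, 0.5, 0.0]

theta = 0.7  # illustrative rotation applied to the chair only
chair_rot = rotate_about_center(chair, theta)

c0, h0 = toy_box(chair)
c1, h1 = toy_box(chair_rot)
table_center, table_heading = toy_box(table)  # untouched by the chair's rotation

center_shift = np.linalg.norm(c1 - c0)        # should be ~0
heading_error = heading_gap(h1, h0 + theta)   # should be ~0
print(center_shift, heading_error)
```

A global-equivariance check would instead rotate the whole scene at once. The point of the object-level property is that each box tracks its own object's pose, which is why the rotation above is applied to a single object about its own center.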
