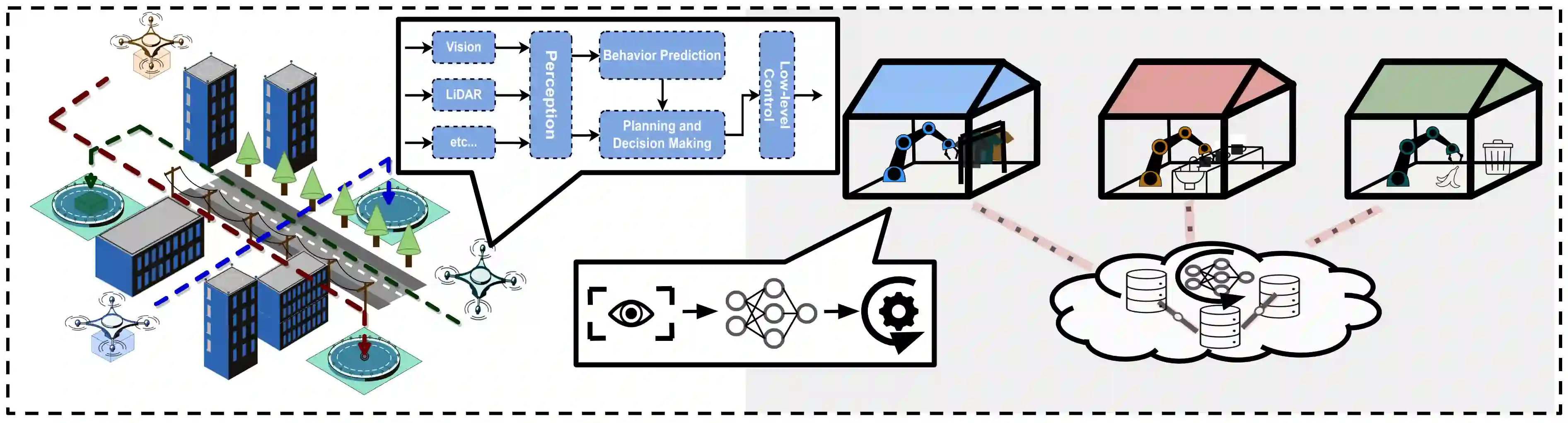

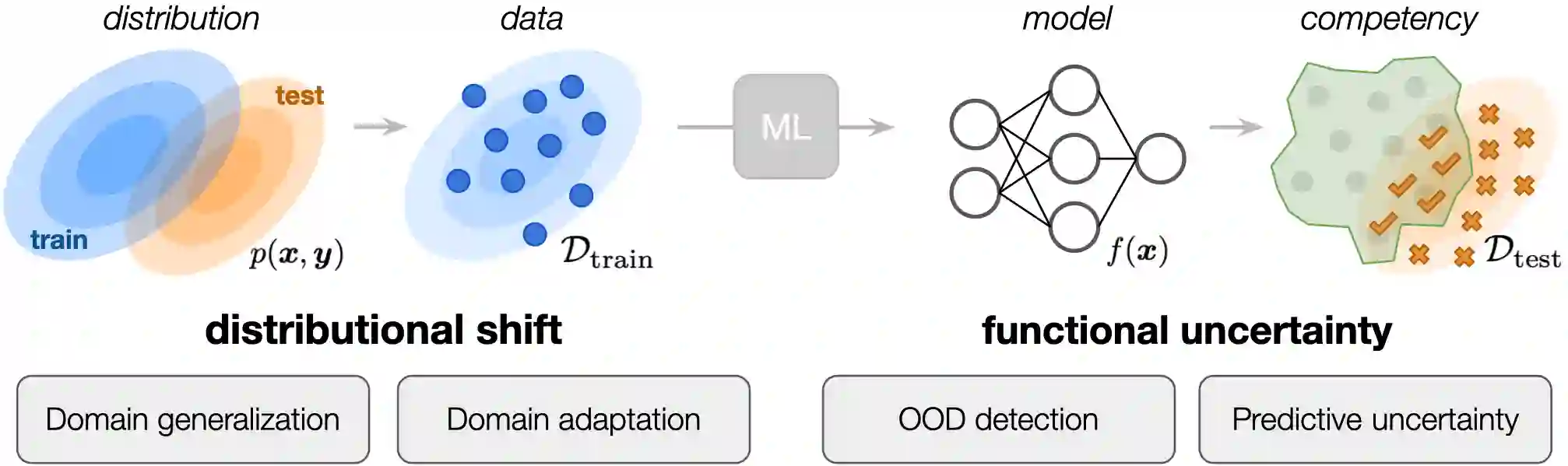

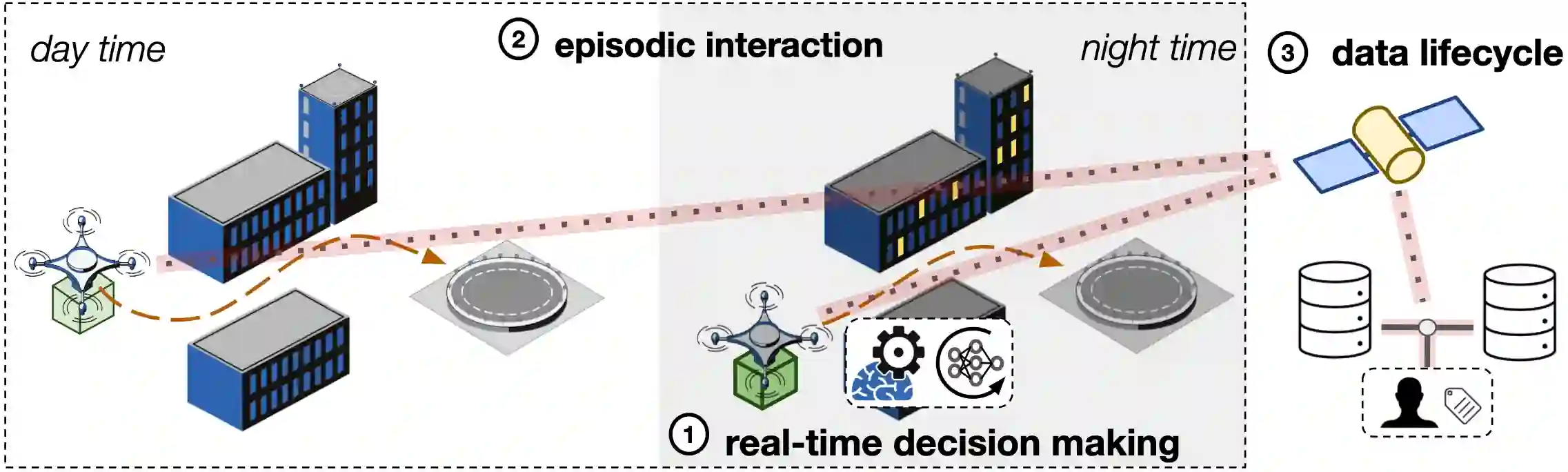

When testing conditions differ from those represented in training data, so-called out-of-distribution (OOD) inputs can mar the reliability of black-box learned components in the modern robot autonomy stack. Therefore, coping with OOD data is an important challenge on the path towards trustworthy learning-enabled open-world autonomy. In this paper, we aim to demystify the topic of OOD data and its associated challenges in the context of data-driven robotic systems, drawing connections to emerging paradigms in the ML community that study the effect of OOD data on learned models in isolation. We argue that as roboticists, we should reason about the overall system-level competence of a robot as it performs tasks in OOD conditions. We highlight key research questions around this system-level view of OOD problems to guide future research toward safe and reliable learning-enabled autonomy.
