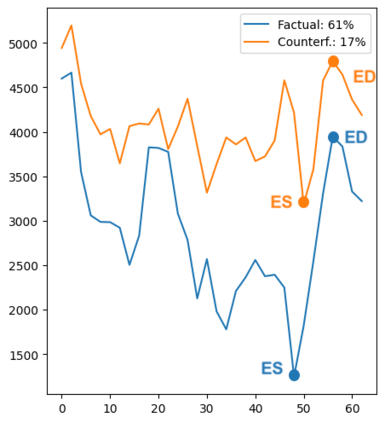

Causally-enabled machine learning frameworks could help clinicians identify the best course of treatment by answering counterfactual questions. We explore this path for echocardiograms by studying variation in the Left Ventricle Ejection Fraction, the most essential clinical metric obtained from these examinations. We combine deep neural networks, twin causal networks and generative adversarial methods for the first time to build D'ARTAGNAN (Deep ARtificial Twin-Architecture GeNerAtive Networks), a novel causal generative model. We demonstrate the soundness of our approach on a synthetic dataset before applying it to cardiac ultrasound videos to answer the question: "What would this echocardiogram look like if the patient had a different ejection fraction?" To do so, we generate new ultrasound videos that retain the video style and anatomy of the original patient while modifying the ejection fraction according to a given input value. We achieve an SSIM score of 0.79 and an R² score of 0.51 on the counterfactual videos. Code and models are available at: https://github.com/HReynaud/dartagnan.
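As a reading aid for the two quantities mentioned above, the following minimal sketch shows the standard volumetric definition of ejection fraction and the usual coefficient of determination (R²). It assumes that R² compares the ejection fraction requested as input with the one measured on the generated video; the abstract does not spell out the exact protocol, and the helper names `ejection_fraction` and `r2_score` are hypothetical, not taken from the released code.

```python
def ejection_fraction(edv, esv):
    """Ejection fraction in percent from end-diastolic (edv) and
    end-systolic (esv) left-ventricle volumes, in the same unit."""
    if edv <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv - esv) / edv


def r2_score(targets, predictions):
    """Coefficient of determination: 1 - SS_res / SS_tot.

    Assumption: `targets` are the requested ejection fractions and
    `predictions` are the values measured on the counterfactual videos.
    """
    if len(targets) != len(predictions) or not targets:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_target = sum(targets) / len(targets)
    ss_res = sum((t - p) ** 2 for t, p in zip(targets, predictions))
    ss_tot = sum((t - mean_target) ** 2 for t in targets)
    if ss_tot == 0:
        raise ValueError("targets must not all be identical")
    return 1.0 - ss_res / ss_tot
```

Under this definition, an R² of 1 would mean every generated video shows exactly the requested ejection fraction, while an R² of 0 would be no better than always producing the mean target value.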
