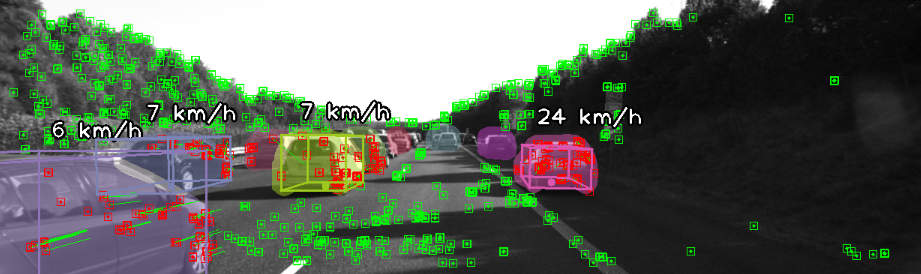

Visual SLAM algorithms commonly assume a rigid scene, which limits their applicability in populated real-world environments. Furthermore, most scenarios, including autonomous driving, multi-robot collaboration, and augmented/virtual reality, require explicit motion information about the surroundings to support decision making and scene understanding. In this paper we present DynaSLAM II, a visual SLAM system for stereo and RGB-D configurations that tightly integrates multi-object tracking. DynaSLAM II uses instance semantic segmentation and ORB features to track dynamic objects. The structure of the static scene and of the dynamic objects is optimized jointly with the trajectories of both the camera and the moving agents within a novel bundle adjustment formulation. The 3D bounding boxes of the objects are also estimated and loosely optimized within a fixed temporal window. We demonstrate that tracking dynamic objects not only provides rich cues for scene understanding but also benefits camera tracking. The project code will be released upon acceptance.
