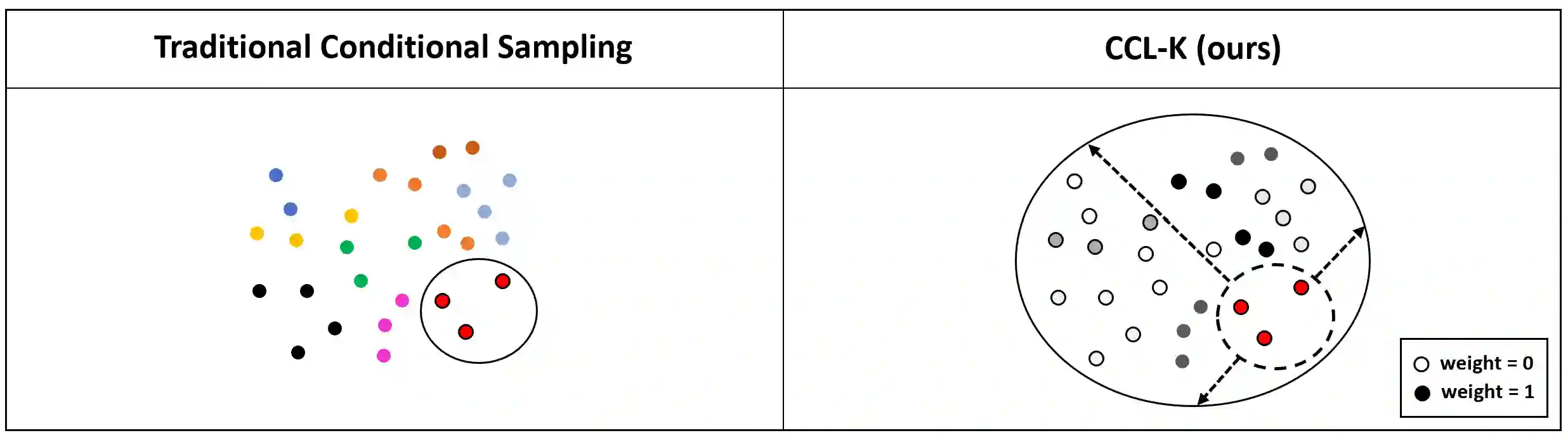

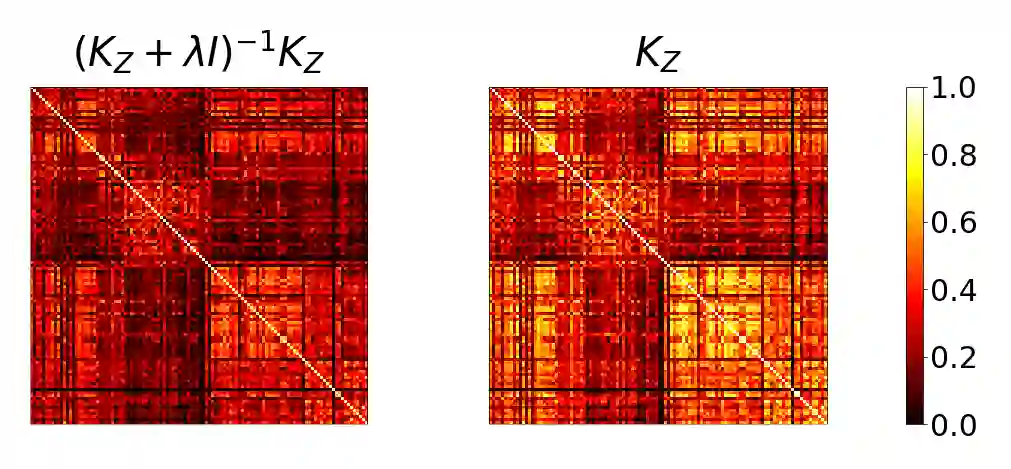

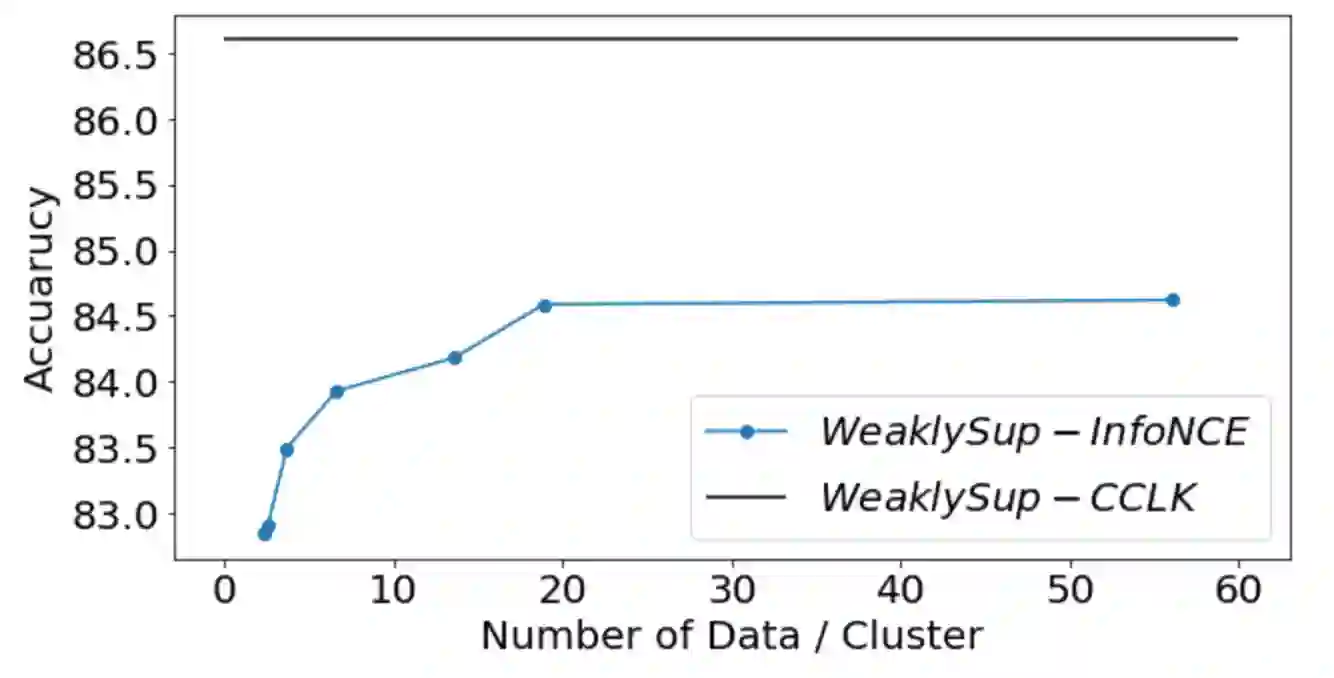

Conditional contrastive learning frameworks consider a conditional sampling procedure that constructs positive or negative data pairs conditioned on specific variables. Fair contrastive learning, for example, constructs negative pairs from the same gender (conditioning on sensitive information), which in turn reduces undesirable information in the learned representations; weakly supervised contrastive learning constructs positive pairs with similar annotative attributes (conditioning on auxiliary information), so that this auxiliary information is incorporated into the representations. Although conditional contrastive learning enables many applications, the conditional sampling procedure can be challenging if we cannot obtain sufficient data pairs for some values of the conditioning variable. This paper presents Conditional Contrastive Learning with Kernel (CCL-K), which converts existing conditional contrastive objectives into alternative forms that mitigate the insufficient data problem. Instead of sampling data according to the value of the conditioning variable, CCL-K uses the Kernel Conditional Embedding Operator, which samples from all available data and assigns a weight to each sample based on the kernel similarity between the values of the conditioning variable. We conduct experiments using weakly supervised, fair, and hard-negative contrastive learning, showing that CCL-K outperforms state-of-the-art baselines.
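
The weighting idea (use every sample, weighted by kernel similarity of conditioning values, instead of sampling only pairs with an exact match) can be illustrated with a minimal NumPy sketch. It assumes a Gaussian (RBF) kernel on the conditioning variable and the standard regularized empirical estimator $(K_Z + \lambda I)^{-1} K_Z$ used for kernel conditional mean embeddings. The function names and the parameters `lam`, `bandwidth`, and `temperature` are hypothetical choices for illustration; this is not the authors' CCL-K implementation or its exact objective.

```python
import numpy as np


def rbf_gram(z: np.ndarray, bandwidth: float = 1.0) -> np.ndarray:
    """Gaussian (RBF) Gram matrix over conditioning values: K[i, j] = exp(-||z_i - z_j||^2 / (2 h^2))."""
    sq_dists = np.sum((z[:, None, :] - z[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * bandwidth ** 2))


def kernel_conditional_weights(z: np.ndarray, lam: float = 0.1, bandwidth: float = 1.0) -> np.ndarray:
    """Weight matrix W = (K + lam * I)^{-1} K (assumed regularized estimator).

    Row i spreads weight over *all* samples j according to how similar z_j is
    to z_i, so no sample has to share z_i's value exactly. Because K is
    symmetric, (K + lam I)^{-1} K equals K (K + lam I)^{-1}.
    """
    k = rbf_gram(z, bandwidth)
    n = k.shape[0]
    # Solve a linear system rather than forming the explicit inverse.
    return np.linalg.solve(k + lam * np.eye(n), k)


def weighted_positive_scores(x: np.ndarray, y: np.ndarray, w: np.ndarray,
                             temperature: float = 0.1) -> np.ndarray:
    """Replace 'sample one positive y with the same z' by a kernel-weighted sum over all y_j."""
    x = x / np.linalg.norm(x, axis=1, keepdims=True)  # L2-normalize anchors
    y = y / np.linalg.norm(y, axis=1, keepdims=True)  # L2-normalize candidates
    scores = np.exp(x @ y.T / temperature)            # scores[i, j] = exp(cos(x_i, y_j) / t)
    return np.sum(w * scores, axis=1)                 # one weighted positive score per anchor


# Hypothetical example: samples 0 and 1 share z = 0, sample 2 has z = 10.
z = np.array([[0.0], [0.0], [10.0]])
w = kernel_conditional_weights(z, lam=0.1)
print(np.round(w, 3))
```

In this example, rows 0 and 1 each split their weight equally (1/2.1, about 0.476) between samples 0 and 1 and give sample 2 essentially zero weight, while row 2 puts about 1/1.1 on itself. The ridge term `lam` keeps the solve well-conditioned even though the Gram matrix here is singular (two identical conditioning values), which is the situation where exact-match conditional sampling would otherwise depend on having enough duplicates.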

翻译:有条件对比学习框架考虑到以具体变量为条件的、建立正对或负数据配对的有条件抽样程序; 对比学习法以同一性别(对敏感信息进行调控)建立负对(对敏感信息进行调控),这反过来减少了从所学的表述中获取的不良信息; 监督不力的对比学习制成具有类似说明属性(对辅助信息进行调控)的正面对(对辅助信息进行调控),而这反过来又被纳入表述中。 虽然有条件对比学习法允许许多应用,但如果我们不能为调控变量的某些值获得足够的数据配对,则有条件采样程序可能具有挑战性。 本文展示了与内核的有条件对立学习( CEL-K), 将现有的有条件对比目标转换为减轻数据不足问题的替代形式。 CCL-K 使用根据调控变量的价值进行抽样的数据, 使用从所有可用数据中提取数据的 Kernel Contrational 嵌入的操作者, 给每个抽样数据分配权重,因为调控变值之间有相似的内脏。 我们用薄弱、 公平、硬反向对比基线进行实验, 显示CCL-KFRAD-FSD-FSD-FSD-FD-SD-SD-SD-SD-S-SD-SD-SD-SD-SD-SD-S-S-S-SD-S-S-S-S-S-S-S-S-S-S-S-SD-SD-SD-SD-SD-SD-SD-SD-SD-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-C-K-C-C-C-K-C-C-K-K-C-C-C-K-C-C-C-C-C-C-C-K-C-C-C-C-C-K-C-C-C-C-C-C-C-C-C-C-C-C-C-C-K