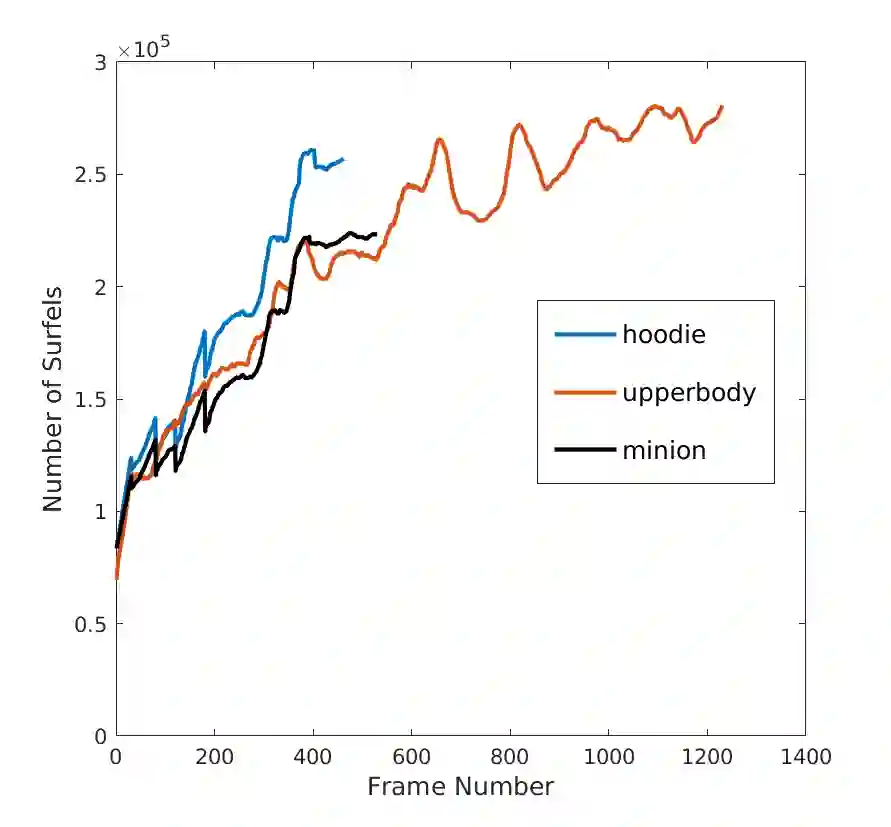

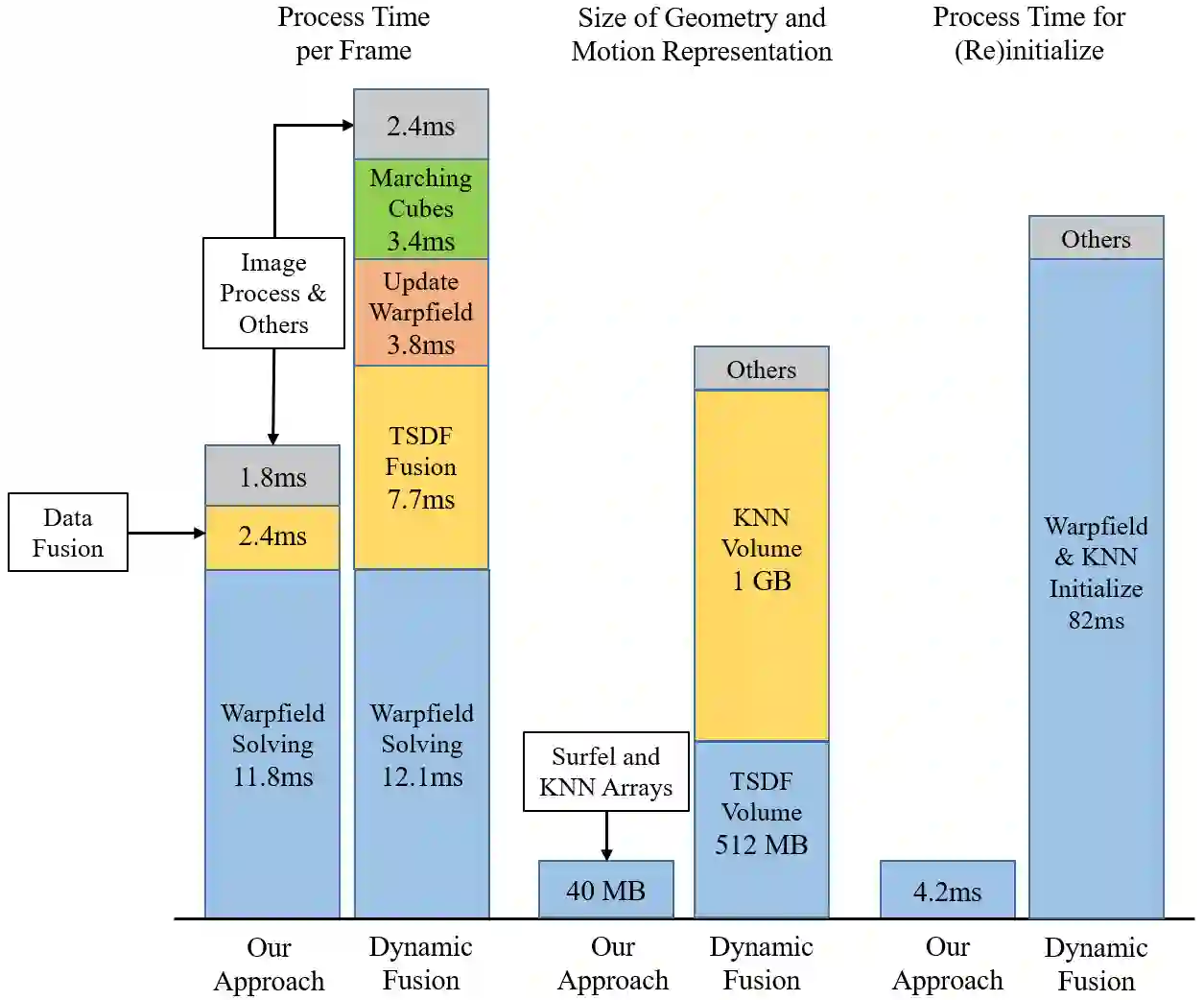

We contribute a dense SLAM system that takes a live stream of depth images as input and reconstructs non-rigidly deforming scenes in real time, without templates or prior models. In contrast to existing approaches, we do not maintain any volumetric data structures, such as truncated signed distance function (TSDF) fields or deformation fields, which are costly in both computation and memory. Our system works with a flat, point-based (surfel) representation of geometry, which can be acquired directly from commodity depth sensors. Standard graphics pipelines and general-purpose GPU (GPGPU) computing are leveraged for all central operations: nearest-neighbor maintenance, non-rigid deformation field estimation, and fusion of depth measurements. Our pipeline inherently avoids expensive volumetric operations such as marching cubes, volumetric fusion, and dense deformation field updates, leading to significantly improved performance. Furthermore, the explicit and flexible surfel-based geometry representation enables efficient handling of topology changes and tracking failures, which keeps our reconstructions consistent with updated depth observations. Our system allows robots to maintain a scene description that includes non-rigidly deforming objects, which potentially enables interaction with dynamic working environments.

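To make the idea of fusing depth measurements into a flat surfel representation more concrete, the following is a minimal sketch of a generic confidence-weighted surfel update of the kind commonly used in point-based fusion. It is not taken from the system described above: the `Surfel` fields, the `fuse` function, and the weighting scheme are all illustrative assumptions, and data association and non-rigid warping are assumed to have already happened.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Surfel:
    """Hypothetical surfel record: an oriented disc with a confidence weight."""
    position: Vec3
    normal: Vec3       # expected to be unit length
    radius: float
    confidence: float  # accumulated weight of all fused observations


def _normalize(v: Vec3, fallback: Vec3) -> Vec3:
    """Return v scaled to unit length, or fallback if v is (near) zero."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm < 1e-12:
        return fallback
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def fuse(model: Surfel, obs: Surfel) -> Surfel:
    """Blend an observed surfel into a model surfel by confidence weight.

    Assumes obs has already been associated with model and expressed in
    the same coordinate frame (both steps are outside this sketch).
    """
    w_m, w_o = model.confidence, obs.confidence
    total = w_m + w_o
    if total <= 0.0:
        raise ValueError("combined confidence must be positive")

    def blend(a: Vec3, b: Vec3) -> Vec3:
        return (
            (w_m * a[0] + w_o * b[0]) / total,
            (w_m * a[1] + w_o * b[1]) / total,
            (w_m * a[2] + w_o * b[2]) / total,
        )

    return Surfel(
        position=blend(model.position, obs.position),
        # Re-normalize the averaged normal; keep the model normal if the
        # weighted average degenerates (e.g. opposite normals cancel out).
        normal=_normalize(blend(model.normal, obs.normal), model.normal),
        radius=(w_m * model.radius + w_o * obs.radius) / total,
        confidence=total,
    )


if __name__ == "__main__":
    model = Surfel((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), 0.01, 3.0)
    obs = Surfel((0.0, 0.0, 2.0), (0.0, 0.0, 1.0), 0.02, 1.0)
    print(fuse(model, obs))
```

Because each surfel is updated independently of its neighbors, an update of this shape maps naturally onto one GPU thread per surfel, which is one reason a flat point-based representation can avoid the cost of volumetric grids.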