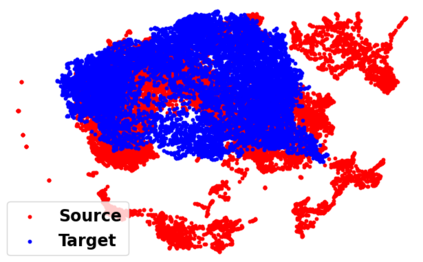

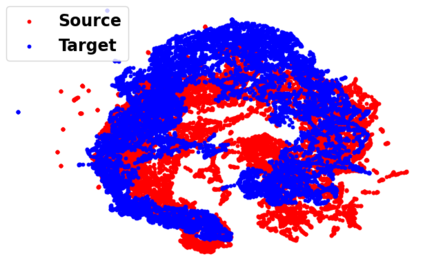

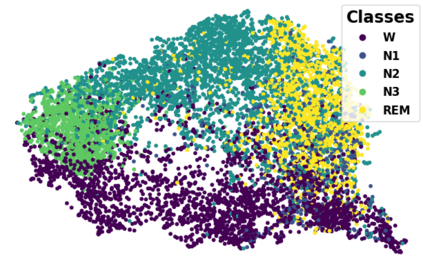

Sleep staging is of great importance in the diagnosis and treatment of sleep disorders. Recently, numerous data-driven deep learning models have been proposed for automatic sleep staging. They mainly rely on the assumption that training and testing data are drawn from the same distribution, which may not hold in real-world scenarios. Unsupervised domain adaptation (UDA) has recently been developed to handle this domain shift problem. However, previous UDA methods applied to sleep staging have two main limitations. First, they rely on a fully shared model for domain alignment, which may lose domain-specific information during feature extraction. Second, they align the source and target distributions only globally, without considering class information in the target domain, which hinders the classification performance of the model. In this work, we propose a novel adversarial learning framework to tackle the domain shift problem in the unlabeled target domain. First, we develop unshared attention mechanisms to preserve domain-specific features in the source and target domains. Second, we design a self-training strategy that aligns the fine-grained class distributions of the source and target domains via target-domain pseudo labels. We also propose dual distinct classifiers to increase the robustness and quality of the pseudo labels. Experimental results on six cross-domain scenarios validate the efficacy of the proposed framework for sleep staging and its advantage over state-of-the-art UDA methods.
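The pseudo-labeling idea can be illustrated with a minimal sketch. The abstract does not specify how the two classifiers are combined, so everything here is an assumption made for illustration: the function name `select_pseudo_labels`, the agreement rule, the averaging of probabilities, and the `threshold` value are all hypothetical and are not the authors' stated method. The sketch keeps a target-domain sample only when both classifiers predict the same sleep stage and their averaged confidence is high.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with max-subtraction for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def select_pseudo_labels(logits_a, logits_b, threshold=0.9):
    """Hypothetical pseudo-label filter for two classifiers.

    logits_a, logits_b: arrays of shape (n_samples, n_classes) produced by
    two distinct classifiers on unlabeled target-domain epochs.
    Returns (labels, mask): the argmax of the averaged probabilities and a
    boolean mask marking samples where both classifiers agree and the
    averaged confidence reaches `threshold` (an assumed value).
    """
    probs = 0.5 * (softmax(logits_a) + softmax(logits_b))
    labels = probs.argmax(axis=1)
    agree = logits_a.argmax(axis=1) == logits_b.argmax(axis=1)
    confident = probs.max(axis=1) >= threshold
    return labels, agree & confident


# Toy example: 3 target epochs, 5 sleep stages (W, N1, N2, N3, REM).
logits_a = np.array([[8.0, 0.0, 0.0, 0.0, 0.0],
                     [0.0, 5.0, 0.0, 0.0, 0.0],
                     [0.1, 0.0, 0.0, 0.0, 0.0]])
logits_b = np.array([[7.0, 0.0, 0.0, 0.0, 0.0],
                     [0.0, 0.0, 5.0, 0.0, 0.0],
                     [0.2, 0.0, 0.0, 0.0, 0.0]])
labels, mask = select_pseudo_labels(logits_a, logits_b)
```

In this toy input, only the first epoch passes the filter: the second is rejected because the classifiers disagree, and the third because neither is confident. Under this assumed scheme, only the retained samples would contribute to class-wise alignment in the target domain.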
