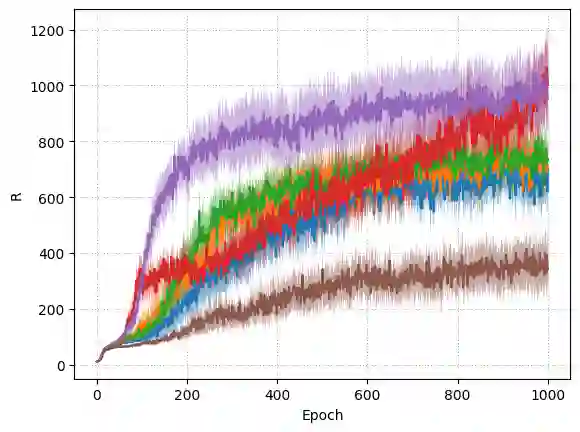

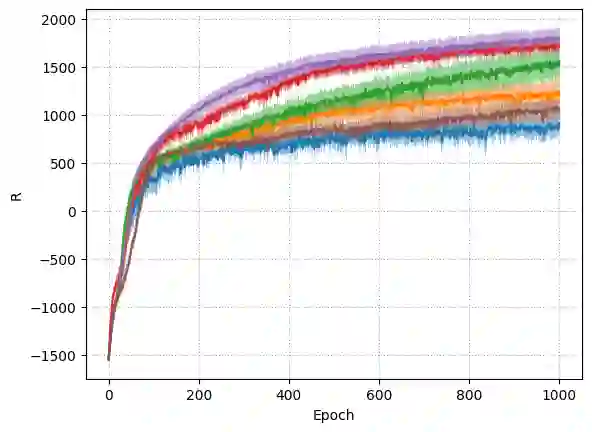

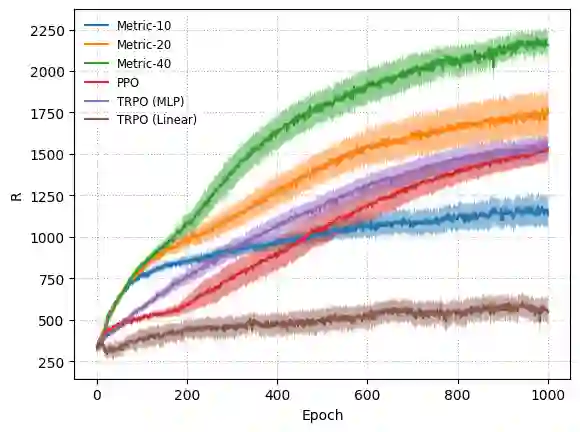

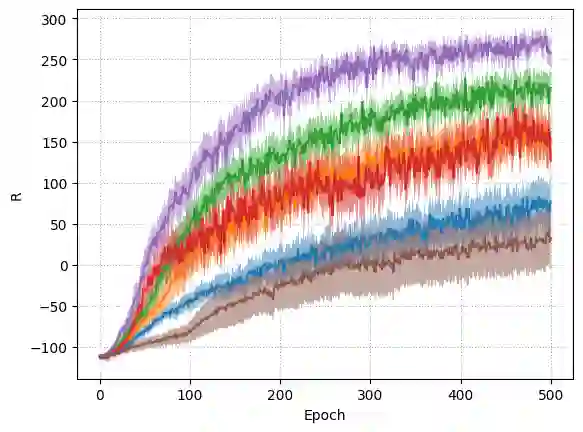

Reinforcement learning (RL) has demonstrated its ability to solve high-dimensional tasks by leveraging non-linear function approximators. However, these successes have mostly been achieved by 'black-box' policies in simulated domains. Deploying RL in the real world raises several concerns about relying on a 'black-box' policy. To make the learned policies more transparent, we propose in this paper a policy iteration scheme that retains a complex function approximator for its internal value predictions but constrains the policy to a concise, hierarchical, and human-readable structure based on a mixture of interpretable experts. Each expert selects a primitive action according to a distance between the current state and a prototypical state. A key design decision that keeps these experts interpretable is to select the prototypical states from trajectory data. The main technical contribution of the paper is to address the challenges introduced by this non-differentiable prototypical state selection procedure. Experimentally, we show that the proposed algorithm learns compelling policies on continuous-action deep RL benchmarks, matching the performance of neural-network-based policies while returning policies that are more amenable to human inspection than neural network or linear-in-feature policies.
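The idea of experts tied to prototypical states can be illustrated with a minimal sketch. This is not the paper's algorithm: the softmax over negative squared Euclidean distances, the `temperature` parameter, the weighted-average action, and all names (`expert_weights`, `act`, `prototype_idx`) are assumptions made for illustration, and the data is random. The only elements taken from the abstract are that each expert is tied to a prototypical state, that prototypes are picked from trajectory data, and that the choice of expert depends on a distance to the prototype.

```python
import numpy as np


def expert_weights(state, prototypes, temperature=1.0):
    """Mixture weights over experts (assumed form: softmax of -distance^2)."""
    # Squared Euclidean distance from the state to each prototypical state.
    d2 = np.sum((prototypes - state) ** 2, axis=1)
    logits = -d2 / temperature
    logits = logits - logits.max()  # shift for numerical stability
    w = np.exp(logits)
    return w / w.sum()


def act(state, prototypes, actions, temperature=1.0):
    """Return the weighted-average primitive action and the expert weights."""
    w = expert_weights(state, prototypes, temperature)
    return w @ actions, w


# Hypothetical trajectory data: 50 visited states of dimension 3.
rng = np.random.default_rng(0)
trajectory_states = rng.normal(size=(50, 3))

# Prototypes are rows of the trajectory data, so each one is a state that
# was actually visited and can be shown to a human. Choosing these indices
# is the discrete, non-differentiable step; here they are fixed by hand.
prototype_idx = np.array([4, 17, 31])
prototypes = trajectory_states[prototype_idx]

# One primitive action per expert (1-D continuous action, assumed values).
actions = np.array([[-1.0], [0.0], [1.0]])

# Querying exactly at the second prototype should favour the second expert.
query = prototypes[1].copy()
action, weights = act(query, prototypes, actions, temperature=0.01)
closest = int(np.argmax(weights))
```

Because the prototypes are indexed out of `trajectory_states` rather than learned as free vectors, the resulting policy can be read as "near visited state 17, take action 0.0", which is the kind of inspection the abstract describes. The index selection itself has no gradient, which is why the abstract singles it out as the main technical challenge.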

