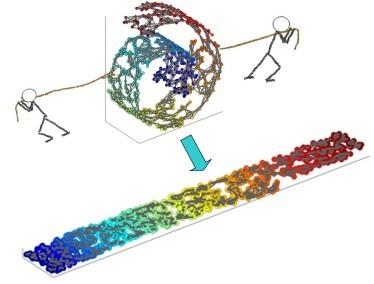

This is a tutorial and survey paper on Locally Linear Embedding (LLE) and its variants. The idea of LLE is to fit the local structure of the manifold in the embedding space. In this paper, we first cover LLE, kernel LLE, inverse LLE, and feature fusion with LLE. Then, we cover out-of-sample embedding using linear reconstruction, eigenfunctions, and kernel mapping. Incremental LLE is explained for embedding streaming data. Landmark LLE methods using the Nyström approximation and locally linear landmarks are explained for big data embedding. We introduce methods for selecting the number of neighbors using residual variance, Procrustes statistics, preservation neighborhood error, and local neighborhood selection. Afterwards, Supervised LLE (SLLE), enhanced SLLE, SLLE projection, probabilistic SLLE, supervised guided LLE (using the Hilbert-Schmidt independence criterion), and semi-supervised LLE are explained for supervised and semi-supervised embedding. Robust LLE methods based on a least squares problem and penalty functions are also introduced for embedding in the presence of outliers and noise. Then, we introduce the fusion of LLE with other manifold learning methods, including Isomap (i.e., ISOLLE), principal component analysis, Fisher discriminant analysis, discriminant LLE, and Isotop. Finally, we explain weighted LLE, in which the distances, the reconstruction weights, or the embeddings are adjusted for better embedding; we cover weighted LLE for deformed distributed data, weighted LLE using probability of occurrence, SLLE by adjusting weights, modified LLE, and iterative LLE.
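
To make the core idea concrete, the following is a minimal sketch of basic LLE (not any of the variants listed above), written with NumPy only. It finds the nearest neighbors of each point, solves for the weights that best reconstruct the point from those neighbors, and then looks for a low-dimensional embedding that preserves the same weights. The function name `lle`, its parameters, and the trace-scaled regularization term `reg` are illustrative assumptions rather than notation taken from the paper.

```python
import numpy as np


def lle(X, n_neighbors=10, n_components=2, reg=1e-3):
    """Illustrative sketch of basic LLE; names and defaults are assumptions."""
    n = X.shape[0]

    # Step 1: k nearest neighbors from pairwise squared Euclidean distances.
    sq = np.sum(X ** 2, axis=1)
    D = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    np.fill_diagonal(D, np.inf)  # a point is not its own neighbor
    nbrs = np.argsort(D, axis=1)[:, :n_neighbors]

    # Step 2: reconstruction weights that sum to one for every point.
    W = np.zeros((n, n))
    ones = np.ones(n_neighbors)
    for i in range(n):
        Z = X[nbrs[i]] - X[i]          # neighbors centered on x_i
        G = Z @ Z.T                    # local Gram matrix (k x k)
        trace = np.trace(G)
        scale = trace if trace > 0 else 1.0
        G = G + reg * scale * np.eye(n_neighbors)  # keeps G invertible
        w = np.linalg.solve(G, ones)
        W[i, nbrs[i]] = w / w.sum()

    # Step 3: embedding from the bottom eigenvectors of M = (I - W)^T (I - W).
    I_W = np.eye(n) - W
    M = I_W.T @ I_W
    _, eigvecs = np.linalg.eigh(M)     # eigenvalues in ascending order
    # Skip the first eigenvector (constant, eigenvalue close to zero).
    Y = eigvecs[:, 1:n_components + 1]
    return Y, W
```

Calling `lle(X, n_neighbors=10, n_components=2)` on an `(n, d)` array returns an `(n, 2)` embedding together with the weight matrix. The dense `n x n` matrices keep the sketch short but limit it to small datasets, which is the kind of scaling problem the landmark methods mentioned above are meant to address.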
