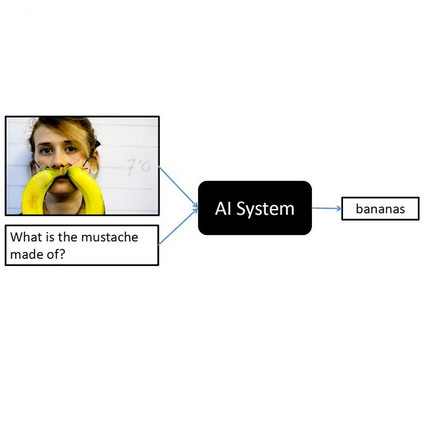

Advances in GPT-based large language models (LLMs) are revolutionizing natural language processing and rapidly expanding its use across many domains. Using unidirectional attention, these autoregressive LLMs can generate long, coherent paragraphs. However, visual question answering (VQA) tasks require both vision and language processing, so models with bidirectional attention or with fusion techniques are often used to capture the context of multiple modalities at once. Because GPT does not natively process vision tokens, and to exploit the advances in GPT models for VQA in robotic surgery, we design an end-to-end trainable Language-Vision GPT (LV-GPT) model that extends the GPT2 model to accept vision input (an image). The proposed LV-GPT incorporates a feature extractor (vision tokenizer) and vision token embedding (token type and pose). Given the limitations of unidirectional attention in GPT models and their ability to generate coherent long paragraphs, we deliberately place the word tokens before the vision tokens, mimicking the human thought process of first understanding the question and then inferring an answer from the image. Quantitatively, we show that the LV-GPT model outperforms other state-of-the-art VQA models on two publicly available surgical-VQA datasets (based on the Endoscopic Vision Challenge Robotic Scene Segmentation 2018 and CholecTriplet2021) and on our newly annotated dataset (based on the holistic surgical scene dataset). We further annotate all three datasets with question-type labels to allow sub-type analysis. Finally, we extensively study and present the effects of token sequencing, token type, and pose embedding for vision tokens in the LV-GPT model.
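The token sequencing described above can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the function names `build_lv_inputs` and `causal_mask`, the type ids `TEXT_TYPE` and `VISION_TYPE`, and the `zero_vision_pos` switch are all hypothetical and introduced only for illustration. The sketch lays out a sequence with question word tokens first and vision tokens second, assigns each token a type id and a position id, and builds the unidirectional (causal) mask under which every vision token can attend to the whole question.

```python
from typing import Dict, List

# Hypothetical token-type ids, chosen only for this illustration.
TEXT_TYPE, VISION_TYPE = 0, 1


def build_lv_inputs(num_word_tokens: int, num_vision_tokens: int,
                    zero_vision_pos: bool = True) -> Dict[str, List[int]]:
    """Lay out a [question words | vision tokens] sequence.

    Returns token-type ids and position ids of equal length.
    """
    n_w, n_v = num_word_tokens, num_vision_tokens
    token_type_ids = [TEXT_TYPE] * n_w + [VISION_TYPE] * n_v
    word_pos = list(range(n_w))
    # Assumption: vision tokens either all share position 0 or continue
    # the running position count; which works better is an empirical question.
    vision_pos = [0] * n_v if zero_vision_pos else list(range(n_w, n_w + n_v))
    return {"token_type_ids": token_type_ids,
            "position_ids": word_pos + vision_pos}


def causal_mask(seq_len: int) -> List[List[int]]:
    """Unidirectional mask: position i may attend to positions j <= i."""
    return [[1 if j <= i else 0 for j in range(seq_len)]
            for i in range(seq_len)]


if __name__ == "__main__":
    inputs = build_lv_inputs(num_word_tokens=3, num_vision_tokens=2)
    print(inputs["token_type_ids"])
    print(inputs["position_ids"])
    for row in causal_mask(5):
        print(row)
```

With three word tokens and two vision tokens, the mask row for the first vision token (index 3) has ones in the three question columns, whereas the first word token cannot attend to any vision token. This asymmetry is why the ordering matters under unidirectional attention.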
