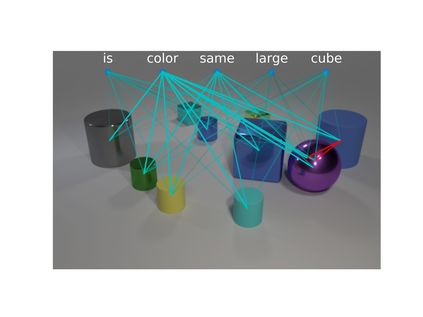

We present Language-binding Object Graph Network, the first neural reasoning method with dynamic relational structures across both visual and textual domains with applications in visual question answering. Relaxing the common assumption made by current models that the object predicates pre-exist and stay static, passive to the reasoning process, we propose that these dynamic predicates expand across the domain borders to include pair-wise visual-linguistic object binding. In our method, these contextualized object links are actively found within each recurrent reasoning step without relying on external predicative priors. These dynamic structures reflect the conditional dual-domain object dependency given the evolving context of the reasoning through co-attention. Such discovered dynamic graphs facilitate multi-step knowledge combination and refinements that iteratively deduce the compact representation of the final answer. The effectiveness of this model is demonstrated on image question answering demonstrating favorable performance on major VQA datasets. Our method outperforms other methods in sophisticated question-answering tasks wherein multiple object relations are involved. The graph structure effectively assists the progress of training, and therefore the network learns efficiently compared to other reasoning models.

翻译:我们提出具有语言约束力的物体图网,这是第一个具有视觉和文字领域动态关系结构的神经推理方法,在视觉和文字领域都有动态关系结构,在视觉问题解答中也有相应的应用。我们放松了当前模型的共同假设,即物体先存在后保持静态,对推理过程没有影响,我们建议这些动态上游扩展跨域边界,以包括双向视觉语言物体绑定。在我们的方法中,这些背景化物体链接在不依赖外部预言的每个经常性推理步骤中都得到了积极的发现。这些动态结构反映了有条件的双体物体依赖性,因为通过共同注意推理的背景在不断变化。这些发现的动态图有助于多步知识组合和完善,反复推导出最后答案的简明代表。这一模型的有效性表现在图像问题上,在显示主要VQA数据集的优异性表现。我们的方法在涉及多个对象关系的复杂解答任务中超越了其他方法。图形结构有效地协助了培训的进展,因此网络与其他推理模型相比学习效率。