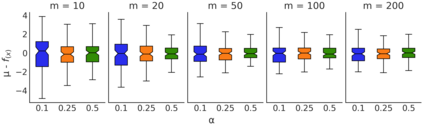

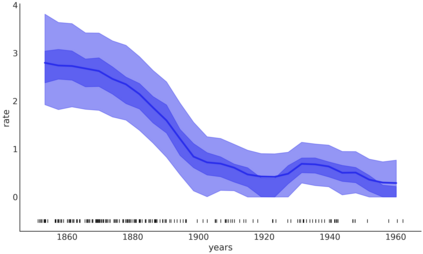

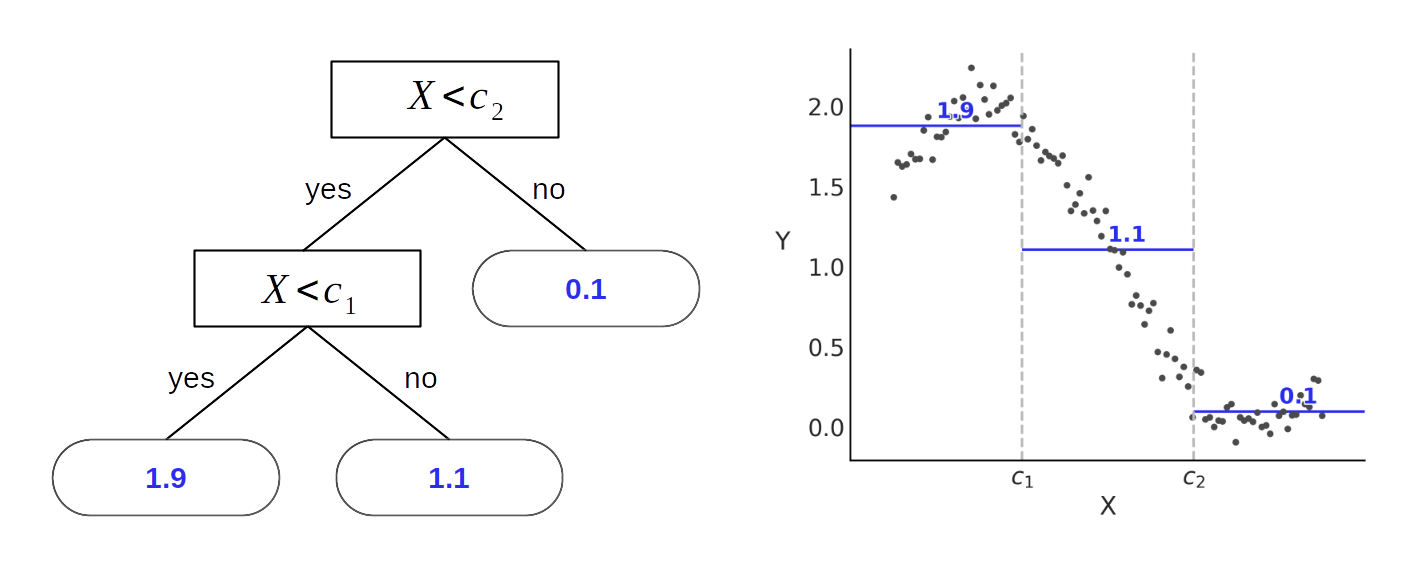

Bayesian additive regression trees (BART) is a non-parametric method for approximating functions. It is a black-box method based on a sum of many trees, in which priors regularize inference, mainly by restricting each tree's learning capacity so that no individual tree can explain the data on its own; only the sum of trees can. We discuss BART in the context of probabilistic programming languages (PPLs); that is, we present BART as a primitive that can be used as a component of a probabilistic model rather than as a standalone model. Specifically, we introduce the Python library PyMC-BART, which extends PyMC, a library for probabilistic programming. We present a few examples of models that can be built with PyMC-BART, discuss recommendations for selecting hyperparameters, and close with the limitations of our implementation and future directions for improvement.
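To make the regularization idea concrete, here is a minimal sketch of drawing a sum of trees from a BART-style prior over a single predictor in [0, 1). It assumes the priors of the original BART formulation: a node at depth d splits with probability alpha * (1 + d)^(-beta), and leaf values are Gaussian with standard deviation 0.5 / (k * sqrt(m)) for m trees. The abstract does not state these details, and PyMC-BART's internals may differ. All function names are hypothetical and are not part of the PyMC-BART API.

```python
import math
import random


def split_probability(depth, alpha=0.95, beta=2.0):
    """Prior probability that a node at `depth` (root = 0) is split.

    Assumed form: alpha * (1 + depth) ** (-beta), which makes deep trees unlikely.
    """
    return alpha * (1.0 + depth) ** (-beta)


def sample_tree(rng, leaf_sd, lo=0.0, hi=1.0, depth=0, max_depth=10):
    """Draw one tree from the prior as a nested dict; leaves are floats."""
    if depth < max_depth and rng.random() < split_probability(depth):
        threshold = rng.uniform(lo, hi)  # split point inside this node's region
        return {
            "threshold": threshold,
            "left": sample_tree(rng, leaf_sd, lo, threshold, depth + 1, max_depth),
            "right": sample_tree(rng, leaf_sd, threshold, hi, depth + 1, max_depth),
        }
    # Leaf value shrunk toward zero, so one tree contributes only a little.
    return rng.gauss(0.0, leaf_sd)


def predict_tree(tree, x):
    """Route x down the tree and return the leaf value it lands in."""
    while isinstance(tree, dict):
        tree = tree["left"] if x < tree["threshold"] else tree["right"]
    return tree


def tree_depth(tree):
    """Number of split levels in the tree (0 for a single leaf)."""
    if not isinstance(tree, dict):
        return 0
    return 1 + max(tree_depth(tree["left"]), tree_depth(tree["right"]))


def sample_sum_of_trees(m=50, k=2.0, seed=0):
    """Draw m independent trees; the leaf scale shrinks as m grows."""
    rng = random.Random(seed)
    leaf_sd = 0.5 / (k * math.sqrt(m))
    return [sample_tree(rng, leaf_sd) for _ in range(m)]


def predict(trees, x):
    """The BART function value is the sum of the individual tree predictions."""
    return sum(predict_tree(tree, x) for tree in trees)


if __name__ == "__main__":
    trees = sample_sum_of_trees()
    print("deepest tree:", max(tree_depth(t) for t in trees))
    print("f(0.3) under the prior:", predict(trees, 0.3))
```

Because the split probability decays with depth and the leaf scale decays with the number of trees, each tree is a weak learner by construction. This sketch only samples from the prior; fitting the trees to data requires a posterior sampler, which is not shown here.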
