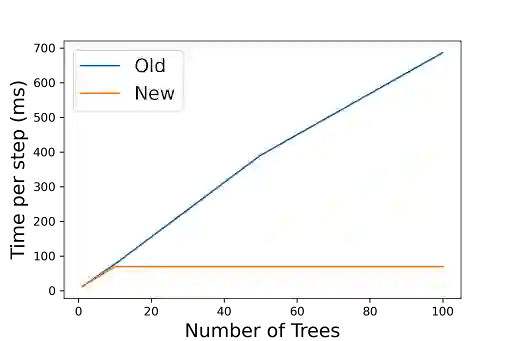

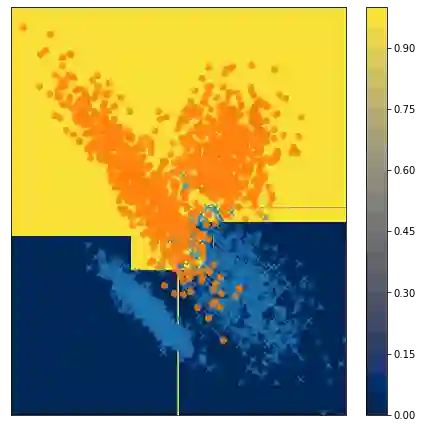

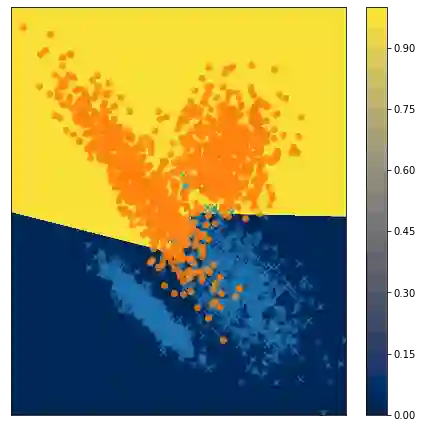

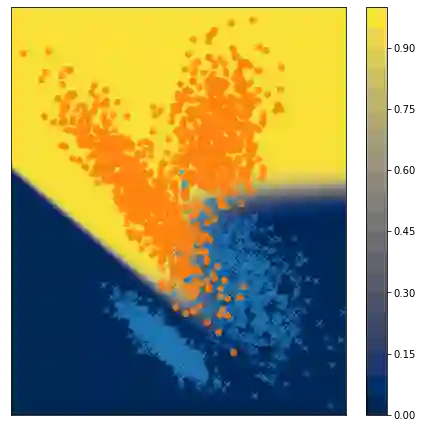

Decision tree ensembles are widely used and competitive learning models. Despite their success, popular toolkits for learning tree ensembles have limited modeling capabilities. For instance, these toolkits support a limited number of loss functions and are restricted to single-task learning. We propose a flexible framework for learning tree ensembles, which goes beyond existing toolkits to support arbitrary loss functions, missing responses, and multi-task learning. Our framework builds on differentiable (a.k.a. soft) tree ensembles, which can be trained using first-order methods. However, unlike classical trees, differentiable trees are difficult to scale. We therefore propose a novel tensor-based formulation of differentiable trees that allows for efficient vectorization on GPUs. We perform experiments on a collection of 28 real open-source and proprietary datasets, which demonstrate that our framework can lead to 100x more compact and 23% more expressive tree ensembles than those produced by popular toolkits.
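To make the idea of a tensor-based soft tree ensemble concrete, the following is a minimal NumPy sketch of a vectorized forward pass. It is not the authors' formulation or code: the sigmoid routing function, the heap-style node ordering, the multi-output leaf values, and all names (`leaf_probabilities`, `predict`, `W`, `b`, `leaf_values`) are assumptions chosen for illustration. Each internal node routes a sample left with probability `sigmoid(x·w + b)`, and a leaf's probability is the product of the routing probabilities along its path, computed for all samples and all trees at once.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def leaf_probabilities(X, W, b, depth):
    """Probability of reaching each leaf, for every sample and every tree.

    X: (N, p) features.
    W: (T, I, p) routing weights, I = 2**depth - 1 internal nodes per tree,
       stored level by level (heap order). Hypothetical layout.
    b: (T, I) routing biases.
    Returns an array of shape (N, T, 2**depth).
    """
    # Probability of going LEFT at every internal node of every tree.
    S = sigmoid(np.einsum("np,tip->nti", X, W) + b)  # (N, T, I)
    N, T, _ = S.shape
    probs = np.ones((N, T, 1))  # every sample starts at the root
    for level in range(depth):
        start, end = 2**level - 1, 2 ** (level + 1) - 1
        s = S[:, :, start:end]  # (N, T, 2**level) nodes on this level
        # Split each node's mass between its left and right child.
        probs = np.stack([probs * s, probs * (1.0 - s)], axis=-1)
        probs = probs.reshape(N, T, -1)
    return probs


def predict(X, W, b, leaf_values, depth):
    """Ensemble output: sum over trees of probability-weighted leaf values.

    leaf_values: (T, 2**depth, K), one K-dimensional output per leaf,
    so K > 1 gives a simple multi-output (multi-task style) prediction.
    """
    probs = leaf_probabilities(X, W, b, depth)
    return np.einsum("ntl,tlk->nk", probs, leaf_values)
```

Because every step is a dense array operation, the same computation could be ported to a GPU array library and differentiated with respect to `W`, `b`, and `leaf_values`, which is what makes first-order training possible for soft trees.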
