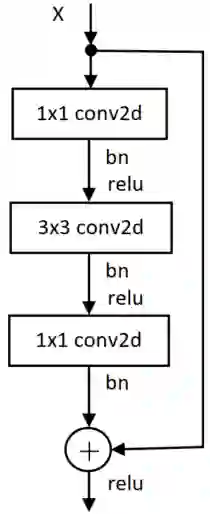

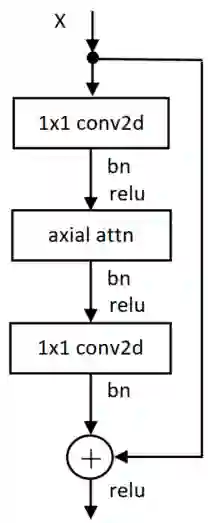

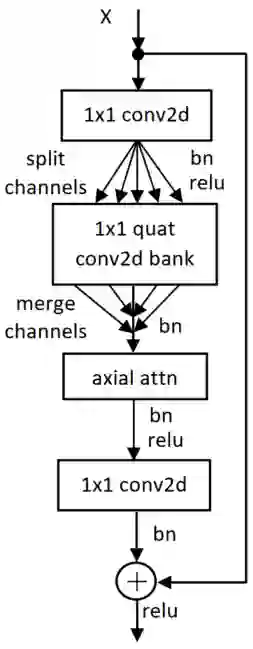

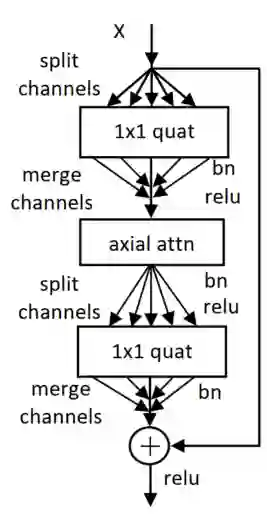

In recent years, hypercomplex-inspired neural networks (HCNNs) have been used to improve deep learning architectures because they enable channel-based weight sharing, treat a color as a single entity, and improve representational coherence within layers. This work studies the effect on image classification of replacing existing layers in an Axial Attention network with their representationally coherent variants. We experiment with the stem of the network, the bottleneck layers, and the fully connected backend, replacing each with a representationally coherent variant. These modifications lead to novel architectures, all of which yield improved accuracy on the ImageNet300k classification dataset. Our baseline networks for comparison were the original real-valued ResNet, the original quaternion-valued ResNet, and the Axial Attention ResNet. Since improvement was observed regardless of which part of the network was modified, this technique shows promise for improving classification accuracy across a large class of networks.
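To make "channel-based weight sharing" concrete, the following is a minimal sketch of a quaternion-valued linear map built from the Hamilton product, which is one common way hypercomplex layers are realized. It is an illustration under stated assumptions, not the architecture evaluated in this work: the function names `quaternion_weight` and `quaternion_linear` are hypothetical, and the input is assumed to be laid out as four stacked component blocks (r, i, j, k).

```python
import numpy as np

def quaternion_weight(w_r, w_i, w_j, w_k):
    """Assemble the real matrix that applies the Hamilton product W (x) x.

    Each of the four component matrices is reused in four places,
    which is the weight sharing across channels (hypothetical helper).
    """
    return np.block([
        [w_r, -w_i, -w_j, -w_k],
        [w_i,  w_r, -w_k,  w_j],
        [w_j,  w_k,  w_r, -w_i],
        [w_k, -w_j,  w_i,  w_r],
    ])

def quaternion_linear(x, w_r, w_i, w_j, w_k):
    """Apply the quaternion map to x, stacked as [x_r; x_i; x_j; x_k]."""
    return quaternion_weight(w_r, w_i, w_j, w_k) @ x

# Sanity check with 1x1 components: the quaternion i times j equals k.
one = np.ones((1, 1))
zero = np.zeros((1, 1))
j_vec = np.array([0.0, 0.0, 1.0, 0.0])  # the pure quaternion j
ij = quaternion_linear(j_vec, zero, one, zero, zero)  # weight is the quaternion i

# Parameter sharing: an 8x8 real map built from four 2x2 components.
rng = np.random.default_rng(0)
parts = [rng.standard_normal((2, 2)) for _ in range(4)]
full = quaternion_weight(*parts)
free_params = sum(p.size for p in parts)
```

In this sketch the 8x8 real matrix has 64 entries but only 16 free parameters, so the four input components (for example the color channels of a pixel plus one extra channel) are mixed by a single shared set of weights rather than by independent ones.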
