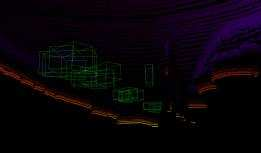

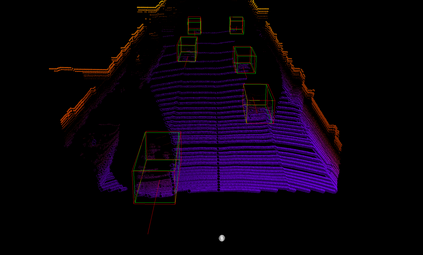

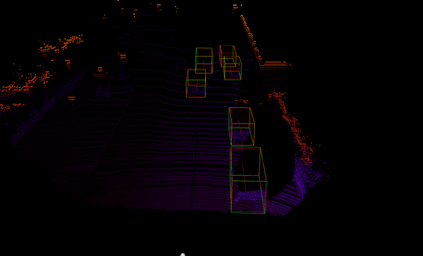

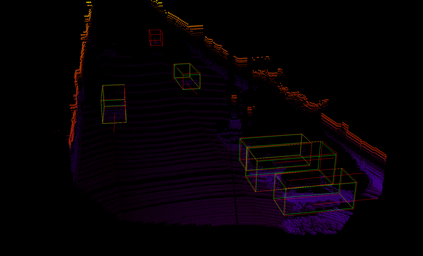

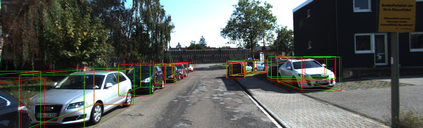

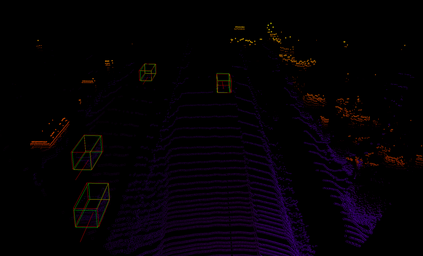

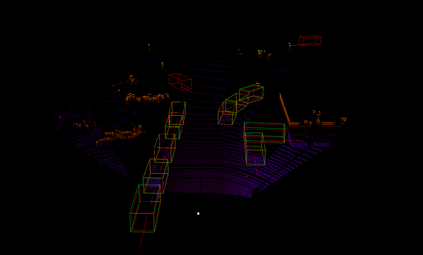

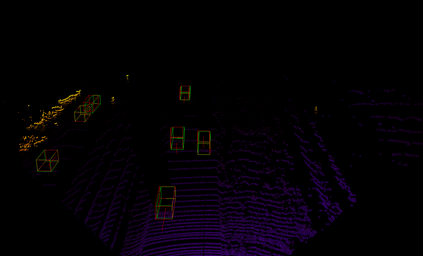

When localizing and detecting 3D objects for autonomous driving scenes, obtaining information from multiple sensor (e.g. camera, LIDAR) typically increases the robustness of 3D detectors. However, the efficient and effective fusion of different features captured from LIDAR and camera is still challenging, especially due to the sparsity and irregularity of point cloud distributions. This notwithstanding, point clouds offer useful complementary information. In this paper, we would like to leverage the advantages of LIDAR and camera sensors by proposing a deep neural network architecture for the fusion and the efficient detection of 3D objects by identifying their corresponding 3D bounding boxes with orientation. In order to achieve this task, instead of densely combining the point-wise feature of the point cloud and the related pixel features, we propose a novel fusion algorithm by projecting a set of 3D Region of Interests (RoIs) from the point clouds to the 2D RoIs of the corresponding the images. Finally, we demonstrate that our deep fusion approach achieves state-of-the-art performance on the KITTI 3D object detection challenging benchmark.

翻译:在为自动驾驶场定位和探测三维物体时,从多个传感器(例如相机、LIDAR)获取信息通常会提高三维探测器的坚固度;然而,从拉热雷达和相机中捕捉到的不同特征的高效和有效融合仍然具有挑战性,特别是由于点云分布的广度和不规则性。尽管如此,点云提供了有用的补充信息。在本文件中,我们希望利用LIDAR和相机传感器的优势,提出一个深神经网络结构,通过确定相应的三维捆绑框和定向来进行融合和有效探测三维物体。为了完成这项任务,我们建议采用新的组合算法,从点云到相应图像的2D RoIs 投射一套三维利益区。最后,我们证明,我们的深融合方法在KITTI 3D物体探测基准上取得了最先进的性能。