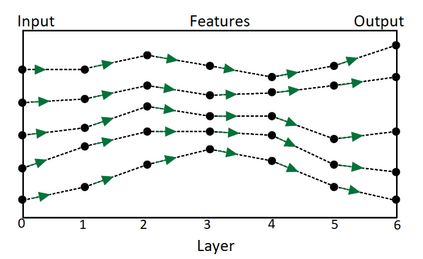

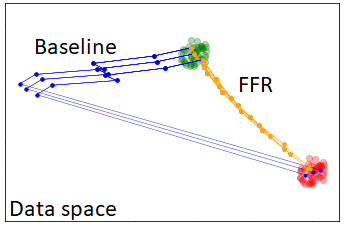

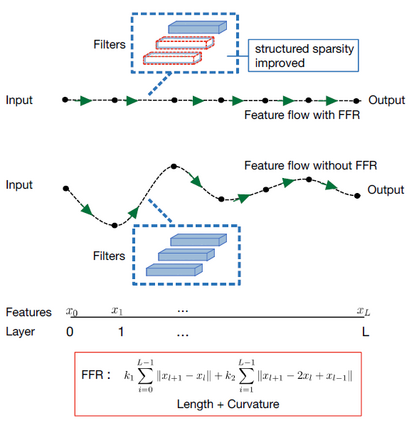

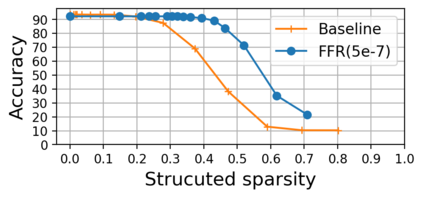

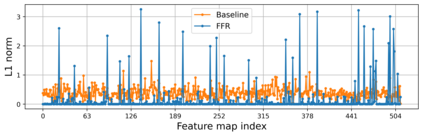

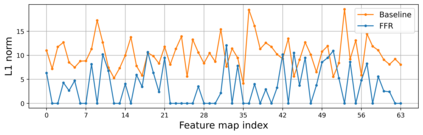

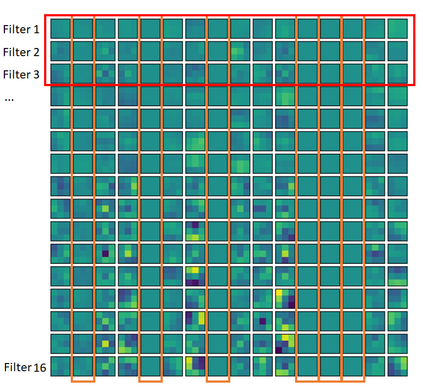

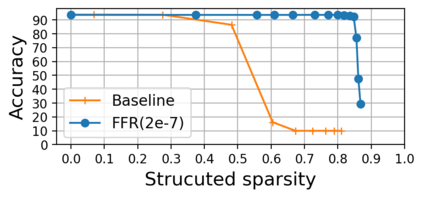

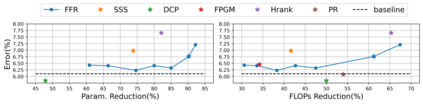

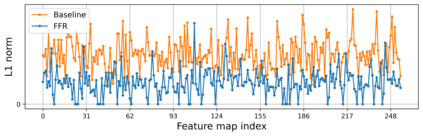

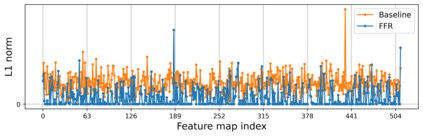

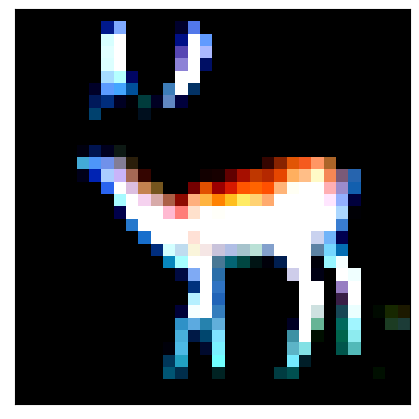

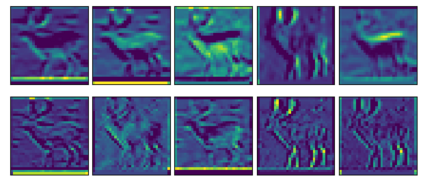

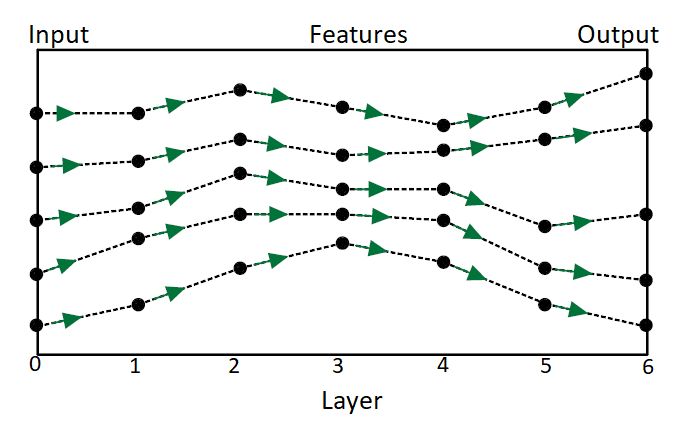

Pruning is a model compression method that removes redundant parameters from deep neural networks (DNNs) while maintaining accuracy. Most existing filter pruning methods require complex treatments such as iterative pruning, feature statistics or ranking, or additional optimization designs in the training process. In this paper, we propose a simple and effective regularization strategy, built on the new perspective of feature evolution, which we call feature flow regularization (FFR), for improving structured sparsity and filter pruning in DNNs. Specifically, FFR controls the gradient and curvature of the feature flow along the network, which implicitly increases the sparsity of the parameters. The principle behind FFR is that a coherent and smooth evolution of features leads to an efficient network that avoids redundant parameters. The high structured sparsity obtained with FFR enables effective filter pruning. Experiments with VGGNets and ResNets on the CIFAR-10/100 and Tiny ImageNet datasets demonstrate that FFR significantly improves both unstructured and structured sparsity. In terms of parameter and FLOPs reduction, our pruning results are comparable to or better than those of state-of-the-art pruning methods.
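The abstract does not give FFR's exact formula, so the following is only a minimal sketch under stated assumptions: the "gradient" of the feature flow is approximated by the first finite difference between consecutive layer features, x[l+1] - x[l], the "curvature" by the second difference, x[l+1] - 2x[l] + x[l-1], and both are penalized with squared Euclidean norms weighted by hypothetical coefficients `lambda_grad` and `lambda_curv`. It also assumes all features share one dimension (as within a residual stage). The helper `filters_to_keep` is likewise hypothetical: it illustrates norm-threshold filter pruning, one common way to exploit structured sparsity, not necessarily the paper's pruning criterion. Plain Python lists stand in for tensors; in practice such a term would be computed on framework tensors and added to the training loss.

```python
from typing import List, Sequence

Vector = List[float]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vector:
    """Element-wise difference a - b of two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return [x - y for x, y in zip(a, b)]


def _sq_norm(v: Sequence[float]) -> float:
    """Squared Euclidean norm ||v||^2."""
    return sum(x * x for x in v)


def feature_flow_penalty(
    features: Sequence[Sequence[float]],
    lambda_grad: float = 1.0,  # hypothetical weight of the gradient term
    lambda_curv: float = 1.0,  # hypothetical weight of the curvature term
) -> float:
    """Assumed FFR-style penalty on the discrete feature flow.

    features[l] is the flattened feature after layer l.
    gradient  ~ x[l+1] - x[l]             (first finite difference)
    curvature ~ x[l+1] - 2*x[l] + x[l-1]  (second finite difference)
    """
    grad_term = 0.0
    for l in range(len(features) - 1):
        grad_term += _sq_norm(_sub(features[l + 1], features[l]))

    curv_term = 0.0
    for l in range(1, len(features) - 1):
        step_next = _sub(features[l + 1], features[l])
        step_prev = _sub(features[l], features[l - 1])
        curv_term += _sq_norm(_sub(step_next, step_prev))

    return lambda_grad * grad_term + lambda_curv * curv_term


def filters_to_keep(filter_norms: Sequence[float], threshold: float) -> List[int]:
    """Hypothetical pruning step: keep filters whose norm exceeds `threshold`."""
    return [i for i, n in enumerate(filter_norms) if n > threshold]
```

A straight-line feature flow such as `[[0.0], [1.0], [2.0]]` incurs no curvature penalty, while a bending flow such as `[[0.0], [1.0], [3.0]]` does, which matches the intuition that smooth, coherent feature evolution is favored. After training with such a regularizer, filters driven toward zero norm can be dropped with `filters_to_keep`.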

翻译:在本文中,我们从功能演变的新角度提出了一个简单有效的规范化战略,我们称之为“功能流规范化”(FFR),目的是改进DNNS的结构性宽度和过滤运行。具体地说,FFFR对神经网络沿线地貌流的梯度和曲度进行控制,这间接地增加了参数的广度。FFFR的原则是,特征的连贯和平稳演变将导致一个高效的网络,避免冗余参数。从FFFR获得的高结构宽度使我们能够有效地利用过滤器。与VGGNets、CIFAR-10/100上的ResNets和Tinyy图像网数据集进行的实验表明,FFR能够大大改进神经网络上地貌流动的梯度和曲度,这间接地增加了参数的广度。我们运行的结果是,参数和FLOPs的减少和FLOPs运行方法比这些状态的方法要好。