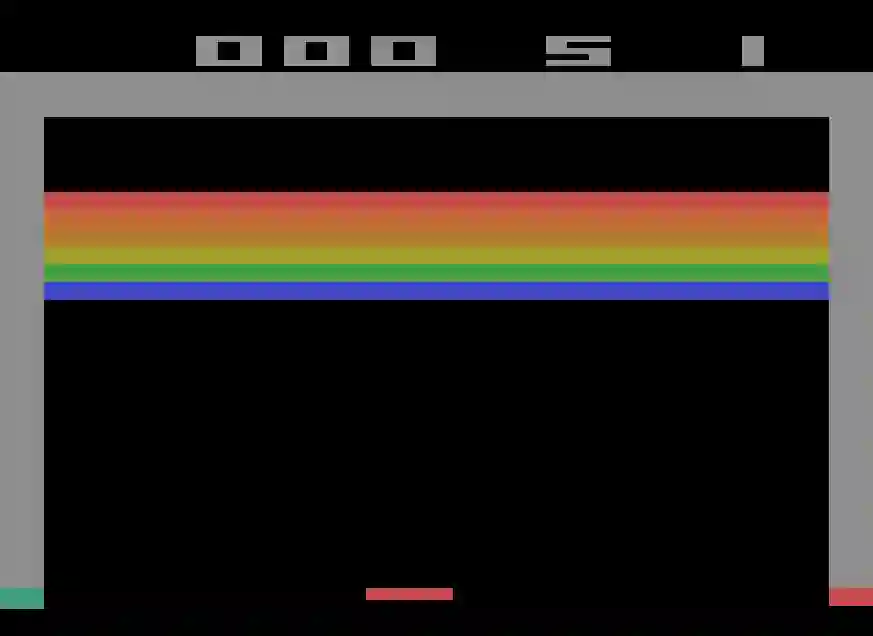

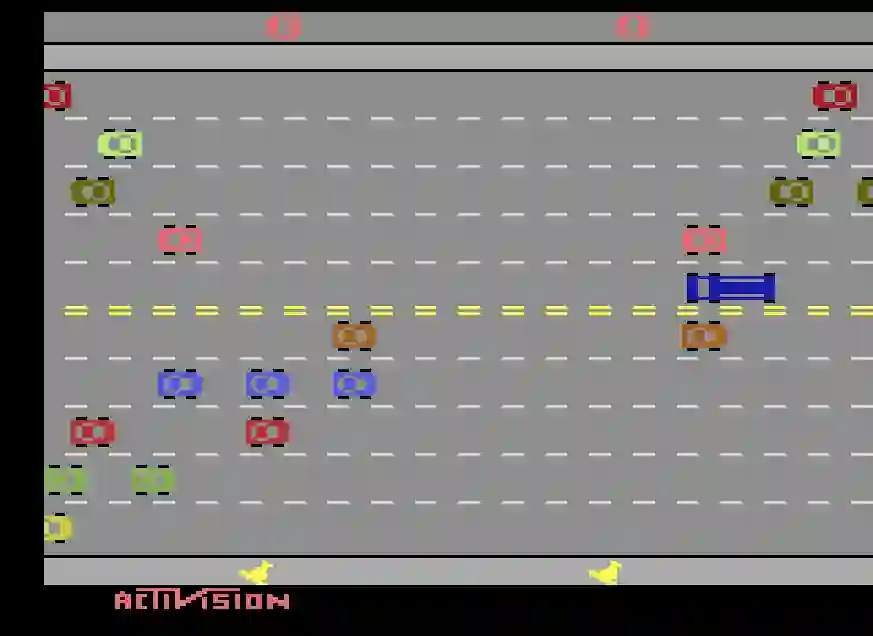

Deep reinforcement learning (RL) algorithms have shown an impressive ability to learn complex control policies in high-dimensional environments. However, despite ever-increasing performance on popular benchmarks such as the Arcade Learning Environment (ALE), policies learned by deep RL algorithms often struggle to generalize when evaluated in remarkably similar environments. In this paper, we assess the generalization capabilities of DQN, one of the most traditional deep RL algorithms in the field. We provide evidence suggesting that DQN overspecializes to the training environment. We comprehensively evaluate how traditional regularization methods, $\ell_2$-regularization and dropout, and the reuse of learned representations affect the generalization capabilities of DQN. We perform this study using the different game modes of Atari 2600 games, a recently introduced modification to the ALE that supports slight variations of the Atari 2600 games traditionally used for benchmarking. Although regularization is largely underutilized in deep RL, we show that it can, in fact, help DQN learn more general features. These features can then be reused and fine-tuned on similar tasks, considerably improving the sample efficiency of DQN.
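As a rough illustration of the two regularizers named above, the following sketch adds an $\ell_2$ penalty to a one-step DQN-style temporal-difference (TD) loss and applies inverted dropout to a list of activations. It is a minimal pure-Python sketch under stated assumptions, not the paper's implementation. The function names `l2_regularized_td_loss` and `inverted_dropout` are hypothetical. The plain squared TD error is a simplification, since DQN implementations commonly use the Huber loss. The defaults for `gamma` (discount) and `lam` (penalty weight) are illustrative values only.

```python
import random


def l2_regularized_td_loss(q_sa, rewards, q_next_target, dones, weights,
                           gamma=0.99, lam=1e-4):
    """Mean squared one-step TD error plus an l2 penalty lam * sum(w^2).

    Hypothetical helper for illustration (squared error, not Huber).
    q_sa:          Q(s, a; theta) for the action taken in each transition.
    rewards:       reward r for each transition.
    q_next_target: per transition, the target network's values Q(s', a'; theta^-)
                   over all actions a'.
    dones:         1.0 if s' is terminal (no bootstrapping), else 0.0.
    weights:       flat list of the online network's parameters theta.
    """
    squared_errors = []
    for q, r, next_values, done in zip(q_sa, rewards, q_next_target, dones):
        # Bootstrapped target: r + gamma * max_a' Q(s', a'; theta^-).
        target = r + gamma * (1.0 - done) * max(next_values)
        squared_errors.append((target - q) ** 2)
    mse = sum(squared_errors) / len(squared_errors)
    # l2 regularization penalizes large parameter values.
    penalty = lam * sum(w * w for w in weights)
    return mse + penalty


def inverted_dropout(activations, p, rng):
    """Zero each unit with probability p and scale survivors by 1 / (1 - p),
    so the expected activation matches the no-dropout value at test time."""
    if not 0.0 <= p < 1.0:
        raise ValueError("dropout probability p must be in [0, 1)")
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

For example, with `gamma=0.5` and `lam=0.1`, a single non-terminal transition with `q_sa=[1.0]`, `rewards=[1.0]`, `q_next_target=[[2.0, 0.5]]` and `weights=[1.0, 2.0]` has target 2.0, squared TD error 1.0 and penalty 0.5, for a total loss of 1.5. In this sketch, dropout is applied only during training; at evaluation time the activations are passed through unchanged.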

翻译:深入强化学习(RL)算法显示,在高维环境中学习复杂的控制政策的能力令人印象深刻,然而,尽管在诸如Arcade学习环境(ALE)等流行基准上,表现越来越高,但深RL算法所学的政策往往难以在非常相似的环境中加以普遍评价。在本文件中,我们评估了DQN的普及能力,这是该领域最传统的最深RL算法之一。我们提供了证据表明,DQN过于专门从事培训环境。我们全面评估了传统正规化方法($@ell_2$-正规化和辍学)的影响,以及重新使用学到的表达法来提高DQN的普及能力。我们利用Atari 2600游戏的不同游戏模式进行了这项研究,最近对Atari 2600游戏进行了修改,支持了传统用于基准的Atari 2600游戏的微小变化。尽管在深度RL中,我们证明,正规化在事实上可以帮助DQN学习更普遍的特征。这些特征可以再利用和微调类似任务,大大地提高DQN的样本效率。