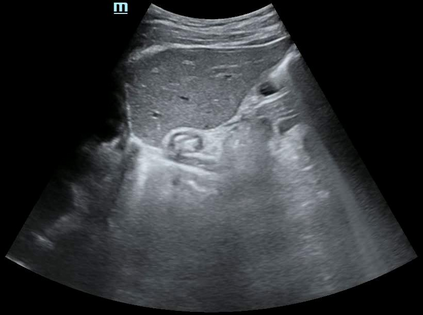

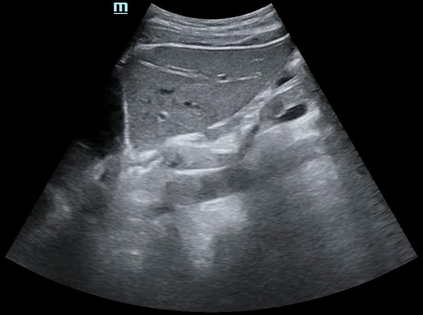

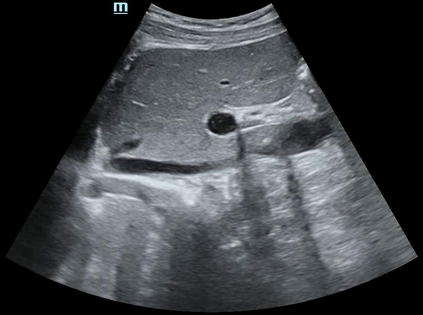

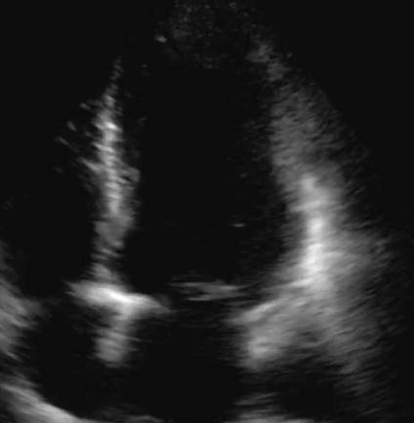

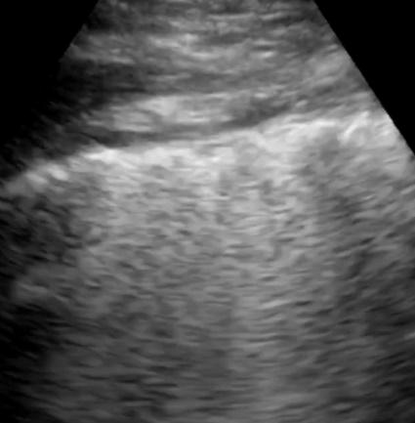

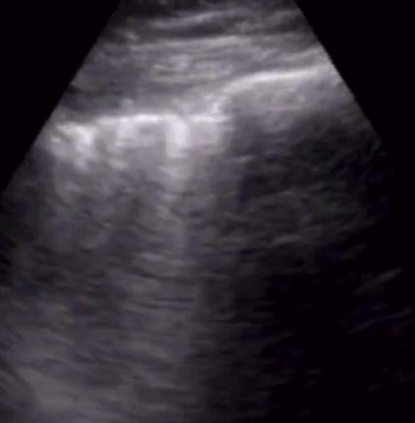

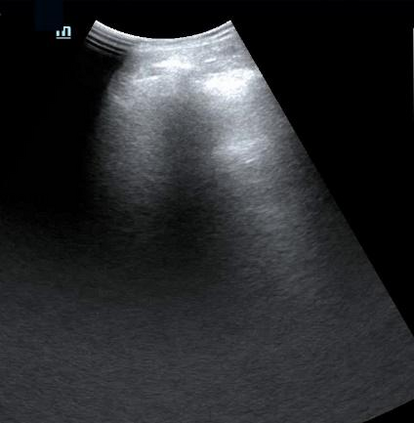

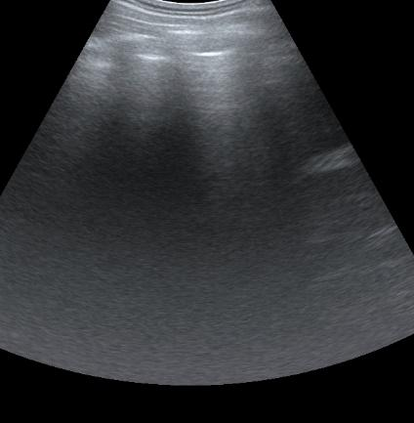

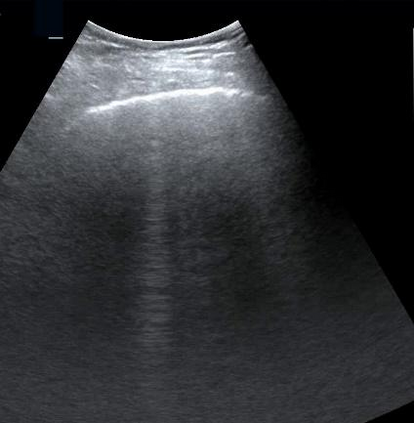

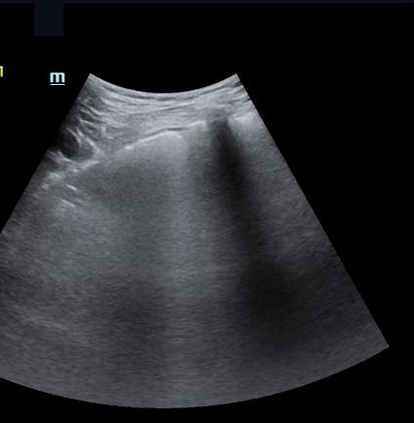

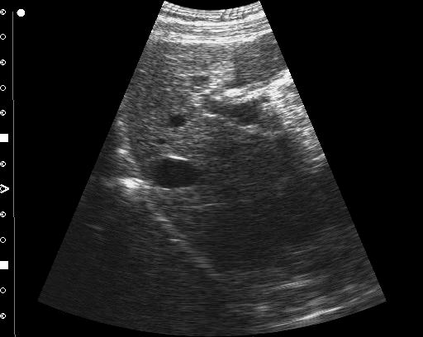

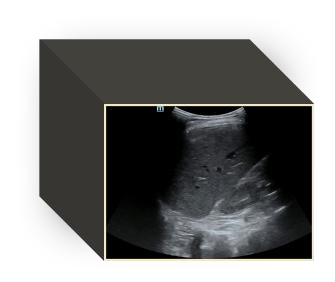

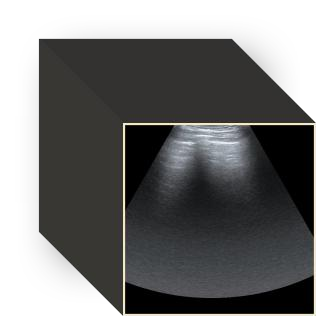

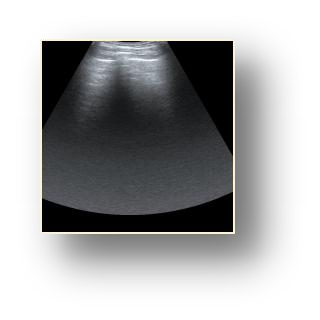

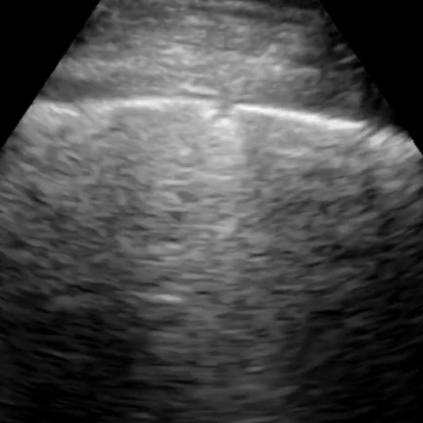

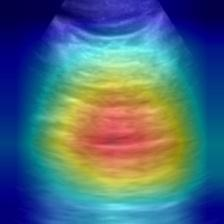

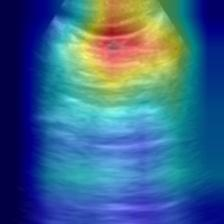

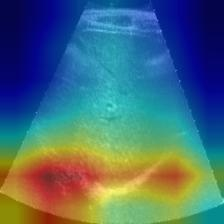

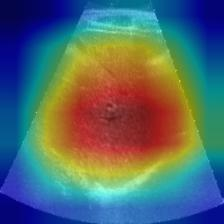

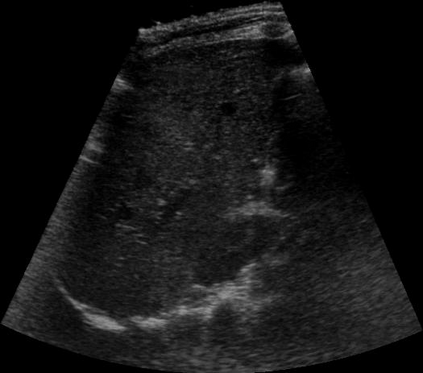

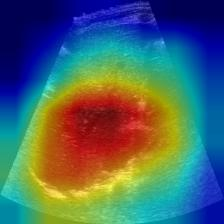

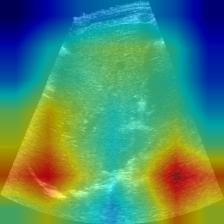

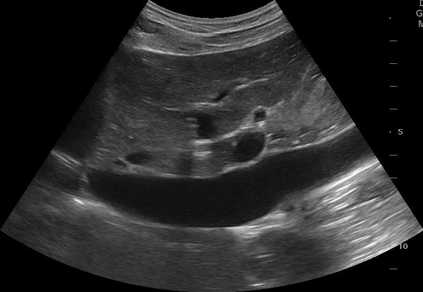

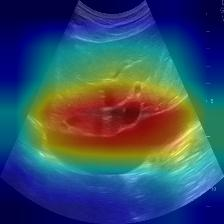

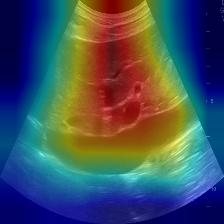

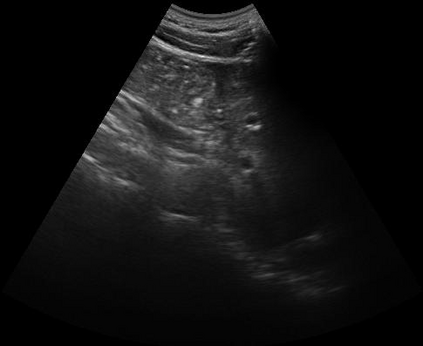

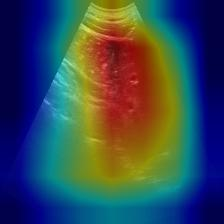

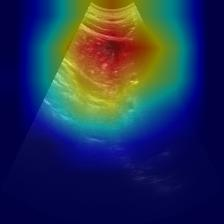

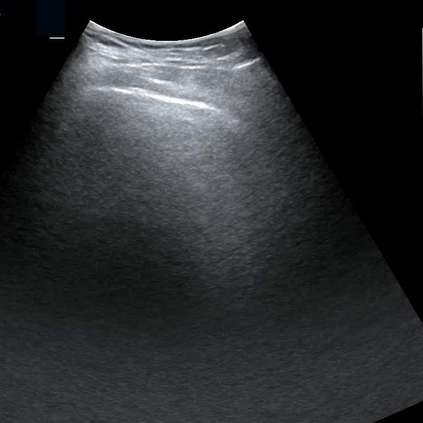

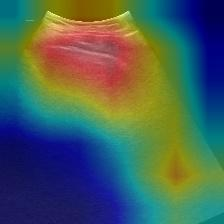

Most ultrasound (US) medical image analysis models based on deep neural networks (DNNs) use backbones pretrained on natural-image datasets (e.g., ImageNet) for better generalization. However, the domain gap between natural and medical images causes an inevitable performance bottleneck. To alleviate this problem, a US dataset named US-4 is constructed for pretraining directly on the same domain. It contains over 23,000 images from four US video sub-datasets. To learn robust features from US-4, we propose a US semi-supervised contrastive learning method, named USCL, for pretraining. To avoid high similarity between negative pairs and to mine abundant visual features from limited US videos, USCL adopts a sample pair generation method that enriches the features involved in a single step of contrastive optimization. Extensive experiments on several downstream tasks show the superiority of USCL pretraining over ImageNet pretraining and other state-of-the-art (SOTA) pretraining approaches. In particular, the USCL pretrained backbone achieves a fine-tuning accuracy of over 94% on the POCUS dataset, 10 percentage points higher than the 84% of the ImageNet pretrained model. The source code of this work is available at https://github.com/983632847/USCL.
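To make the idea of video-based contrastive pretraining concrete, the following is a minimal sketch and not the USCL implementation. The abstract does not say how sample pairs are generated, so the sketch assumes that two frames drawn from the same video form a positive pair and that frames from other videos in the batch act as negatives. It uses the standard NT-Xent loss (as in SimCLR) as a stand-in contrastive objective. The function names `sample_pair` and `nt_xent_loss`, the temperature value, and the toy feature vectors are all hypothetical.

```python
import math
import random


def sample_pair(video_frames, rng):
    """Pick two distinct frames from one video as a positive pair.

    Assumption: this is an illustrative pairing rule, not the pair
    generation method used by USCL.
    """
    i, j = rng.sample(range(len(video_frames)), 2)
    return video_frames[i], video_frames[j]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_loss(anchors, positives, temperature=0.5):
    """NT-Xent loss over a batch of embedding pairs.

    anchors[k] and positives[k] are assumed to come from the same video;
    every other embedding in the batch is a negative for that pair.
    """
    embeddings = anchors + positives
    n = len(anchors)
    total = 0.0
    for idx, z in enumerate(embeddings):
        pos_idx = (idx + n) % (2 * n)
        pos_logit = cosine(z, embeddings[pos_idx]) / temperature
        # Denominator sums over every embedding except z itself.
        denom = sum(
            math.exp(cosine(z, other) / temperature)
            for k, other in enumerate(embeddings)
            if k != idx
        )
        total += -pos_logit + math.log(denom)
    return total / (2 * n)


# Toy data: each "video" is a list of already-embedded frames (hypothetical values).
videos = [
    [[1.0, 0.1], [0.9, 0.2], [1.0, 0.0]],
    [[0.1, 1.0], [0.0, 0.9], [0.2, 1.0]],
]
rng = random.Random(0)
pairs = [sample_pair(frames, rng) for frames in videos]
anchors = [p[0] for p in pairs]
positives = [p[1] for p in pairs]
print(nt_xent_loss(anchors, positives))
```

In a real pipeline the frames would be images passed through an encoder before the loss is computed; here the vectors stand in for encoder outputs so that the example stays self-contained.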
