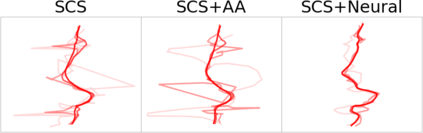

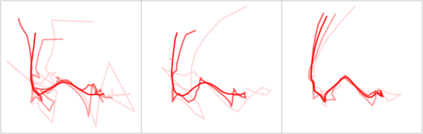

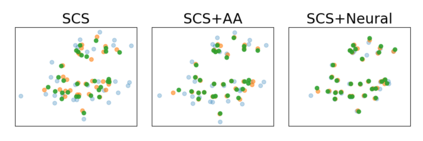

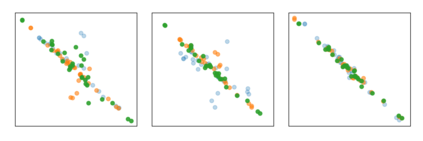

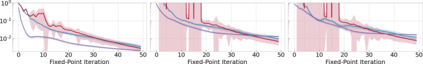

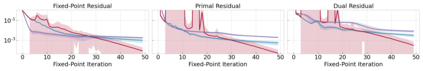

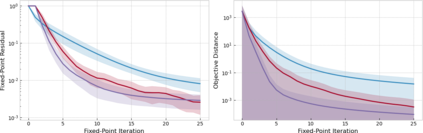

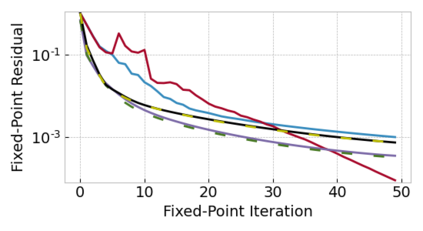

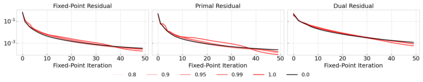

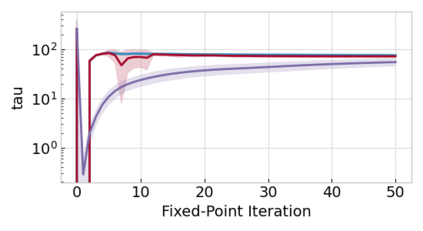

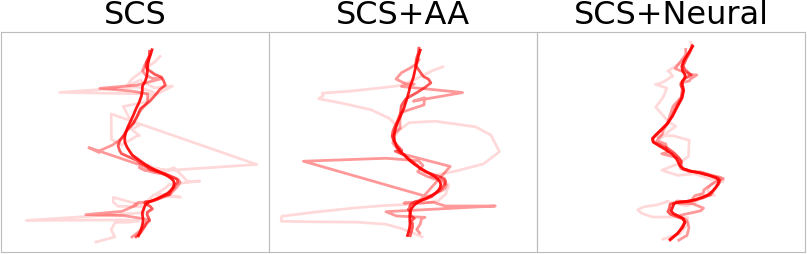

Fixed-point iterations are at the heart of numerical computing and are often a computational bottleneck in real-time applications that typically need a fast solution of moderate accuracy. We present neural fixed-point acceleration, which combines ideas from meta-learning and classical acceleration methods to automatically learn to accelerate fixed-point problems drawn from a distribution. We apply our framework to SCS, the state-of-the-art solver for convex cone programming, and design models and loss functions to overcome the challenges of learning over unrolled optimization and acceleration instabilities. Our work brings neural acceleration to any optimization problem expressible with CVXPY. The source code behind this paper is available at https://github.com/facebookresearch/neural-scs.
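
The abstract contrasts the learned approach with classical acceleration methods. As a point of reference only (this is not the paper's neural method), the NumPy sketch below compares plain fixed-point iteration with type-II Anderson acceleration, one classical scheme. The function names `plain_iteration` and `anderson_iteration`, the memory size `m`, the tolerance, and the linear test map are illustrative assumptions.

```python
import numpy as np


def plain_iteration(f, x0, tol=1e-10, max_iter=10_000):
    """Iterate x_{k+1} = f(x_k) until the residual ||f(x) - x|| drops below tol."""
    x = x0
    for k in range(1, max_iter + 1):
        x_next = f(x)
        if np.linalg.norm(x_next - x) < tol:
            return x_next, k
        x = x_next
    return x, max_iter


def anderson_iteration(f, x0, m=5, tol=1e-10, max_iter=10_000):
    """Type-II Anderson acceleration keeping the last m difference pairs."""
    x = x0
    g = f(x) - x  # fixed-point residual g(x) = f(x) - x
    dX, dG = [], []  # histories of iterate and residual differences
    for k in range(1, max_iter + 1):
        if np.linalg.norm(g) < tol:
            return x, k
        if dG:
            DX, DG = np.column_stack(dX), np.column_stack(dG)
            # Least-squares mixing weights: gamma = argmin ||g - DG @ gamma||
            gamma, *_ = np.linalg.lstsq(DG, g, rcond=None)
            x_next = x + g - (DX + DG) @ gamma
        else:
            x_next = x + g  # first step is a plain fixed-point step
        g_next = f(x_next) - x_next
        dX.append(x_next - x)
        dG.append(g_next - g)
        if len(dX) > m:  # drop the oldest pair to keep memory m
            dX.pop(0)
            dG.pop(0)
        x, g = x_next, g_next
    return x, max_iter


# Hypothetical test problem: a linear contraction f(x) = A x + b whose
# slowest mode contracts by a factor of 0.99 per step.
A = np.diag(np.linspace(0.0, 0.99, 50))
b = np.ones(50)


def f(x):
    return A @ x + b


x_star = np.linalg.solve(np.eye(50) - A, b)  # exact fixed point

x_plain, k_plain = plain_iteration(f, np.zeros(50))
x_aa, k_aa = anderson_iteration(f, np.zeros(50))
print(f"plain: {k_plain} iterations, Anderson: {k_aa} iterations")
```

On this slowly contracting map, plain iteration needs over two thousand steps to reach the tolerance, while Anderson's least-squares extrapolation over recent iterates typically needs far fewer. The approach described above instead learns to accelerate such iterations from a distribution of problems.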

翻译:固定点迭代是数字计算的核心,往往是实时应用的计算瓶颈,通常需要适度精确的快速解决方案。我们提出神经固定点加速,将元学习和经典加速法的理念结合起来,自动学习加速从分布中得出的固定点问题。我们把框架应用到SCS,即最先进的连接连接程序设计解决方案解决方案,设计模型和损失功能,以克服在无滚动优化和加速不稳性方面学习的挑战。我们的工作将神经加速引入任何与 CVXPY 可见的优化问题。本文的源代码可在https://github.com/facebookresearch/neural-scs查阅。