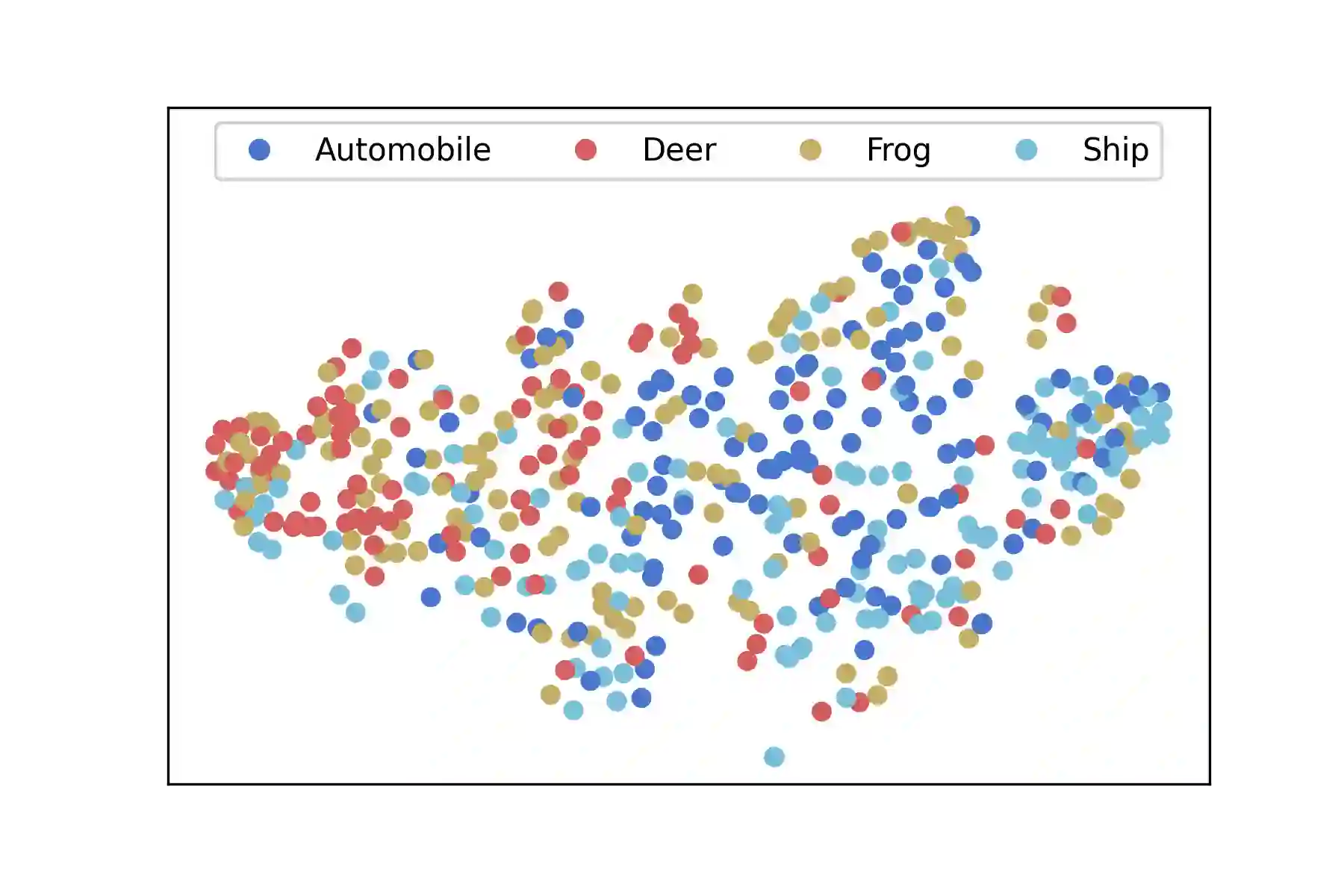

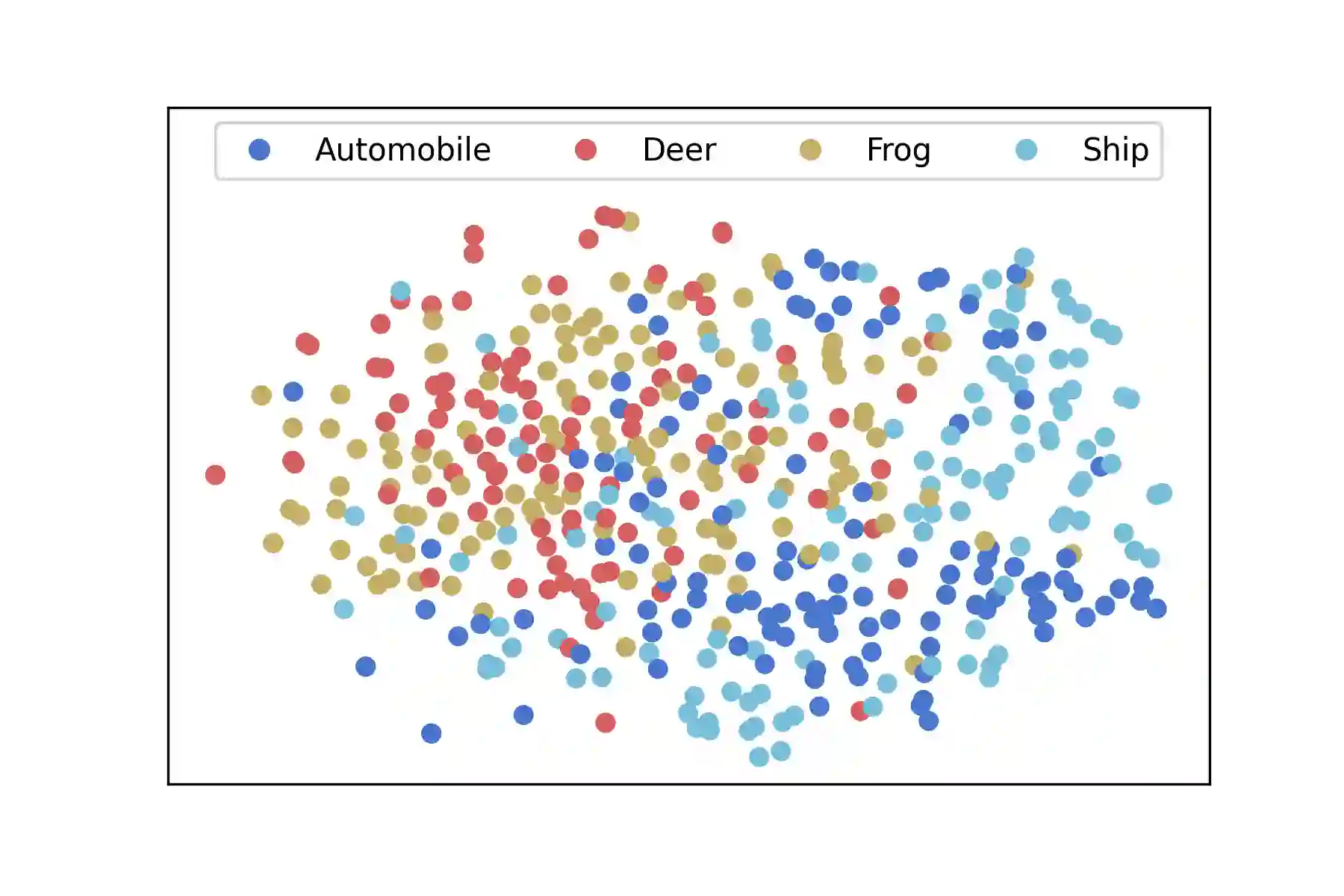

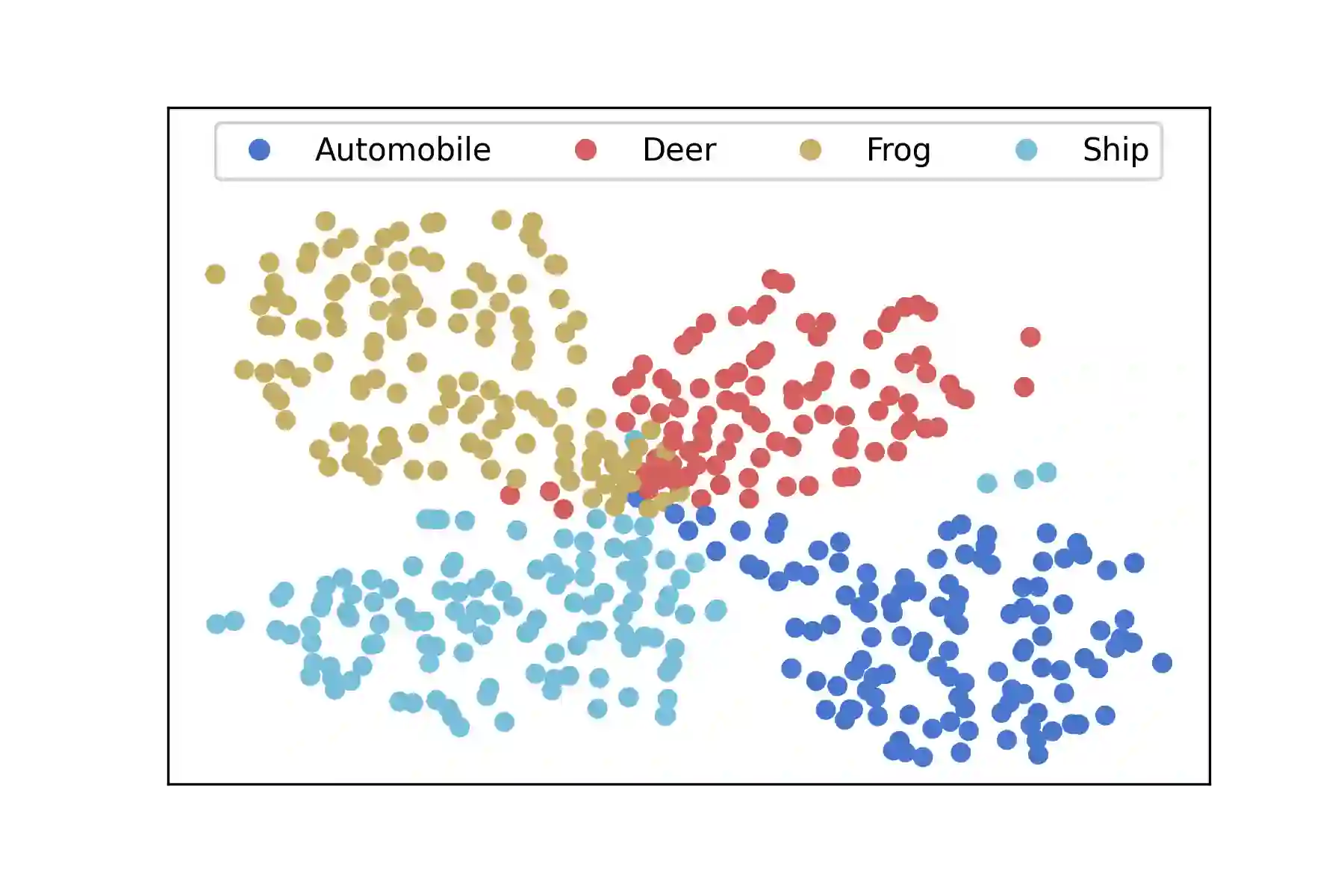

Self-supervised learning has recently attracted great attention because it requires only unlabeled data for training. Contrastive learning is one popular approach to self-supervised learning and has achieved promising empirical performance. However, the theoretical understanding of its generalization ability is still limited. To this end, we define a $(\sigma,\delta)$-measure to mathematically quantify the data augmentation, and then provide an upper bound on the downstream classification error based on this measure. We show that the generalization ability of contrastive self-supervised learning depends on three key factors: alignment of positive samples, divergence of class centers, and concentration of augmented data. The first two factors can be optimized by contrastive algorithms, while the third is determined a priori by the pre-defined data augmentation. Building on these theoretical findings, we further study two canonical contrastive losses, InfoNCE and the cross-correlation loss, and prove that both are indeed able to satisfy the first two factors. Moreover, we empirically verify the third factor through various experiments on real-world data, and show that our theoretical inferences on the relationship between data augmentation and the generalization of contrastive self-supervised learning agree with the empirical observations.
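As a rough illustration of the first factor and of one of the two losses named above, the following sketch computes a common batch form of InfoNCE together with a simple alignment proxy for positive pairs. It is not taken from the paper: the function names `info_nce` and `alignment`, the `temperature` default, the use of in-batch negatives, and the squared-distance proxy are all assumptions made for this example.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def info_nce(anchors, positives, temperature=0.5):
    """Mean InfoNCE loss over a batch (one common form, assumed here).

    anchors[i] and positives[i] are embeddings of two augmented views of
    sample i; positives[j] with j != i act as negatives for anchor i.
    """
    a = [normalize(v) for v in anchors]
    p = [normalize(v) for v in positives]
    total = 0.0
    for i, ai in enumerate(a):
        logits = [dot(ai, pj) / temperature for pj in p]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        # Cross-entropy with the true positive at index i.
        total += log_sum - logits[i]
    return total / len(a)


def alignment(anchors, positives):
    """Mean squared distance between normalized positive pairs.

    An illustrative proxy for "alignment of positive samples": lower
    values mean the two views of a sample are embedded closer together.
    """
    total = 0.0
    for u, v in zip(anchors, positives):
        u, v = normalize(u), normalize(v)
        total += sum((x - y) ** 2 for x, y in zip(u, v))
    return total / len(anchors)


# Toy 2-D embeddings: in `aligned`, each positive matches its anchor;
# in `swapped`, each positive matches the other sample's anchor.
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]

loss_aligned = info_nce(anchors, aligned)
loss_swapped = info_nce(anchors, swapped)
```

On this toy batch, the well-aligned pairs give both a smaller alignment value and a smaller InfoNCE loss than the swapped pairs, which is consistent with the claim that minimizing a contrastive loss encourages alignment of positive samples. The third factor, concentration of augmented data, depends on the augmentation itself and is not something this loss computation can change.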
