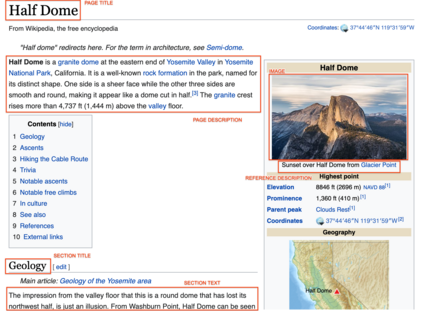

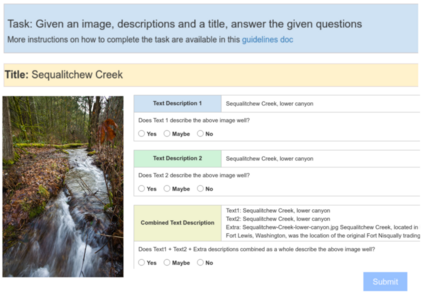

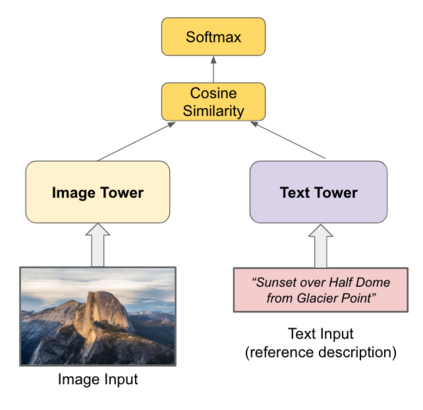

Advances in deep representation learning and pre-training techniques have led to large performance gains across downstream NLP, IR and Vision tasks. Multimodal modeling techniques aim to leverage large, high-quality visio-linguistic datasets to learn complementary information across the image and text modalities. In this paper, we introduce the Wikipedia-based Image Text (WIT) Dataset (https://github.com/google-research-datasets/wit) to better facilitate multimodal, multilingual learning. WIT is composed of a curated set of 37.6 million entity-rich image-text examples with 11.5 million unique images across 108 Wikipedia languages. Its size enables WIT to be used as a pretraining dataset for multimodal models, as we show by applying it to downstream tasks such as image-text retrieval. WIT has four main advantages. First, at the time of writing, WIT is the largest multimodal dataset by number of image-text examples, by a factor of 3. Second, WIT is massively multilingual (the first of its kind), covering more than 100 languages, each of which has at least 12K examples, and it provides cross-lingual texts for many images. Third, WIT represents a more diverse set of concepts and real-world entities than previous datasets cover. Lastly, WIT provides a very challenging real-world test set, as we empirically illustrate using an image-text retrieval task as an example.
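The relationship between image-text examples, unique images, and languages described above can be illustrated with a small sketch. It is not the dataset's actual schema or loading code: the record fields `language`, `image_url`, and `text`, the toy records, and the helper `summarize` are all hypothetical and chosen only to show how one image can yield several examples in different languages.

```python
from collections import Counter, defaultdict

# Toy records. The field names "language", "image_url", and "text" are
# hypothetical and used only for illustration; they are not taken from
# the dataset's documentation.
examples = [
    {"language": "en", "image_url": "img_a.jpg", "text": "Half Dome at sunset"},
    {"language": "fr", "image_url": "img_a.jpg", "text": "Half Dome au coucher du soleil"},
    {"language": "en", "image_url": "img_b.jpg", "text": "A red fox"},
    {"language": "ja", "image_url": "img_c.jpg", "text": "富士山"},
]


def summarize(records):
    """Count examples, unique images, per-language examples, and
    images that have text in more than one language."""
    per_language = Counter(r["language"] for r in records)

    # Map each image to the set of languages in which it has text.
    languages_per_image = defaultdict(set)
    for r in records:
        languages_per_image[r["image_url"]].add(r["language"])

    cross_lingual = sorted(
        img for img, langs in languages_per_image.items() if len(langs) > 1
    )
    return {
        "num_examples": len(records),
        "num_unique_images": len(languages_per_image),
        "examples_per_language": dict(per_language),
        "cross_lingual_images": cross_lingual,
    }


stats = summarize(examples)
print(stats)
```

In this toy data, four examples share three unique images because `img_a.jpg` has text in two languages, which mirrors on a small scale why the number of examples exceeds the number of unique images.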
