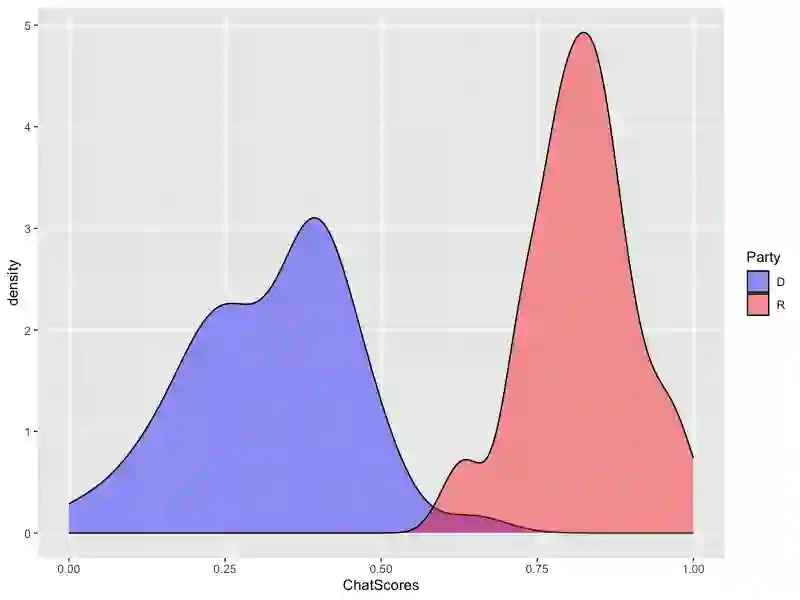

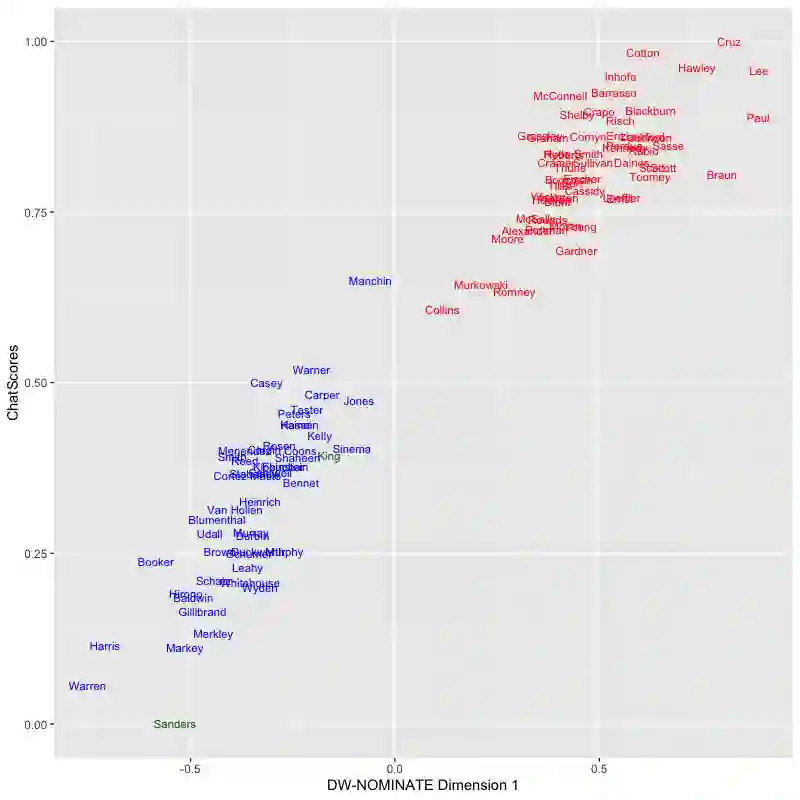

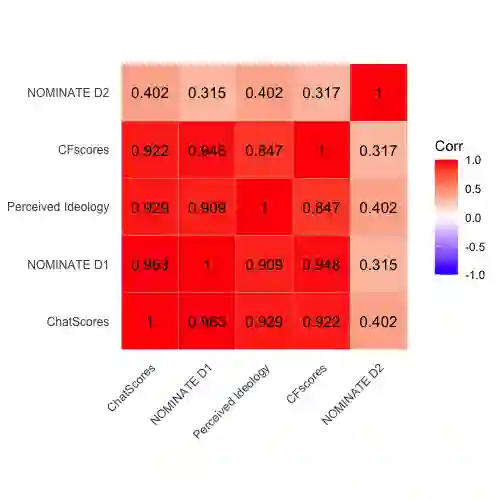

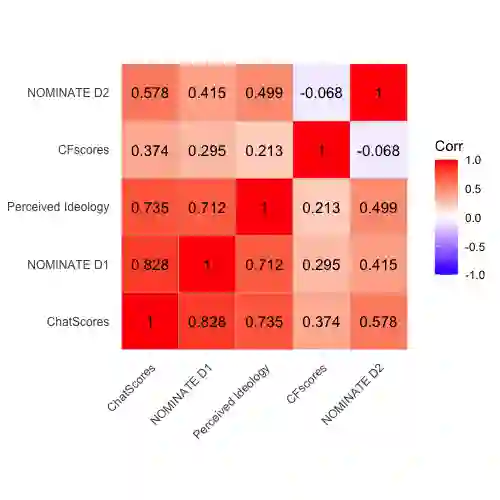

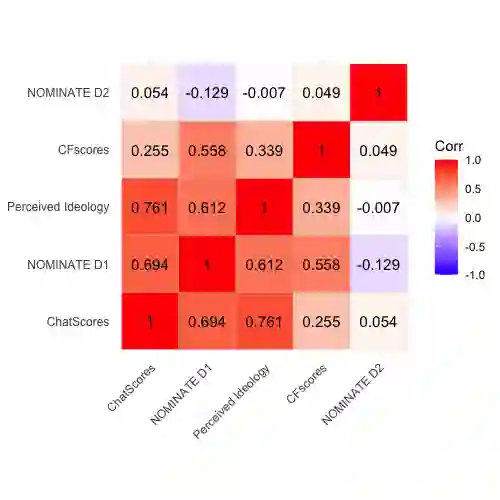

The mass aggregation of knowledge embedded in large language models (LLMs) holds the promise of new solutions to problems of observability and measurement in the social sciences. We examine the utility of one such model for a particularly difficult measurement task: measuring the latent ideology of lawmakers, which helps us understand functions core to democracy, such as how politics shapes policy and how political actors represent their constituents. We scale the senators of the 116th United States Congress along the liberal-conservative spectrum by prompting ChatGPT to select the more liberal (or more conservative) senator in pairwise comparisons. We show that the LLM produced stable answers across repeated iterations, did not hallucinate, and was not simply regurgitating information from a single source. This new scale correlates strongly with pre-existing liberal-conservative scales such as NOMINATE, but it also differs from them in several important ways. For example, it correctly places at the extreme ends those senators who vote against their party for far-left or far-right ideological reasons. The scale also correlates highly with ideological measures based on campaign giving and on political activists' perceptions of these senators. Beyond the potential for better automated data collection and information retrieval, our results suggest that LLMs are likely to open new avenues for measuring latent constructs, such as ideology, that rely on aggregating large quantities of data from public sources.
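
The abstract does not say how the pairwise "which senator is more liberal?" answers are turned into a single scale. One standard choice for pairwise-comparison data is a Bradley-Terry model, in which each senator has a latent position `theta` and P(a is judged more liberal than b) = sigmoid(theta[a] - theta[b]). The sketch below assumes that model. It adds a small L2 penalty so that estimates stay finite when a senator wins or loses every comparison. It also includes a Spearman rank correlation of the kind one might use to compare the result with a reference scale such as NOMINATE. All function names, the penalty, the step size, and the toy data are illustrative assumptions, not the authors' code. A "more conservative" answer can be folded in by simply reversing the pair.

```python
import math
from itertools import combinations


def fit_bradley_terry(items, comparisons, l2=0.01, lr=1.0, n_iter=2000):
    """Fit a (hypothetical) L2-regularized Bradley-Terry model by gradient ascent.

    items: list of senator identifiers.
    comparisons: list of (more_liberal, less_liberal) pairs, one per LLM answer.
    Model: P(a judged more liberal than b) = sigmoid(theta[a] - theta[b]).
    The L2 penalty is an assumption; it keeps theta finite under perfect separation.
    """
    theta = {i: 0.0 for i in items}
    n = len(comparisons)
    for _ in range(n_iter):
        # Gradient of mean log-likelihood minus (l2 / 2) * sum(theta ** 2).
        grad = {i: -l2 * theta[i] for i in items}
        for w, l in comparisons:
            p_w = 1.0 / (1.0 + math.exp(theta[l] - theta[w]))  # model P(w beats l)
            grad[w] += (1.0 - p_w) / n
            grad[l] -= (1.0 - p_w) / n
        for i in items:
            theta[i] += lr * grad[i]
    return theta


def ranks(values):
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def spearman(x, y):
    """Spearman rank correlation: the Pearson correlation of the two rank vectors."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Hypothetical toy data: five made-up senators with made-up positions
    # (higher = more liberal). Each pair is "asked" 3 times and the more
    # liberal senator always wins, standing in for stable LLM answers.
    true_pos = {"S1": -2.0, "S2": -1.0, "S3": 0.0, "S4": 1.0, "S5": 2.0}
    comps = []
    for a, b in combinations(true_pos, 2):
        winner, loser = (a, b) if true_pos[a] > true_pos[b] else (b, a)
        comps.extend([(winner, loser)] * 3)
    theta = fit_bradley_terry(list(true_pos), comps)
    names = list(true_pos)
    print(sorted(theta, key=theta.get))
    print(spearman([theta[s] for s in names], [true_pos[s] for s in names]))
```

On this toy input the recovered ordering matches the made-up positions. With real LLM answers, one would pool the repeated iterations as extra comparisons and correlate `theta` with an external scale. The penalty `l2` trades a little shrinkage toward zero for finite estimates at the ideological extremes.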
